In the ever-expanding landscape of machine learning, the key to building highly accurate and efficient models often lies in the fine-tuning of hyperparameters. Welcome to the world of grid search, a systematic and effective approach to optimizing your model’s performance. In this article, we’ll explore how grid search empowers data scientists and machine learning enthusiasts to discover the ideal combination of hyperparameters, leading to models that excel in predictive accuracy. Join us on a journey through the intricacies of grid search and unlock the potential to harness the full capabilities of your machine learning algorithms.

What is Hyperparameter Tuning?

Machine learning models are akin to finely tuned instruments. Just as a musician adjusts their instrument to produce harmonious melodies, data scientists and machine learning practitioners must calibrate their models to achieve optimal performance. This process of calibration revolves around a critical aspect of machine learning: hyperparameters.

Hyperparameters are the dials and knobs that control the behavior of a machine learning model. They determine how the model learns, adapts, and ultimately makes predictions. Choosing the right hyperparameters is often the key to unlocking a model’s full potential.

Imagine training a model to prepare a dish. You have the ingredients (your data) and a recipe (the algorithm), but the outcome can vary greatly based on how you season it (the hyperparameters). Too much salt (a high learning rate) might ruin the dish (lead to divergence), while too little seasoning (a low learning rate) could leave it bland (resulting in slow convergence).

Hyperparameter tuning is the art of finding that perfect balance. It involves a systematic search for the ideal combination of hyperparameters that maximizes a model’s performance on a specific task. In this article, we will delve into the significance of hyperparameter tuning, explore various tuning techniques, and equip you with the tools to fine-tune your models for remarkable results.

How does the Grid Search work?

Grid search is a systematic approach to hyperparameter tuning that leaves no stone unturned in the quest for the best hyperparameters. It operates on a simple principle: instead of relying on intuition or guesswork, systematically evaluate a predefined set of hyperparameters and their combinations.

Here’s how grid search works:

- Define Hyperparameter Space: First, you specify a range of values or options for each hyperparameter you want to tune. For example, you might consider different learning rates, depths of decision trees, or regularization strengths.

- Create a Grid: Imagine a grid where each axis represents a hyperparameter, and the intersections represent specific combinations of hyperparameters. The grid is created by combining all possible values or options for each hyperparameter. In practice, this grid can be vast, depending on the number of hyperparameters and their options.

- Evaluation Metric: Select a performance metric, such as accuracy, mean squared error, or F1 score, that you want to optimize. This metric quantifies how well your model performs on your task.

- Search: Grid search then exhaustively trains and evaluates your model on each combination of hyperparameters in the grid. For each combination, it records the model’s performance based on the chosen evaluation metric.

- Identify the Best: After evaluating all combinations, grid search identifies the set of hyperparameters that produced the best performance on the evaluation metric. This set represents the optimal configuration for your model.

- Refinement (Optional): Depending on the results, you can perform a more focused grid search in a specific region of the hyperparameter space to fine-tune the configuration further. This can be particularly useful when you suspect that the best hyperparameters lie within a specific range.

Grid search’s strength lies in its exhaustive nature. It guarantees that you explore a comprehensive range of hyperparameters, which is essential for finding the best configuration, especially when the ideal hyperparameters are not obvious. However, it can be computationally expensive, particularly with a large hyperparameter space or complex models. In such cases, more advanced techniques like Randomized Search or Bayesian Optimization may be considered.

In the next sections, we’ll explore practical examples and considerations for applying grid search effectively in your machine learning projects.

How can you include cross validation in Grid Search?

In the quest to find the optimal set of hyperparameters for your machine learning model, cross-validation plays a pivotal role when combined with grid search. Cross-validation is a robust technique for assessing a model’s performance and generalizability, and it’s essential to employ it within the context of grid search for several reasons.

1. Robust Performance Evaluation: Cross-validation helps prevent overfitting and provides a more reliable estimate of a model’s performance. Instead of relying on a single train-test split, cross-validation involves dividing the dataset into multiple subsets or folds. The grid search algorithm evaluates each hyperparameter combination using these folds, yielding a more stable and trustworthy performance metric.

2. Reduces Data Dependency: When you split your data into training and testing sets for a single evaluation, the results may be heavily influenced by the specific instances in each split. Cross-validation mitigates this issue by performing multiple splits and averaging the results, making your performance evaluation less dependent on the luck of the draw.

3. Better Generalization: Cross-validation allows you to assess how well your model generalizes to unseen data. Each fold serves as a test set, ensuring that your hyperparameter choices lead to a model that performs well on different subsets of the data. This is crucial for ensuring that your model performs well on new, unseen examples.

4. Hyperparameter Tuning: Grid search systematically explores various hyperparameter combinations. With cross-validation, each combination is evaluated on different data subsets, giving you a more comprehensive view of how different settings impact the model’s performance. This informs your choice of the best hyperparameters for your specific problem.

5. Trade-Off Between Bias and Variance: Cross-validation helps strike a balance between bias and variance in your model evaluation. A single train-test split may lead to a biased evaluation if the split happens to contain unusual data points. Cross-validation reduces this bias by averaging performance over multiple splits.

Common cross-validation techniques include k-fold cross-validation and stratified k-fold cross-validation. In k-fold cross-validation, the dataset is divided into k equally sized folds, with each fold serving as a test set while the others are used for training. Stratified k-fold cross-validation maintains class distribution ratios in each fold, ensuring that each class is well-represented in both training and testing.

To incorporate cross-validation into your grid search process, you specify the number of folds (k) as one of the parameters. The grid search algorithm then evaluates each hyperparameter combination using k-fold cross-validation, providing a more accurate assessment of how well those hyperparameters generalize to different data partitions.

In summary, cross-validation is an integral component of grid search for hyperparameter tuning. It ensures robust model evaluation, reduces data dependency, and helps you make informed choices about the best hyperparameters for your machine learning model.

Which tips should you consider for effective Grid Search?

Grid search is a powerful tool for hyperparameter tuning, but to make the most of it and avoid common pitfalls, consider the following tips:

- Start with a Coarse Grid: Begin with a coarse grid that covers a wide range of hyperparameter values. This initial exploration helps you identify promising regions of the hyperparameter space without investing excessive computational resources. Once you’ve found a promising range, you can refine the search with a finer grid.

- Prioritize Important Hyperparameters: Not all hyperparameters are equally critical. Focus on tuning the hyperparameters that are likely to have the most significant impact on your model’s performance. Spend more time exploring options for these key hyperparameters, and use wider ranges for less influential ones.

- Use Domain Knowledge: Leverage your domain knowledge and insights about the problem to make informed choices about hyperparameter ranges. Understanding the characteristics of your data and model can help you narrow down the search space effectively.

- Select an Appropriate Scoring Metric: Choose an evaluation metric that aligns with your specific machine learning task. For example, if you’re working on a classification problem with imbalanced classes, consider metrics like F1 score or area under the ROC curve (AUC) rather than accuracy. The chosen metric should reflect the true performance of your model.

- Implement Parallelization: Grid search can be computationally expensive, especially with large datasets and complex models. Take advantage of parallel processing if available, as it can significantly reduce the time required to complete the search. Libraries like scikit-learn provide options for parallel grid search.

- Utilize Randomized Search: If the grid search space is extensive, consider using randomized search in addition to or instead of grid search. Randomized search randomly samples hyperparameter combinations from predefined ranges, which can be more efficient and often leads to good results.

- Regularly Monitor Progress: Keep an eye on the progress of your grid search as it runs. Many machine learning libraries provide progress indicators and early stopping options. If you notice that certain hyperparameter combinations consistently perform poorly, you can halt the search early to save time.

- Log and Document Results: Maintain detailed logs of the grid search results, including the hyperparameter combinations and corresponding evaluation scores. This documentation helps you keep track of the search progress and facilitates comparisons between different configurations.

- Visualize Results: Visualizations can provide valuable insights into the grid search process. Plotting hyperparameter values against evaluation metrics can help you identify trends and relationships, aiding in the selection of optimal configurations.

- Consider Advanced Techniques: Explore advanced techniques like Bayesian optimization, which can be more efficient than grid search for high-dimensional and non-linear hyperparameter spaces. Libraries like “scikit-optimize” provide tools for Bayesian optimization.

- Cross-Validation: Always perform cross-validation within each grid search iteration to obtain more robust performance estimates. Cross-validation helps ensure that the hyperparameters generalize well to unseen data.

- Regularization and Early Stopping: When applicable, consider adding regularization techniques to your model, and implement early stopping during training. These practices can help prevent overfitting, reducing the need for extensive hyperparameter tuning.

By following these tips, you can conduct an effective grid search that systematically explores hyperparameter configurations, leading to improved machine learning model performance while managing computational resources efficiently.

What are the common pitfalls and challenges of Grid Search?

Grid search, although a powerful technique for hyperparameter tuning, presents several challenges and potential pitfalls that practitioners should be aware of. These challenges can impact the effectiveness and efficiency of the tuning process, potentially leading to suboptimal results.

One significant challenge is the Combinatorial Explosion. Grid search systematically explores all possible combinations of hyperparameters within the defined ranges. As the number of hyperparameters and their potential values increase, the search space grows exponentially. This can result in a vast number of model evaluations, making grid search computationally expensive and time-consuming.

Furthermore, grid search can be Resource Intensive. When applied to large datasets or complex models, the computational requirements can become substantial. Evaluating numerous combinations of hyperparameters using cross-validation may demand significant computational power and memory.

Another issue is the Lack of Granularity. The grid of hyperparameters you define may not precisely capture the optimal values. If the true optima fall between the grid points, grid search may miss them. To address this, it might be necessary to perform a more refined search around promising regions after an initial grid search.

One critical consideration is the potential for Overfitting to Validation Data. During the grid search process, there is a risk of selecting hyperparameters that yield the best performance on the validation data. Repeatedly evaluating performance on the validation set can lead to overfitting, where the model becomes too specialized for the validation data but performs poorly on new, unseen data.

In high-dimensional spaces, grid search can face the challenge of the Curse of Dimensionality. As you add more hyperparameters to tune, the dimensionality of the search space increases. In high-dimensional spaces, grid search becomes less effective due to the sparsity of data points, making it challenging to find the true optima.

Additionally, grid search can be Time-Consuming. A comprehensive grid search with a large dataset and many hyperparameters may take an impractical amount of time, especially if you have limited computational resources.

It’s essential to avoid the pitfall of Neglecting Domain Knowledge. Grid search is a brute-force method that doesn’t take into account domain-specific knowledge. Tuning hyperparameters blindly without considering the characteristics of your data or the problem domain can lead to suboptimal results.

Traditional grid search is usually Sequential, evaluating one hyperparameter combination at a time. This lack of parallelism can be inefficient, particularly if you have access to multiple computing resources. Parallelizing the search can significantly speed up the process.

Lastly, grid search can be Inefficient in Exploration. It explores hyperparameter combinations uniformly, regardless of the results of previous evaluations. This can be inefficient if promising regions of the hyperparameter space are found early in the search, as the algorithm will continue to explore less promising areas.

To mitigate these challenges, practitioners can consider alternative hyperparameter optimization methods, such as Random Search or Bayesian optimization. Random Search randomly samples hyperparameters from predefined ranges, which can be more efficient in high-dimensional spaces. Bayesian optimization employs probabilistic models to guide the search toward promising regions of the hyperparameter space, often requiring fewer evaluations than grid search.

In conclusion, while grid search is a valuable tool for hyperparameter tuning, it’s essential to be mindful of its potential pitfalls and limitations. Careful planning, efficient resource utilization, and the consideration of alternative optimization methods can help practitioners overcome these challenges and find the best hyperparameters for their machine learning models.

How can you use grid search in Python?

Grid search is a widely used technique for hyperparameter tuning in Python, and it can be easily implemented using popular machine learning libraries such as scikit-learn. Here’s a step-by-step guide on how to perform grid search in Python using an example dataset:

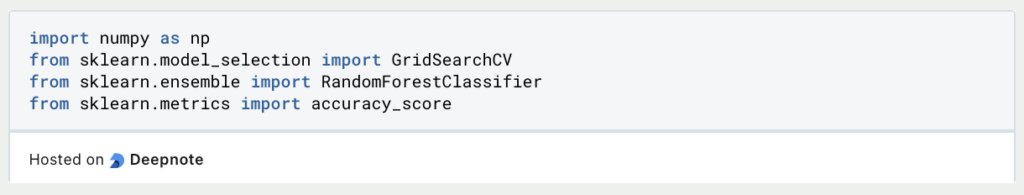

- Import Necessary Libraries: To get started, import the required libraries. You’ll need scikit-learn for machine learning tasks and other libraries for data manipulation and evaluation.

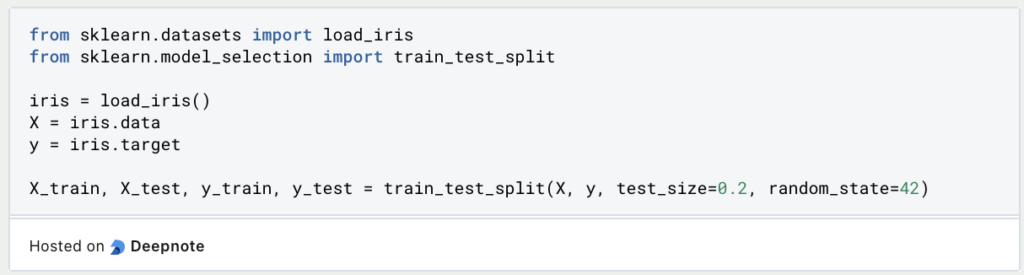

- Prepare Your Data: Load your dataset and split it into training and testing sets, as you would for any machine learning task. For this example, let’s consider the popular Iris dataset.

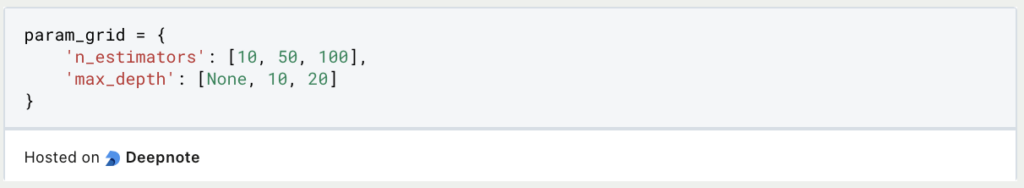

- Define the Hyperparameter Grid: Create a dictionary where keys are the hyperparameter names, and values are lists of possible values to be explored during the grid search. For instance, if you’re tuning a Random Forest Classifier, you might define a grid for hyperparameters like ‘n_estimators’ and ‘max_depth.’

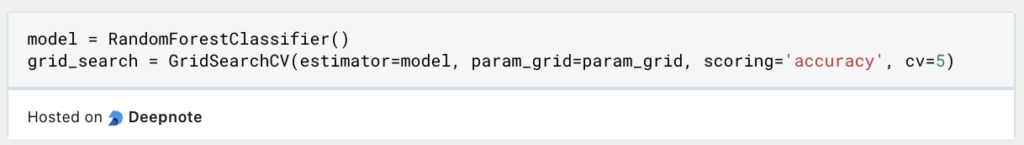

- Initialize the Model and Grid Search: Create an instance of the machine learning model you want to tune (e.g., RandomForestClassifier) and the GridSearchCV object, specifying the model, parameter grid, and evaluation metric.

- Fit the Grid Search: Fit the grid search object to your training data. This will systematically evaluate all possible combinations of hyperparameters using cross-validation.

- Get the Best Parameters: After the grid search is complete, you can access the best hyperparameters found during the search.

- Evaluate the Model: Use the model with the best hyperparameters to make predictions on your test data and evaluate its performance.

Grid search in Python simplifies the process of hyperparameter tuning by systematically exploring different combinations. It’s a valuable tool for finding the best hyperparameters for your machine learning models, ultimately leading to improved model performance.

This is what you should take with you

- Grid search is a powerful technique for hyperparameter tuning in machine learning.

- It systematically explores combinations of hyperparameters to find the best configuration.

- Grid search helps improve model performance by optimizing hyperparameters.

- Careful consideration of the grid size and parameter ranges is essential for efficient tuning.

- Cross-validation ensures reliable performance evaluation during grid search.

- Grid search can face challenges like the combinatorial explosion, resource intensity, and overfitting.

- Alternative methods like random search and Bayesian optimization offer efficient alternatives.

- Successful grid search requires a balance between exploration and exploitation.

- It’s a valuable tool for finding optimal hyperparameters and enhancing model performance.

- Combining grid search with domain knowledge can lead to superior results in machine learning tasks.

What is a Boltzmann Machine?

Unlocking the Power of Boltzmann Machines: From Theory to Applications in Deep Learning. Explore their role in AI.

What is the Gini Impurity?

Explore Gini impurity: A crucial metric shaping decision trees in machine learning.

What is the Hessian Matrix?

Explore the Hessian matrix: its math, applications in optimization & machine learning, and real-world significance.

What is Early Stopping?

Master the art of Early Stopping: Prevent overfitting, save resources, and optimize your machine learning models.

What is RMSprop?

Master RMSprop optimization for neural networks. Explore RMSprop, math, applications, and hyperparameters in deep learning.

What is the Conjugate Gradient?

Explore Conjugate Gradient: Algorithm Description, Variants, Applications and Limitations.

Other Articles on the Topic of Grid Search

Here is the documentation for Grid Search in Scikit-Learn.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.