Docker is a software tool, first released in 2013, that supports the creation, testing, and deployment of applications. Its central idea is that an application is split into smaller units, so-called containers, that run independently of each other. This makes the overall system leaner and, above all, more resource-efficient.

How do Docker Containers work?

At the heart of the Docker open source project are the so-called containers. Containers are stand-alone units that can be executed independently of each other and always run the same way. A practical way to think of a Docker container is as a cargo container on a ship. Let’s assume that in this container three people are working on a certain task (I know that this probably violates every applicable occupational health and safety law, but it fits our example very well).

In this container they find all the resources and machines they need for their task. They receive the raw materials they need via one hatch in the container and release the finished product via another hatch. Our shipping container can thus operate undisturbed and largely self-sufficiently. The people inside will not notice whether the ship carrying the container is currently in the port of Hamburg, in Brazil, or somewhere on a calm open sea. As long as they are continuously supplied with raw materials, they will carry out their task no matter where they are.

It is the same with Docker containers in the software environment. They are precisely defined, self-contained applications that can run on different machines. As long as they receive the defined inputs, they keep working, regardless of the machine they run on.

How is it different from Virtual Machines?

The idea of splitting an application into different subtasks and possibly running them on different computers was not new in 2013. Previously, virtual machines (VMs) were used for such tasks.

Virtual machines can be thought of, in a very simplified way, like the shared family computer of some years ago, when computers were not as common as they are today. Some may remember that there was only one computer per family, with a separate Windows user account for each family member. Each person stored files or installed software, such as games, under their own account, and these were not accessible to the others.

Virtual machines, simply put, use the same principle: several isolated systems, the VMs, are created on a single physical device. For the users, it seems as if each of them is using a separate, isolated computer, although all of them share the same physical machine and its resources, such as CPUs and memory. This virtualization architecture has various advantages: the hardware is bundled and can thus be used more efficiently, for example in terms of cooling. Users, in turn, only need a relatively low-power device, such as a laptop, to connect to the virtual machine and can still perform compute-intensive tasks, since they are using the VM’s hardware.

Another practical way to think of virtual machines is as a single laptop on which Windows, macOS, and Linux are installed side by side.

Docker containers differ from virtual machines in that they make individual functions within an application separable. Unlike a VM, a container does not bring its own complete operating system but shares the kernel of the host system. This means that several containers can run on one virtual machine. Furthermore, the containers are simple, self-contained packages that are correspondingly easy to move.

What are the advantages of Docker Containers?

- Containers are efficient and resource-saving because they share the host’s operating system instead of each starting a complete one. This also makes them easier to handle and manage.

- When developing applications in a team, containers help because they can be moved between systems very easily and run there without changes. This makes it easy to share the current state of development with colleagues, and it behaves the same way for all team members.

- Containers make an application scalable. If a function is called frequently, for example on a website, the corresponding containers can be started up in a larger number and can be shut down again as soon as the rush is over.
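
The scaling idea from the last point can be sketched in a few lines of Python. This is only an illustration with made-up numbers: the function name `replicas_needed`, the capacity per container, and the lower and upper limits are assumptions and not part of Docker itself. In practice, an orchestration tool would take this decision.

```python
import math


def replicas_needed(requests_per_second: float,
                    capacity_per_container: float,
                    min_replicas: int = 1,
                    max_replicas: int = 10) -> int:
    """Return how many identical containers to run for the current load.

    All parameters are hypothetical; real values depend on the application.
    """
    if capacity_per_container <= 0:
        raise ValueError("capacity_per_container must be positive")
    wanted = math.ceil(requests_per_second / capacity_per_container)
    # Clamp to the allowed range so we never scale to zero or without bound.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Quiet period, normal traffic, and a rush on the website.
    for load in (20, 450, 5000):
        print(load, "req/s ->", replicas_needed(load, capacity_per_container=100))
```

Because every container started from the same image behaves identically, the only thing that changes during a rush is this number.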

How to make an application fit for Docker?

Dockerizing an application involves creating an image that contains all the necessary dependencies, libraries, and configuration files required to run the application. Here are the general steps to Dockerize an application:

- Write a Dockerfile: This is a script that contains instructions on how to create an image. It specifies the base image to use, the commands to execute to install the required dependencies and configure the environment, and the files to include in the image.

- Build the image: Run the docker build command to build the image from the Dockerfile.

- Start the container: Run the docker run command to create a container from the image. You can specify port mapping, environment variables, and other configuration options using command line arguments.

- Test the container: Once the container is up and running, test the application by accessing it through a web browser or command line tool.
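
The first three steps above can be sketched in Python as follows. This is a minimal sketch under assumptions: the Dockerfile describes a hypothetical small Python web application, and the base image tag, the file names `requirements.txt` and `app.py`, the port 8000, and the helper function names are chosen for illustration only. The functions merely assemble the `docker build` and `docker run` calls as argument lists; they do not start Docker themselves.

```python
from pathlib import Path

# Hypothetical Dockerfile for a small Python web app; the base image tag,
# file names, and port are assumptions for illustration.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 8000
CMD ["python", "app.py"]
"""


def write_dockerfile(project_dir: Path) -> Path:
    """Step 1: write the Dockerfile into the project directory."""
    path = project_dir / "Dockerfile"
    path.write_text(DOCKERFILE, encoding="utf-8")
    return path


def build_command(tag: str, context: str = ".") -> list[str]:
    """Step 2: the `docker build` call that turns the Dockerfile into an image."""
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str, host_port: int, container_port: int) -> list[str]:
    """Step 3: the `docker run` call that starts a container from the image."""
    # -d runs the container in the background, --rm removes it after it stops,
    # -p maps a port of the host to a port inside the container.
    return ["docker", "run", "--rm", "-d",
            "-p", f"{host_port}:{container_port}", tag]
```

On a machine with Docker installed, each list could be passed to `subprocess.run(cmd, check=True)`. For the fourth step, the application would then be reachable in the browser on the chosen host port.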

Below are some tips to keep in mind when Dockerizing an application:

- Use an official base image from Docker Hub that matches the programming language and version of the application.

- Minimize the number of layers in the image to reduce its size and improve performance.

- Use environment variables to pass sensitive information such as passwords and API keys to the Docker container at runtime instead of writing them into the image.

- Use Docker volumes to store application-generated data outside the container.

- Use Docker Compose to orchestrate multiple containers that form a complex application.
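
Two of these tips, environment variables and volumes, can be illustrated with a short Python sketch. The helper names, the variable name `API_KEY`, the volume name, and the mount path are assumptions for the example. The first function shows how an application inside the container could read a secret. The second assembles a `docker run` call that forwards the variable and mounts a named volume.

```python
import os


def read_required_env(name: str) -> str:
    """Read a setting from the environment and fail early if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"environment variable {name} is not set")
    return value


def run_with_config(image: str, env_names: list[str],
                    volume: str, mount_point: str) -> list[str]:
    """Assemble a `docker run` call with forwarded variables and a volume."""
    cmd = ["docker", "run", "--rm", "-d"]
    for name in env_names:
        # "-e NAME" without "=value" forwards the value from the calling
        # shell, so the secret is not written into the command or the image.
        cmd += ["-e", name]
    # "-v volume:/path" mounts a named volume, so data written to that path
    # survives when the container is removed.
    cmd += ["-v", f"{volume}:{mount_point}", image]
    return cmd
```

Failing early on a missing variable makes a misconfigured container stop at startup instead of much later, when the secret is first used.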

When do we use containers and when virtual machines?

| Docker Container | Virtual Machine |
| --- | --- |
| Use of microservice architectures (many small applications) | An operating system is to be usable in another operating system |
| Easy transport of applications from test environment to productive environment is required | A “large” application is to be executed, so-called monoliths |
| | Hardware resources, such as memory, networks, or servers, are to be provisioned |

What do the Docker images do?

Docker images are the files in which the contents and configuration of a container are defined and stored. They contain everything needed to start a container: the application code, its dependencies, and the settings. Images can therefore be moved easily between systems, and any number of containers can be started from them.

Based on this, we can use Kubernetes, for example, to simplify and automate the management of containers.

What is the Docker Hub?

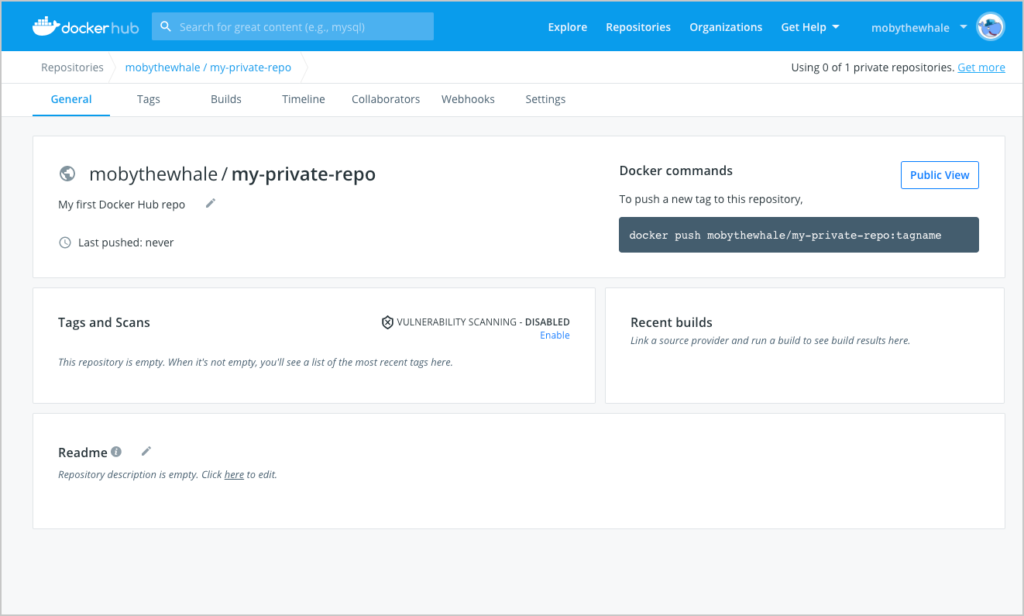

Docker Hub is an online platform where you can both upload and download images. This means that code assets can be distributed and shared within a team or an organization. It works similarly to GitHub, for example, but with the difference that Docker images are distributed via it.

Docker Hub also offers many features intended to facilitate teamwork. For example, workgroups and teams can be created, and the editing of images can be restricted to individual teams. To use Docker Hub, you have to create an account on its website and log in. After logging in, you can create and use your first repositories.
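
Uploading and downloading images could look like the following Python sketch. The account name `example-team`, the repository name `web-shop`, and the helper functions are hypothetical. The sketch only assembles the `docker login`, `docker tag`, `docker push`, and `docker pull` calls as argument lists and does not contact Docker Hub.

```python
def hub_reference(namespace: str, repository: str, tag: str = "latest") -> str:
    """Build an image reference of the form namespace/repository:tag."""
    return f"{namespace}/{repository}:{tag}"


def publish_commands(local_image: str, namespace: str,
                     repository: str, tag: str = "latest") -> list[list[str]]:
    """Commands to upload a locally built image to a Docker Hub repository."""
    remote = hub_reference(namespace, repository, tag)
    return [
        ["docker", "login"],                    # authenticate with the account
        ["docker", "tag", local_image, remote], # give the image its Hub name
        ["docker", "push", remote],             # upload it to the repository
    ]


def pull_command(namespace: str, repository: str, tag: str = "latest") -> list[str]:
    """Command a colleague would use to download the same image."""
    return ["docker", "pull", hub_reference(namespace, repository, tag)]


if __name__ == "__main__":
    for cmd in publish_commands("web-shop:1.0", "example-team", "web-shop", "1.0"):
        print(" ".join(cmd))
    print(" ".join(pull_command("example-team", "web-shop", "1.0")))
```

Using an explicit tag such as `1.0` instead of relying on `latest` makes it clear which version of the image a team member is running.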

How to use Docker Compose?

For most complex applications, a single Docker image is not enough to cover all functions. Docker Compose therefore helps to set up and connect several containers, using a YAML file to configure the services that make up the application.

The YAML file contains several pieces of information. First, all services that are to be connected are listed together with their images. The “volumes” section defines where data is stored persistently. The “networks” section defines the communication rules between the containers and between a container and the host.
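
A Compose file with these sections might look like the following sketch, embedded here in a small Python script. The service names `web` and `db`, the ports, the volume and network names, and the helper functions are assumptions for illustration. The script only holds the file content, lists its top-level sections, and assembles the `docker compose` call.

```python
# Hypothetical Compose file for a web application with a database.
COMPOSE_FILE = """\
services:
  web:
    build: .
    ports:
      - "8080:8000"
    environment:
      - DATABASE_HOST=db
    networks:
      - backend
    depends_on:
      - db
  db:
    image: postgres:16
    environment:
      # Name only: the value is taken from the shell that starts Compose.
      - POSTGRES_PASSWORD
    volumes:
      - db-data:/var/lib/postgresql/data
    networks:
      - backend

volumes:
  db-data:

networks:
  backend:
"""


def top_level_sections(compose_text: str) -> list[str]:
    """Return the unindented keys, i.e. the top-level sections of the file."""
    return [line.rstrip(":") for line in compose_text.splitlines()
            if line and not line[0].isspace()
            and not line.startswith("#") and line.endswith(":")]


def compose_up_command(file_name: str = "compose.yaml") -> list[str]:
    """The call that starts all services of the file in the background."""
    return ["docker", "compose", "-f", file_name, "up", "-d"]


if __name__ == "__main__":
    print(top_level_sections(COMPOSE_FILE))
    print(" ".join(compose_up_command()))
```

In this sketch, both services join the same network, so the `web` container can reach the database under the host name `db`, while the named volume keeps the database files when the `db` container is replaced.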

What are real-world applications of Docker?

There are numerous applications where Docker is being used to improve and streamline various aspects of software development and deployment. Here are a few examples:

- PayPal: PayPal was able to reduce deployment time from weeks to minutes by containerizing its applications. This has allowed them to scale their services more easily and reduce the risk of downtime during deployment.

- GE: General Electric (GE) uses Docker to deploy microservices across its various business units. This has enabled the company to modernize its infrastructure and reduce its reliance on legacy systems.

- Spotify: Spotify has been using Docker to containerize its applications for several years. This has allowed the company to speed up deployment and reduce the risk of downtime during upgrades. It has also reduced infrastructure costs by running applications on fewer servers.

- eBay: eBay uses Docker to containerize its applications and make the development process more efficient. The company was able to reduce the time it takes to deploy new environments from weeks to minutes.

- ING: ING, a Dutch multinational banking and financial services company, is using Docker to containerize its applications and make its infrastructure more flexible and scalable. This has enabled the company to reduce time-to-market for new products and services.

These case studies demonstrate the versatility and usefulness of Docker across different industries and applications.

This is what you should take with you

- Docker containers make it possible to develop small, self-contained applications that can be transported between different systems and run reliably.

- They are mainly used when applications are to run in the cloud or are to be moved between different environments quickly and easily.

- Docker containers are significantly more resource-efficient than virtual machines.

- The configuration of the containers is stored and defined in the Docker images.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.