Outlier detection, also known as anomaly detection, is a technique in data analysis used to identify data points that deviate significantly from the expected patterns or behavior of a dataset. Outliers can represent errors in data collection, measurement, or processing, or they may indicate rare events or phenomena of particular interest to researchers or practitioners. Outlier detection is used in various fields, including finance, healthcare, engineering, and security, to detect fraud, diagnose medical conditions, monitor machine performance, and identify potential security threats.

In this article, we will explore the techniques, algorithms, and applications of outlier detection and their limitations and challenges.

What are the types of Outliers?

Outliers are observations in a dataset that are significantly different from other observations. There are several types of outliers that can occur in a dataset:

- Point outliers: These are individual data points that are significantly different from other data points in the dataset. For example, in a dataset of student grades, a student who scores much higher or lower than the other students could be a point outlier.

- Contextual outliers: These are data points that are significantly different from other data points in a specific context, but not necessarily in the overall dataset. For example, in a dataset of daily temperatures, a temperature that is much higher or lower than usual for a specific location and time of year could be a contextual outlier.

- Collective outliers: These are groups of data points that deviate from the rest of the dataset as a group, even if each individual point may look unremarkable on its own. For example, in a dataset of housing prices, a group of houses in a specific neighborhood that are significantly more or less expensive than houses in other neighborhoods could be a collective outlier.

- Continuous outliers: These are data points that deviate significantly from the expected pattern in a continuous fashion. For example, in a dataset of stock prices, a stock that experiences a continuous decline or rise over a period of time could be a continuous outlier.

- Masked outliers: These are outliers that are hidden within the noise of the dataset and are difficult to detect. For example, in a dataset of patient health records, a patient who has a rare and undiagnosed condition could be a masked outlier.

Understanding the type of outlier in a dataset is important for selecting an appropriate outlier detection method.
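The difference between a point outlier and a contextual outlier can be made concrete with the temperature example from the list above. The following is only a minimal sketch: the temperature values, the grouping by month, and the z-score cut-off of 2.5 are assumptions chosen for illustration.

```python
from statistics import mean, stdev

# Hypothetical daily temperatures in °C, each labeled with its month.
january = [1.0, 2.5, 0.5, 1.5, 2.0, 0.0, 1.0, 3.0, 1.5, 19.0]
july = [24.0, 26.0, 25.0, 27.0, 25.5, 24.5, 26.5, 25.0, 26.0, 25.5]
readings = [("Jan", t) for t in january] + [("Jul", t) for t in july]

THRESHOLD = 2.5  # illustrative cut-off for the absolute z-score


def flag_outliers(group):
    """Return the readings whose z-score within `group` exceeds the threshold."""
    temps = [t for _, t in group]
    mu, sigma = mean(temps), stdev(temps)
    return [r for r in group if abs(r[1] - mu) / sigma > THRESHOLD]


# Global view: compare every reading with the whole year.
global_outliers = flag_outliers(readings)

# Contextual view: compare every reading only with its own month.
context_outliers = []
for month in ("Jan", "Jul"):
    context_outliers += flag_outliers([r for r in readings if r[0] == month])

print(global_outliers)   # []
print(context_outliers)  # [('Jan', 19.0)]
```

A reading of 19 °C is unremarkable over the whole year, but it stands out once it is compared only with other January readings.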

Why is Data Preprocessing important in Outlier Detection?

Data preprocessing is a crucial step in outlier detection as it helps ensure the quality and reliability of the data. Preprocessing techniques involve various steps, including data cleaning, transformation, and normalization, which aim to enhance the accuracy and effectiveness of outlier detection algorithms.

One essential aspect of data preprocessing is data cleaning, which involves handling missing values, obvious data errors, and noise. Missing values can distort the analysis, so they need to be handled through techniques such as imputation or deletion. Erroneous extreme values, such as impossible measurements or data entry mistakes, can distort the statistics that many detection algorithms rely on, for example the mean and the standard deviation. They should therefore be identified and treated using statistical methods or domain knowledge before the actual analysis. Noise, which refers to random variations or errors in the data, can also affect the accuracy of outlier detection algorithms. Applying smoothing or filtering techniques can help reduce noise and improve the quality of the data.

Data transformation is another important step in preprocessing for outlier detection. It involves converting the data into a suitable form that meets the assumptions of outlier detection algorithms. Common transformations include logarithmic, square root, or Box-Cox transformations, which can help normalize the data and improve its distribution properties. Transforming skewed or non-normal data can contribute to more accurate outlier detection results.
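As a small illustration of such a transformation, the sketch below applies a logarithm to strongly right-skewed values and compares the skewness before and after. The values and the helper `skewness` are assumptions made up for this example. A Box-Cox transformation would need an additional library and is not shown.

```python
import math
from statistics import mean, pstdev


def skewness(values):
    """Population skewness: the mean cubed z-score (0 for symmetric data)."""
    mu, sigma = mean(values), pstdev(values)
    return mean(((v - mu) / sigma) ** 3 for v in values)


# Hypothetical, strongly right-skewed transaction amounts.
amounts = [1, 2, 4, 8, 16, 32, 64, 128, 256]

# The logarithm compresses large values much more than small ones.
log_amounts = [math.log(v) for v in amounts]

raw_skew = skewness(amounts)      # clearly positive (right-skewed)
log_skew = skewness(log_amounts)  # close to zero (symmetric)
print(round(raw_skew, 2), round(log_skew, 2))
```

The logarithm is only defined for positive values, so data containing zeros or negative numbers would need a shift or a different transformation.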

Normalization is also a critical preprocessing step that ensures the data is on a consistent scale. It helps eliminate the influence of varying units or scales on the outlier detection process. Techniques such as min-max scaling or z-score normalization are commonly used to normalize the data and bring it within a specific range or distribution.
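Both techniques can be written down in a few lines. The sketch below uses made-up values and plain Python. Min-max scaling maps the data to the range from 0 to 1, while z-score normalization yields data with mean 0 and standard deviation 1.

```python
from statistics import mean, pstdev

# Hypothetical feature values on an arbitrary scale.
values = [10.0, 20.0, 30.0, 40.0, 100.0]

# Min-max scaling: (x - min) / (max - min), result lies in [0, 1].
lo, hi = min(values), max(values)
min_max = [(v - lo) / (hi - lo) for v in values]

# Z-score normalization: (x - mean) / standard deviation.
mu, sigma = mean(values), pstdev(values)
z_scores = [(v - mu) / sigma for v in values]

print([round(v, 3) for v in min_max])
print([round(v, 3) for v in z_scores])
```

Note that both variants are themselves sensitive to extreme values: a single very large value determines the maximum in min-max scaling and inflates the standard deviation in the z-score.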

Overall, data preprocessing plays a vital role in outlier detection by ensuring the data is clean, transformed appropriately, and normalized. These steps help enhance the accuracy and reliability of outlier detection algorithms, enabling the identification of meaningful outliers and valuable insights in the data.

Which methods are used for Outlier Detection?

Outlier detection is an essential technique in data mining and statistical analysis. It helps in identifying the anomalous data points that do not conform to the expected behavior of the majority of the data. Outliers can be present in various forms, such as errors, noise, or exceptional values, which can distort the statistical properties of the dataset. Therefore, detecting outliers is crucial for improving the accuracy and reliability of the analysis results. There are several outlier detection techniques available, including:

- Statistical Methods: Statistical methods analyze the distribution of the data and flag the data points that fall far into its tails. Examples include the z-score method, which measures how many standard deviations a point lies from the mean, and Tukey’s method, which underlies the box plot and flags points that lie more than 1.5 times the interquartile range below the first or above the third quartile.

- Distance-Based Methods: Distance-based methods measure the distance between data points and flag those that lie far away from the rest of the data. A typical example is the k-nearest neighbors method, which scores each point by the distance to its k nearest neighbors. The Local Outlier Factor (LOF) method is also built on nearest-neighbor distances, although it compares local densities and is therefore often counted among the density-based methods.

- Density-Based Methods: Density-based methods identify outliers based on the density of the data points around them. Points in sparse regions, far from any dense cluster, are treated as outliers. Examples of density-based methods include the DBSCAN method, which labels points that do not belong to any cluster as noise, and the OPTICS method.

- Machine Learning Methods: Machine learning methods use algorithms to learn the patterns in the data and identify the outliers based on the learned models. Examples of Machine Learning methods include the Isolation Forest method and the One-class SVM method.
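The two statistical rules from the list above, the z-score and Tukey’s fences, can be sketched with the Python standard library. The response times are invented, and the z-score cut-off of 2.5 is an assumption. A value of 3 is a common choice for larger samples.

```python
from statistics import mean, stdev, quantiles

# Hypothetical response times in milliseconds with one suspicious value.
response_times = [52, 55, 58, 60, 61, 63, 65, 67, 70, 120]

# Z-score rule: flag values far from the mean in units of standard deviations.
mu, sigma = mean(response_times), stdev(response_times)
z_outliers = [t for t in response_times if abs(t - mu) / sigma > 2.5]

# Tukey's rule: flag values beyond 1.5 * IQR outside the quartiles.
q1, _, q3 = quantiles(response_times, n=4, method="inclusive")
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
tukey_outliers = [t for t in response_times if t < lower or t > upper]

print(z_outliers)      # [120]
print(tukey_outliers)  # [120]
```

Tukey’s rule relies on quartiles, which are barely affected by the extreme value itself, whereas the mean and standard deviation in the z-score are pulled towards it.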

The choice of the outlier detection technique depends on the type of data, the nature of outliers, and the specific requirements of the analysis. Each technique has its advantages and limitations, and it is essential to choose the most suitable method for the analysis.
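As an example of a neighborhood-based method, the following sketch applies the Local Outlier Factor implementation from scikit-learn to a small artificial dataset. The data (a regular grid plus one distant point) and the choice of `n_neighbors=5` are assumptions made for illustration only.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

# Artificial data: a regular 5 x 5 grid of points plus one distant point.
grid = [(x, y) for x in np.linspace(0, 1, 5) for y in np.linspace(0, 1, 5)]
X = np.array(grid + [(5.0, 5.0)])

# LOF compares the local density of each point with that of its neighbors.
lof = LocalOutlierFactor(n_neighbors=5)
labels = lof.fit_predict(X)            # 1 = inlier, -1 = outlier
scores = lof.negative_outlier_factor_  # more negative = more abnormal

print(labels[-1], round(scores[-1], 2))
```

The distant point receives the label -1, while the grid points, whose neighborhoods are about as dense as those of their neighbors, are labeled as inliers.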

In the following sections, we will look at the evaluation metrics used to measure the performance of these techniques, ways to visualize outliers in Python, typical applications, and the challenges associated with outlier detection.

How to evaluate Outlier Detection Methods?

Outlier detection methods can be evaluated based on various factors such as their effectiveness, efficiency, interpretability, and scalability. In order to evaluate the performance of an outlier detection method, it is necessary to have a dataset with known anomalies, which can be used to calculate various evaluation metrics.

One common evaluation metric for outlier detection is the area under the receiver operating characteristic (ROC) curve. The ROC curve plots the true positive rate against the false positive rate for different threshold values. The area under the curve (AUC) provides a measure of the overall performance of the method, with a higher AUC indicating better performance.
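The AUC can also be read as the probability that a randomly chosen anomaly receives a higher score than a randomly chosen normal point. The sketch below computes it this way in plain Python. The labels and scores are made up, and higher scores are assumed to mean "more anomalous".

```python
def roc_auc(labels, scores):
    """AUC as the share of (anomaly, normal) pairs ranked correctly; ties count half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical ground truth (1 = anomaly) and anomaly scores of a detector.
labels = [0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
scores = [0.10, 0.20, 0.15, 0.30, 0.05, 0.40, 0.25, 0.90, 0.70, 0.60]

auc = roc_auc(labels, scores)
print(auc)  # 0.9375
```

Here 15 of the 16 anomaly-normal pairs are ordered correctly, which gives an AUC of 0.9375. A value of 0.5 would correspond to random ranking.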

Another evaluation metric is the precision-recall (PR) curve, which plots the precision (positive predictive value) against the recall (sensitivity) for different threshold values. The area under the PR curve can also be used as a measure of the overall performance of the method, particularly when the dataset is imbalanced with a small number of anomalies.
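One common way to summarize the PR curve in a single number is the average precision, that is, the mean of the precision values at the ranks where an anomaly is found. The following plain-Python sketch uses invented labels and scores and, as a simplifying assumption, does not treat tied scores specially.

```python
def average_precision(labels, scores):
    """Mean of the precision values at each rank where an anomaly appears."""
    ranked = sorted(zip(scores, labels), reverse=True)
    hits, precisions = 0, []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / hits


# Hypothetical ground truth (1 = anomaly) and anomaly scores of a detector.
labels = [0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
scores = [0.10, 0.20, 0.15, 0.30, 0.05, 0.40, 0.25, 0.90, 0.70, 0.60]

ap = average_precision(labels, scores)
print(round(ap, 4))  # 0.8333
```

The two anomalies appear at ranks 1 and 3, so the precision values are 1/1 and 2/3, and their mean is about 0.83.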

Other evaluation metrics include the F1 score, which combines precision and recall into a single metric as their harmonic mean, and the lift at K metric, which compares the proportion of anomalies among the top K-ranked instances with the proportion of anomalies in the whole dataset.
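Both metrics can be sketched in a few lines of plain Python. The labels, scores, threshold, and value of K are assumptions for illustration, and the F1 helper assumes that at least one instance is flagged and at least one anomaly exists.

```python
def f1_at_threshold(labels, scores, threshold):
    """F1 score when every instance with score >= threshold is flagged."""
    predicted = [s >= threshold for s in scores]
    tp = sum(p and y == 1 for p, y in zip(predicted, labels))
    fp = sum(p and y == 0 for p, y in zip(predicted, labels))
    fn = sum((not p) and y == 1 for p, y in zip(predicted, labels))
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def lift_at_k(labels, scores, k):
    """Anomaly rate among the top-k scores divided by the overall anomaly rate."""
    ranked = sorted(zip(scores, labels), reverse=True)
    top_rate = sum(label for _, label in ranked[:k]) / k
    base_rate = sum(labels) / len(labels)
    return top_rate / base_rate


# Hypothetical ground truth (1 = anomaly) and anomaly scores of a detector.
labels = [0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
scores = [0.10, 0.20, 0.15, 0.30, 0.05, 0.40, 0.25, 0.90, 0.70, 0.60]

f1 = f1_at_threshold(labels, scores, threshold=0.5)
lift = lift_at_k(labels, scores, k=3)
print(round(f1, 2), round(lift, 2))  # 0.8 3.33
```

A lift of about 3.3 means that anomalies are roughly three times as frequent among the three highest-scoring instances as in the dataset overall.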

In addition to quantitative evaluation metrics, it is also important to consider the interpretability of the method and the ability to explain the detected outliers. Finally, the scalability of the method should also be considered, particularly for large datasets where efficiency becomes a critical factor in the selection of an appropriate outlier detection method.

How do you visualize outliers in diagrams?

Interpreting and visualizing outliers plays a crucial role in understanding their nature, identifying potential causes, and gaining insights from the data. Python provides a rich ecosystem of libraries and tools for outlier interpretation and visualization. In this section, we will explore some techniques and examples to effectively interpret and visualize outliers using Python.

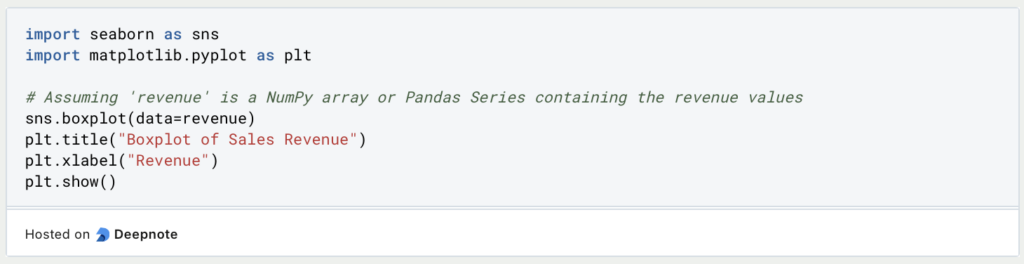

- Boxplots and Scatter Plots: Boxplots and scatter plots are commonly used visualization techniques to identify and interpret outliers. The Seaborn and Matplotlib libraries in Python offer easy-to-use functions for creating such plots. Example:

Let’s consider a dataset containing information about the sales revenue of various stores. We can create a boxplot to visualize the distribution of revenue and identify potential outliers:
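A minimal sketch of such a boxplot with Matplotlib could look as follows. The revenue figures and the axis label are invented for illustration. Seaborn offers a comparable `boxplot` function, which is not used here.

```python
import matplotlib.pyplot as plt

# Hypothetical monthly revenue per store in thousand EUR.
revenue = [120, 135, 128, 142, 131, 138, 125, 133, 129, 310]

fig, ax = plt.subplots()
result = ax.boxplot(revenue)  # whiskers extend to 1.5 * IQR by default
ax.set_ylabel("Monthly revenue (thousand EUR)")
ax.set_title("Revenue per store")

# Matplotlib draws points beyond the whiskers as "fliers".
fliers = result["fliers"][0].get_ydata()
print(fliers)
```

Calling `plt.show()` afterwards displays the figure. The `fliers` array contains exactly the values that are drawn as individual points beyond the whiskers.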

The boxplot will display the quartiles, median, and any potential outliers beyond the whiskers. Outliers can be identified as points lying outside the whiskers.

Additionally, scatter plots can be used to visualize relationships between variables and identify potential outliers. Outliers often appear as data points deviating significantly from the general trend in scatter plots.

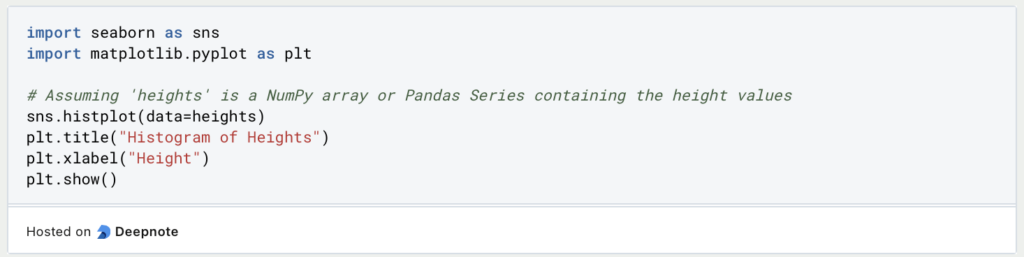

- Histograms and Density Plots: Histograms and density plots provide insights into the distribution of data and can help detect outliers. Python libraries such as Matplotlib and Seaborn offer functions for creating these visualizations. Example:

Suppose we have a dataset containing the heights of individuals. We can create a histogram to visualize the distribution of heights and identify potential outliers:
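The following sketch draws such a histogram with Matplotlib. The heights and the number of bins are assumptions made for this example.

```python
import matplotlib.pyplot as plt

# Hypothetical body heights in centimeters with one implausible entry.
heights = [165, 170, 172, 168, 175, 171, 169, 174, 173, 167, 170, 172, 210]

fig, ax = plt.subplots()
counts, bin_edges, _ = ax.hist(heights, bins=10)
ax.set_xlabel("Height (cm)")
ax.set_ylabel("Number of individuals")

# Isolated bins far away from the bulk of the data hint at outliers.
print(counts)
```

Calling `plt.show()` displays the figure. In this made-up sample, the value of 210 cm ends up alone in the last bin, separated from the main distribution by several empty bins.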

Outliers in the histogram may appear as values that deviate significantly from the main distribution.

- Interactive Visualizations: Python libraries such as Plotly and Bokeh provide interactive visualization capabilities, allowing users to explore and interact with data visually. These libraries offer features like zooming, panning, and hovering over data points to obtain detailed information. Example:

Let’s consider a dataset containing housing prices. We can create an interactive scatter plot using Plotly to explore the relationship between the area of the house and its price, while also identifying outliers:

The interactive scatter plot allows us to hover over data points to obtain specific information about each point, including potential outliers.

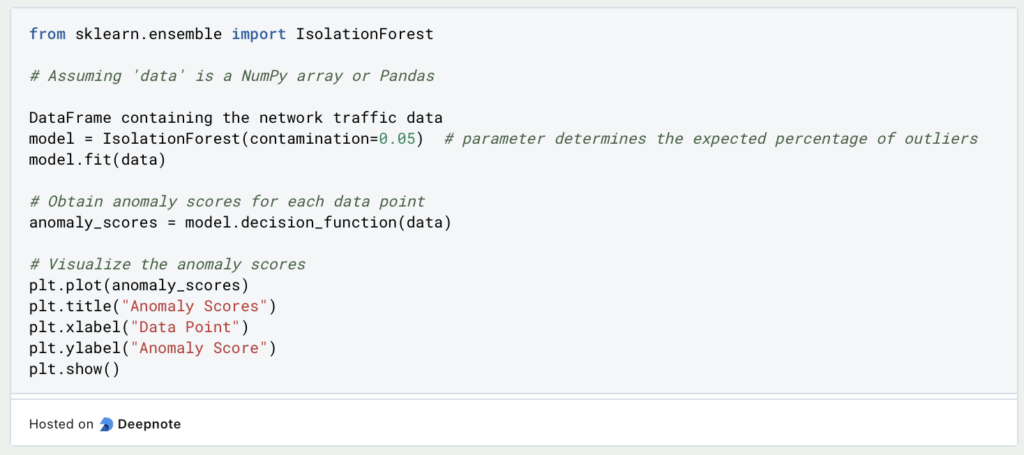

- Anomaly Detection Algorithms: Python libraries such as scikit-learn and PyOD provide implementations of various anomaly detection algorithms. These algorithms not only identify outliers but also provide additional information about the anomaly scores or the degree of abnormality associated with each data point. Example:

Let’s consider a dataset of network traffic data. We can use the Isolation Forest algorithm from scikit-learn to detect outliers:
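A minimal sketch with scikit-learn’s `IsolationForest` is shown below. The simulated traffic features (bytes and packets per connection), the two injected unusual connections, and the `contamination` value are assumptions for illustration. In scikit-learn, `score_samples` returns lower values for more abnormal points.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# Simulated traffic: 200 ordinary connections (bytes, packets) plus 2 unusual ones.
rng = np.random.default_rng(0)
normal = rng.normal(loc=[500, 50], scale=[50, 5], size=(200, 2))
unusual = np.array([[5000.0, 400.0], [4500.0, 350.0]])
X = np.vstack([normal, unusual])

# contamination is the assumed share of outliers in the data.
model = IsolationForest(n_estimators=100, contamination=0.01, random_state=0)
labels = model.fit_predict(X)    # 1 = inlier, -1 = outlier
scores = model.score_samples(X)  # lower = more abnormal

print(labels[-2:])
print(scores[-2:], np.median(scores))
```

The scores can then be plotted, for example as a histogram or against the observation index, to see how clearly the flagged points separate from the rest.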

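Plotting the anomaly scores allows us to identify the most unusual data points. Note that scikit-learn’s Isolation Forest assigns lower scores to more abnormal points, so the data points with the lowest scores are the potential outliers.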

These are just a few examples of how Python can be used to interpret and visualize outliers. Depending on the specific requirements and characteristics of the data, other visualization techniques and algorithms may be more appropriate. Exploring the Python documentation and community resources will provide further insights into outlier interpretation and visualization in Python.

What are the applications of Outlier Detection?

Outlier detection techniques have applications in various fields, including finance, healthcare, fraud detection, quality control, and more. Here are some examples of how outlier detection can be used:

- Finance: In finance, outlier detection techniques can help identify anomalies in trading data, such as unusual trading activity, fraudulent transactions, and market manipulations. It can also be used to identify abnormal patterns in stock prices or trading volumes, which can help investors make informed decisions.

- Healthcare: In healthcare, outlier detection can be used to identify unusual patient behavior or medical events, such as unexpected adverse reactions to medication, high-risk patients who may require special attention, and anomalies in medical test results.

- Fraud detection: Outlier detection can be used to detect fraudulent activities, such as credit card fraud, insurance fraud, and identity theft. By identifying unusual patterns or behaviors, the system can alert investigators to investigate further.

- Quality control: Outlier detection techniques can help identify defects in manufacturing processes, such as faulty machinery, human error, and other process-related issues. By detecting anomalies, manufacturers can improve the quality of their products and reduce waste.

- Social network analysis: Outlier detection can be used in social network analysis to identify influential nodes or detect fake accounts created to spread false information.

- Image analysis: In image analysis, outlier detection can help identify anomalies in medical images, such as tumors or abnormal growths, or detect unusual features in satellite images or surveillance footage.

- Cybersecurity: Outlier detection can be used to identify anomalies in network traffic, such as unusual patterns of activity, suspicious IP addresses, or attempts to breach security protocols.

Overall, outlier detection techniques can help organizations detect and address anomalies in their data, improve decision-making, and reduce risks.

What are the challenges and future directions of Outlier Detection?

Outlier detection is a complex task that continuously evolves alongside advancements in data analysis techniques and application domains. While significant progress has been made in the field, there are still several challenges to overcome and promising directions for future research.

One of the main challenges is dealing with imbalanced datasets where outliers are rare compared to normal data points. This class imbalance poses difficulties for both model training and evaluation. Additionally, obtaining reliable outlier labels can be subjective or even unavailable, making it challenging to apply supervised learning-based approaches. Future research should focus on developing robust outlier detection techniques capable of handling imbalanced data and exploring methods for obtaining reliable outlier labels.

Real-world data streams are dynamic, and outliers can change over time due to concept drift or the emergence of new types of outliers. Traditional outlier detection methods, designed for stationary data, may struggle to adapt to evolving outliers. Future research should concentrate on developing adaptive and online outlier detection algorithms that can detect and handle concept drift and evolving outliers in dynamic environments.
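To illustrate the idea of an adaptive detector, the following sketch compares each new value only with a sliding window of recent values. This is a deliberately simple, hypothetical example and not an established algorithm from the literature. The stream, the window size of 20, and the threshold of 3 are assumptions.

```python
from collections import deque
from statistics import mean, stdev


def rolling_outliers(stream, window=20, threshold=3.0):
    """Flag indices whose value deviates strongly from the recent window."""
    recent = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(stream):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        recent.append(value)  # the window always moves on, outlier or not
    return flagged


# Hypothetical stream: level around 10, a spike at index 30,
# then a permanent shift to a level around 50 from index 40 onwards.
stream = [10 + i % 2 for i in range(40)] + [50 + i % 2 for i in range(40, 80)]
stream[30] = 30

flagged = rolling_outliers(stream)
print(flagged)  # [30, 40, 41]
```

The detector flags the isolated spike and the first two values after the level shift. Once the window contains enough values from the new level, it stops raising alarms, which is the kind of adaptation to drift described above.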

With the increasing availability of high-dimensional data, traditional outlier detection techniques face challenges in effectively capturing outliers in high-dimensional feature spaces. The curse of dimensionality, sparsity, and the presence of irrelevant features can impact the performance of outlier detection algorithms. Future research should explore dimensionality reduction techniques, feature selection methods, and ensemble-based approaches to address the challenges posed by high-dimensional data.

Outliers often exhibit contextual dependencies and collective behavior. Detecting outliers within a specific context or considering the collective behavior of a group of data points can provide more meaningful insights. Future research should focus on developing outlier detection techniques that incorporate contextual information, dependencies among data points, and collective outlier patterns to enhance the accuracy and interpretability of outlier detection results.

While supervised outlier detection techniques require labeled data, unsupervised and semi-supervised methods can alleviate the need for labeled outliers, making them more practical in many scenarios. Future research should explore novel unsupervised and semi-supervised approaches that leverage the intrinsic structure of the data, utilize clustering techniques, or leverage weak forms of supervision to improve outlier detection performance.

Interpreting and explaining outlier detection results are crucial for building trust and understanding in real-world applications. Future research should focus on developing methods to provide explanations for detected outliers, identify the factors contributing to their outlying behavior, and enable stakeholders to make informed decisions based on the outlier detection results.

In conclusion, outlier detection faces various challenges, including imbalanced data, evolving outliers, high-dimensional data, contextual dependencies, interpretability, and domain-specific requirements. Addressing these challenges and exploring future research directions will lead to more effective and reliable outlier detection techniques capable of handling complex data scenarios and supporting diverse application domains.

This is what you should take with you

- Outlier detection is an important task in data analysis, as outliers can have a significant impact on statistical measures and model performance.

- There are different types of outliers, including point (global), contextual, and collective outliers.

- Several techniques can be used for outlier detection, such as statistical methods, distance-based methods, density-based methods, and machine learning methods.

- The evaluation of outlier detection methods is challenging, as there is often no ground truth available and different evaluation metrics may be used.

- Outlier detection has various applications in different fields, including finance, healthcare, and cybersecurity.

- However, outlier detection also has limitations, such as the potential for false positives and the challenge of identifying outliers in high-dimensional data.

- Overall, outlier detection is a crucial task for identifying unusual observations in data and can provide valuable insights into the underlying data distribution and potential data quality issues.

Other Articles on the Topic of Outlier Detection

Scikit-Learn provides an interesting article about Outlier and Novelty Detection in Python.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.