In the ever-expanding landscape of data-driven technologies, the demand for high-quality, diverse datasets has become paramount. However, real-world data often comes with constraints such as privacy concerns, limited access, or simply insufficient quantity. This is where the innovative realm of synthetic data generation steps in, offering a transformative solution to bridge these gaps.

Synthetic data, artificially created to mimic the characteristics of real-world datasets, has emerged as a game-changer in various industries. From healthcare and finance to autonomous vehicles and machine learning, the ability to generate data that closely resembles authentic information opens new avenues for innovation and development.

This article delves into the dynamic world of synthetic data generation, exploring its benefits, methodologies, and applications across diverse sectors. We unravel the techniques behind creating synthetic datasets, examine their practical implications, and navigate the challenges that come with this groundbreaking approach. Join us on a journey into the future of data science, where synthetic data paves the way for enhanced privacy, improved model training, and accelerated advancements in artificial intelligence.

What is Synthetic Data?

Synthetic data refers to artificially generated datasets designed to replicate the statistical properties and patterns found in real-world data. Unlike actual data collected from observations or experiments, synthetic data is crafted using algorithms and models. This imitation aims to capture the complexity and diversity of authentic datasets, serving as a powerful tool in scenarios where obtaining or utilizing real data proves challenging.

The process involves generating data points that share similar characteristics with the original dataset but do not represent actual observations. Various techniques, including Generative Adversarial Networks (GANs), rule-based methods, and statistical models, are employed to produce synthetic data that mirrors the features of the genuine counterpart.

Synthetic data finds applications across diverse domains, including machine learning, where it aids in training and validating models without compromising sensitive information. Its role extends to addressing privacy concerns, overcoming data scarcity, and facilitating innovation in scenarios where access to authentic data is restricted.

In essence, synthetic data acts as a virtual counterpart, offering a valuable resource for researchers, developers, and data scientists to navigate challenges associated with limited data availability, privacy preservation, and algorithmic exploration.

What are the benefits of Synthetic Data?

Synthetic data emerges as a transformative ally in the realm of data science, offering a plethora of advantages that address critical challenges faced in various industries. In situations where authentic data is limited or hard to obtain, synthetic data steps in to fill the void. This proves especially valuable during the early stages of model development or in niche domains where real-world data is scarce.

One of its key advantages is privacy preservation, which is crucial in fields like healthcare and finance, where safeguarding sensitive information is paramount. Because synthetic records do not correspond to real individuals, researchers and analysts can simulate realistic scenarios with a much lower risk of exposing personal data. Synthetic datasets also provide a controlled environment for rigorous testing and development of machine learning algorithms. Researchers can manipulate variables, introduce specific scenarios, and assess algorithmic responses, accelerating the innovation cycle.

Moreover, synthetic data allows for the customization of datasets to meet specific requirements. This includes generating data with varying characteristics, distribution shifts, or specific anomalies, enabling researchers to test the resilience of algorithms in diverse scenarios. In situations where collecting large amounts of real data is resource-intensive, synthetic data offers a more efficient alternative. It reduces the need for extensive data collection efforts and associated costs while still providing valuable insights.

Synthetic data proves invaluable in fields like autonomous vehicles, where exposure to a wide range of driving conditions is crucial for training robust models. It also addresses ethical concerns related to the use of real data, especially when dealing with sensitive or proprietary information, which supports responsible and ethical experimentation in various fields. In essence, the benefits of synthetic data extend far beyond conventional data limitations, paving the way for innovation, privacy-conscious research, and accelerated advancements in the data science landscape.

Which methods are used for Synthetic Data Generation?

The art of synthetic data generation involves deploying sophisticated methods to replicate the intricacies of real-world datasets. At the forefront of these techniques are Generative Adversarial Networks (GANs), a cutting-edge approach where a generator and discriminator engage in a dynamic interplay. The generator creates synthetic data, aiming to deceive the discriminator, which in turn evolves to distinguish between real and synthetic samples. This adversarial process refines the synthetic data, continually enhancing its resemblance to authentic datasets.
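This interplay is often written as a minimax objective. As a sketch in the standard notation of the GAN literature (the symbols are introduced here for illustration and are not used elsewhere in this article), let $G$ be the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of the real data, and $p_z$ a noise distribution:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make both terms large by scoring real samples close to 1 and generated samples close to 0, while the generator tries to make the second term small by producing samples that the discriminator scores as real.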

Complementing GANs are Variational Autoencoders (VAEs), which use a probabilistic model to capture the underlying structure of the input data. An encoder maps each input to a distribution over a lower-dimensional latent space, and a decoder reconstructs data from points in that space. New synthetic data points are generated by sampling from the latent distribution and passing the samples through the decoder. This sampling step introduces variability, which is crucial for simulating the diversity observed in real-world datasets.

Rule-based approaches represent another facet of synthetic data generation. By incorporating predefined rules and constraints, these methods emulate specific patterns or characteristics present in authentic datasets. While less flexible than machine learning-based methods, rule-based approaches offer precision in controlling the generated data’s properties.
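A rule-based generator can be very small. The following is a minimal sketch using only the Python standard library; the transaction fields, the spending limits, and the review rule are all hypothetical and chosen purely for illustration:

```python
import random

rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical rules: spending limits per account type (invented values).
SPENDING_LIMITS = {"basic": 500.0, "premium": 5000.0}


def make_transaction(rng):
    """Build one synthetic transaction that satisfies the hand-written rules."""
    account_type = rng.choice(list(SPENDING_LIMITS))
    limit = SPENDING_LIMITS[account_type]
    age = rng.randint(18, 90)                   # rule: customers are adults
    amount = round(rng.uniform(1.0, limit), 2)  # rule: 1 <= amount <= limit
    return {
        "account_type": account_type,
        "customer_age": age,
        "amount": amount,
        "needs_review": amount > 0.8 * limit,   # rule: flag large amounts
    }


transactions = [make_transaction(rng) for _ in range(1000)]
print(transactions[0])
```

Every record satisfies the rules by construction, which is the precision mentioned above. The price is that any pattern not written down as a rule, such as a correlation between age and spending, will be absent from the data.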

Statistical methods such as bootstrapping and Monte Carlo simulation are a further option. They use statistical properties derived from existing datasets to generate new samples. Bootstrapping resamples the original data with replacement, so it preserves the observed values and their variability. Monte Carlo simulation draws random samples from a specified probability model, so it can also produce values that never occurred in the original data.
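The difference between the two statistical approaches is easy to see in code. This is a minimal sketch with the standard library only; the observed values are made up, and the choice of a normal distribution for the Monte Carlo step is an assumption, not a recommendation:

```python
import random
import statistics

rng = random.Random(1)  # fixed seed for reproducibility

# Hypothetical "real" sample; the numbers are invented for illustration.
observed = [1.4, 1.3, 1.5, 4.7, 4.5, 4.9, 6.0, 5.1, 5.9, 5.6]

# Bootstrapping: resample the observed values with replacement.
bootstrap_sample = rng.choices(observed, k=len(observed))

# Monte Carlo: fit a simple parametric model (assumed normal here)
# and draw new values from it.
mu = statistics.mean(observed)
sigma = statistics.stdev(observed)
monte_carlo_sample = [rng.gauss(mu, sigma) for _ in range(1000)]

print(bootstrap_sample)
print(statistics.mean(monte_carlo_sample), statistics.stdev(monte_carlo_sample))
```

The bootstrap sample contains only values that already exist in `observed`, while the Monte Carlo sample contains new values whose quality depends entirely on how well the assumed model fits the data.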

These diverse methods cater to varying data generation requirements and challenges. GANs and VAEs excel in capturing complex patterns and distributions, while rule-based and statistical approaches offer explicit control and customization. The synergy of these methods fuels the dynamic field of synthetic data generation, enabling researchers and data scientists to create datasets that mirror the richness and complexity of the real world.

What are the applications of Synthetic Data Generation in different industries?

The applications of synthetic data generation reverberate across diverse industries, reshaping conventional approaches and unlocking new possibilities.

Healthcare:

In the realm of healthcare, synthetic data plays a pivotal role in addressing privacy concerns associated with patient records. It facilitates the development and testing of medical algorithms without compromising sensitive information. From training diagnostic models to advancing personalized medicine, synthetic healthcare data emerges as a cornerstone in transformative medical research.

Finance:

The financial sector embraces synthetic data to fortify risk management and fraud detection strategies. Synthetic financial datasets simulate diverse market scenarios, allowing institutions to refine their models and algorithms. This proactive approach enhances the robustness of financial systems, enabling better decision-making and risk mitigation.

Autonomous Vehicles:

Synthetic data proves indispensable in training algorithms for autonomous vehicles. Simulated driving scenarios, generated through synthetic datasets, provide a safe and controlled environment for testing and refining vehicle systems. This accelerates the development of reliable and adaptive autonomous driving technologies.

eCommerce:

In the eCommerce landscape, synthetic data aids in crafting personalized customer experiences. By generating synthetic customer profiles and transaction data, businesses can optimize marketing strategies, enhance recommendation systems, and tailor their services to individual preferences. This data-driven approach boosts customer engagement and satisfaction.

Manufacturing:

The manufacturing industry leverages synthetic data to streamline and optimize production processes. Simulating various manufacturing scenarios helps identify potential bottlenecks, optimize supply chain logistics, and enhance overall operational efficiency. This data-driven insight enables manufacturers to make informed decisions for process improvement.

Cybersecurity:

Synthetic data proves instrumental in fortifying cybersecurity measures. By simulating cyber threats and attacks, organizations can train and test their security systems effectively. Synthetic datasets create realistic scenarios, allowing cybersecurity professionals to proactively identify vulnerabilities and enhance the resilience of digital infrastructures.

These applications represent a fraction of the expansive impact of synthetic data across industries. As technology advances, the versatility and adaptability of synthetic datasets continue to redefine the boundaries of innovation, offering tailored solutions to industry-specific challenges.

What are the challenges in Synthetic Data Generation?

While synthetic data generation opens doors to innovation, it also encounters a spectrum of challenges and limitations that necessitate careful consideration.

- Complexity and Realism: Crafting synthetic datasets that authentically capture the complexity of real-world scenarios remains a formidable challenge. Models may struggle to replicate intricate patterns and dependencies present in diverse datasets, leading to a potential gap between synthetic and authentic data.

- Biases and Generalization: Synthetic data, despite sophisticated algorithms, can inadvertently introduce biases or fail to generalize well across all real-world scenarios. Ensuring that synthetic datasets accurately represent the diversity of authentic data remains a persistent challenge, impacting the reliability of models trained on such data.

- Evaluation Metrics: Establishing comprehensive evaluation metrics for synthetic data quality proves challenging. Quantifying how well synthetic datasets emulate the statistical properties of real data requires nuanced metrics, and the absence of standardized measures can hinder effective assessment.

- Domain-Specific Challenges: Certain domains present unique challenges for synthetic data generation. Industries such as healthcare demand meticulous attention to privacy preservation, making it challenging to balance the creation of realistic data with the imperative to protect sensitive information.

- Limited Real-World Variability: Synthetic datasets may struggle to capture the full variability of real-world data. This limitation can affect the performance of models in unpredictable scenarios not adequately represented during the synthetic data generation process.

- Ethical Considerations: The use of synthetic data raises ethical questions, especially when applied in sensitive domains. Balancing the need for realistic data with ethical considerations, such as the potential impact on marginalized groups, requires careful scrutiny and adherence to ethical guidelines.

- Resource Intensiveness: Certain methods of synthetic data generation, particularly those leveraging advanced machine learning models, can be resource-intensive. Training complex models may require significant computational power and time, posing challenges for organizations with limited resources.

- Niche Domain Challenges: In specialized domains, creating accurate and representative synthetic datasets becomes more challenging. Niche industries may lack the extensive real-world data needed for effective synthetic data generation, limiting its applicability.

Acknowledging these challenges is crucial for practitioners and researchers working with synthetic data. By addressing these limitations, the field can evolve towards more robust methodologies and practices, fostering greater trust in the application of synthetic data across various domains.
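The evaluation challenge in particular can be made concrete. One common, though by no means sufficient, check is to compare the distribution of a single feature in the real and synthetic data. The sketch below computes the two-sample Kolmogorov-Smirnov statistic with the standard library; the "real" and "synthetic" samples are simulated stand-ins, not actual datasets:

```python
import bisect
import random


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic.

    Returns the largest absolute gap between the empirical CDFs of a and b:
    0.0 means identical empirical distributions, 1.0 means no overlap.
    """
    a_sorted, b_sorted = sorted(a), sorted(b)
    gap = 0.0
    for x in a_sorted + b_sorted:
        cdf_a = bisect.bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect.bisect_right(b_sorted, x) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


rng = random.Random(7)
real = [rng.gauss(5.0, 1.0) for _ in range(500)]        # stand-in for real data
good_synth = [rng.gauss(5.0, 1.0) for _ in range(500)]  # same distribution
bad_synth = [rng.gauss(6.0, 2.0) for _ in range(500)]   # shifted and wider

print("good:", ks_statistic(real, good_synth))
print("bad: ", ks_statistic(real, bad_synth))
```

A per-feature check like this says nothing about relationships between features or about privacy, which is part of why no single standardized measure exists.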

Which tools and platforms can be used for Synthetic Data Generation?

Within the expansive landscape of synthetic data generation, a diverse array of tools and platforms has emerged, addressing the nuanced needs of data scientists and researchers engaged in crafting realistic and varied datasets for a multitude of applications.

In the realm of dedicated synthetic data platforms, offerings such as Syntho and Mostly AI provide comprehensive end-to-end solutions. These platforms stand out for incorporating advanced algorithms and robust privacy-preserving mechanisms, ensuring the generation of synthetic datasets that not only mirror real-world scenarios but also adhere to stringent privacy standards.

Python’s Faker library is a popular choice for creating realistic yet fictitious values such as names, addresses, and dates. Faker does not learn from an existing dataset, so its output does not reproduce the statistical properties of real data. It is best suited to scenarios such as populating test databases or replacing personal identifiers, where it offers a straightforward and accessible approach to synthetic data generation.

For practitioners delving into the realm of deep learning, frameworks like TensorFlow and Keras provide a robust foundation for the implementation of Generative Adversarial Networks (GANs). GANs, renowned for their capability to capture intricate data distributions, open avenues for sophisticated synthetic data generation that aligns closely with the complexities of real-world datasets.

PyTorch, another powerful deep learning framework, is often used for probabilistic models like Variational Autoencoders (VAEs), although GANs and VAEs can be implemented in either framework. Its flexibility in defining encoders and decoders enables the creation of synthetic datasets with nuanced structures and relationships.

Data augmentation libraries further enrich the synthetic data generation toolkit. imgaug, tailored for images, and nlpaug, specializing in text, play pivotal roles in introducing variations to datasets, expanding their scope, and enhancing the robustness of machine learning models.

Privacy-focused tools, such as Mimic and OpSynth, prioritize the protection of sensitive information. These tools enable the creation of synthetic datasets that mirror real-world scenarios while safeguarding privacy, making them particularly valuable in sectors like healthcare and finance.

DataRobot Paxata represents a seamless integration of data preparation and synthetic data generation. By combining visual data profiling with automated data preparation, this platform streamlines the synthetic data generation process, catering to users seeking efficiency and user-friendly workflows.

H2O.ai offers solutions that emphasize simplicity and efficiency in creating synthetic datasets tailored for machine learning and AI applications. The platform’s approach ensures that practitioners can easily generate diverse synthetic datasets that align with the unique requirements of their projects.

The diverse array of available tools ensures that practitioners can tailor their approach to meet the specific use cases, data types, and privacy considerations inherent to their projects. This richness in the synthetic data generation toolkit enables the creation of datasets that not only meet technical demands but also align closely with ethical and privacy standards, fostering trust in the application of synthetic data across diverse domains.

How can you use Synthetic Data Generation in Python?

In Python, the versatility of synthetic data generation comes to life through various libraries, offering functionalities tailored to different requirements. Let’s delve into a practical example using a public dataset and demonstrate the steps involved:

1. Data Exploration:

- Choose a Public Dataset:

Select a public dataset relevant to your domain. For instance, consider the “Iris” dataset, a classic dataset in machine learning, available in scikit-learn. This dataset contains measurements of sepal length, sepal width, petal length, and petal width for three different species of iris flowers.

2. Data Generation with Faker:

- Install Faker Library:

Begin by installing the Faker library if not already installed. Use the following command:

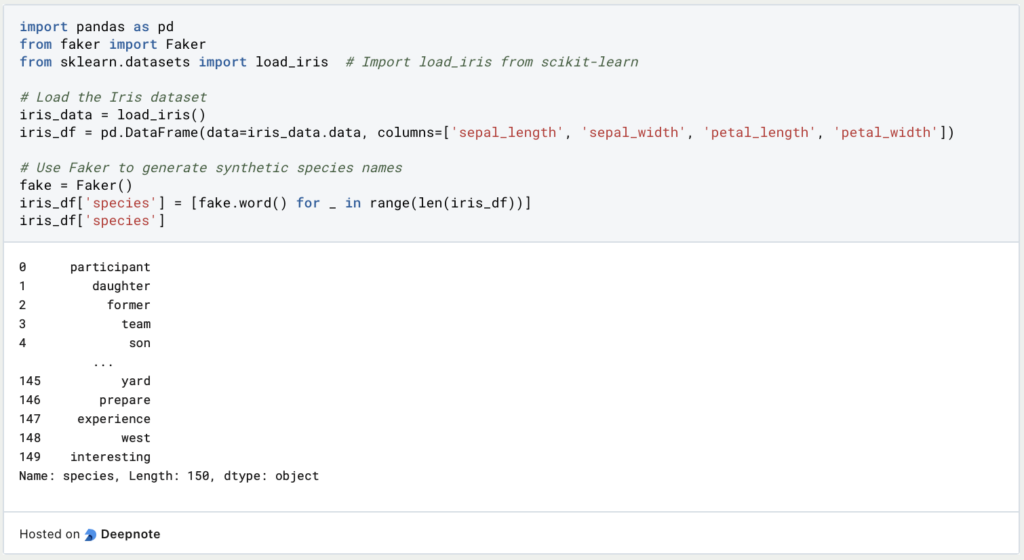

- Generate Synthetic Data:

Import the necessary libraries and create synthetic data using Faker. In this example, we’ll simulate additional data for the “Iris” dataset, generating fake names for the flowers’ species:

3. Data Analysis:

- Inspect the Synthetic Data:

Compare the synthetic data with the original dataset, for example through summary statistics or plots of each feature, to see what variation has been introduced. This step checks whether the generated data is consistent with the characteristics of the real-world dataset.

4. Data Integration:

- Combine Real and Synthetic Data:

Merge the real and synthetic datasets to create a comprehensive dataset for training machine learning models. This integration enhances dataset diversity, potentially improving model generalization.
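Steps 3 and 4 can be sketched together. Since Faker does not produce numeric measurements, this sketch swaps in a simple statistical generator: it draws synthetic rows per species from independent normal distributions fitted to each feature. That sampling scheme is an assumption made for illustration, it ignores correlations between features, and it requires NumPy, pandas, and scikit-learn:

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris

rng = np.random.default_rng(0)  # fixed seed for reproducibility

real = load_iris(as_frame=True).frame  # four measurements plus "target"
feature_cols = [c for c in real.columns if c != "target"]

# Assumption: per species, each feature is sampled from its own normal
# distribution, using the mean and standard deviation of the real data.
parts = []
for target, group in real.groupby("target"):
    stats = group[feature_cols].agg(["mean", "std"])
    values = rng.normal(
        stats.loc["mean"].to_numpy(),
        stats.loc["std"].to_numpy(),
        size=(50, len(feature_cols)),
    )
    block = pd.DataFrame(values, columns=feature_cols)
    block["target"] = target
    parts.append(block)
synthetic = pd.concat(parts, ignore_index=True)

# Step 3: inspect real and synthetic summary statistics side by side.
comparison = pd.concat(
    {
        "real": real[feature_cols].describe(),
        "synthetic": synthetic[feature_cols].describe(),
    },
    axis=1,
)
print(comparison.loc[["mean", "std"]])

# Step 4: combine both sources and keep track of where each row came from.
combined = pd.concat(
    [real.assign(source="real"), synthetic.assign(source="synthetic")],
    ignore_index=True,
)
print(combined["source"].value_counts())
```

Keeping a `source` column makes it possible to evaluate a model on real rows only, which avoids judging it on data produced by the same generator it was trained on.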

This example showcases the seamless integration of synthetic data generation using the Faker library with a well-known public dataset. While the Iris dataset is relatively simple, the principles apply to more complex datasets across various domains, illustrating the practical implementation of synthetic data in Python.

What you should take away

- The synthetic data generation landscape offers a diverse toolkit, ranging from dedicated platforms to versatile libraries, catering to the varied needs of data scientists and researchers.

- Privacy-focused tools, including Mimic and OpSynth, exemplify a commitment to safeguarding sensitive information, making synthetic data generation a viable solution for privacy-conscious applications.

- The integration of deep learning frameworks like TensorFlow, Keras, and PyTorch enables the implementation of sophisticated techniques such as GANs and VAEs, enhancing the capacity to replicate intricate real-world data distributions.

- Synthetic data generation finds applications across diverse domains, from healthcare to finance, showcasing its versatility in creating datasets that mirror real-world scenarios.

- Platforms like DataRobot Paxata and solutions from H2O.ai streamline the process by integrating data preparation with synthetic data generation, emphasizing efficiency and user-friendly workflows.

- The toolkit allows practitioners to strike a balance between creating realistic datasets and incorporating flexibility, ensuring the synthetic data aligns with the complexities and nuances of real-world datasets.

- Synthetic data generation contributes to ethical data practices by providing alternatives for scenarios where real data may pose privacy concerns, promoting responsible and transparent use of data.

Other Articles on the Topic of Synthetic Data Generation

Here you can find an article about Synthetic Data Generation in Microsoft.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.