Genetic algorithms are an optimization technique inspired by natural selection and are used in fields such as engineering, finance, and medicine. At their core, they mimic evolution by generating a population of candidate solutions to a problem and iteratively refining it over multiple generations. This lets them find good, often near-optimal solutions to complex problems that are difficult or impractical to solve with traditional optimization techniques.

In this article, we will explore the principles of genetic algorithms, their applications, and their strengths and weaknesses. We will also discuss how to choose the parameters of a genetic algorithm and how to adapt the technique to different problem domains. By the end of this article, readers will have a thorough understanding of genetic algorithms and how to apply them to solve real-world optimization problems.

How do genetic algorithms model the evolutionary process?

The evolutionary process in genetic algorithms can be broken down into several key steps. First, the algorithm generates an initial population of candidate solutions, usually at random. Each solution is represented as a chromosome, a string of genes that encode different aspects of the solution.

The algorithm then selects a subset of the population to serve as parents for the next generation, based on the fitness of each solution as determined by a fitness function. The parents are combined through a process called crossover, in which portions of their chromosomes are swapped to create new offspring. Mutation is then applied: random changes are made to the offspring’s chromosomes to introduce new genetic material and reduce the risk of the algorithm getting stuck in local optima.

The fitness of the offspring is evaluated using the fitness function, and the best solutions are selected to form the next generation of the population. This process continues for a fixed number of generations or until a stopping criterion is met, such as reaching a certain fitness threshold or running out of time.
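The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the names `one_max` and `run_ga`, the bit-string encoding, the toy fitness function, and all default parameter values are hypothetical choices made for this example. It also simplifies survivor selection to "replace the whole generation but carry over the single best individual" (elitism).

```python
import random

def one_max(chromosome):
    """Hypothetical toy fitness function: the number of 1-bits."""
    return sum(chromosome)

def run_ga(fitness, n_genes=20, pop_size=30, generations=50,
           crossover_rate=0.9, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)  # seeded so that runs are repeatable

    # 1. Initialization: a population of random bit-string chromosomes.
    population = [[rng.randint(0, 1) for _ in range(n_genes)]
                  for _ in range(pop_size)]

    for _ in range(generations):
        # Elitism (assumption): copy the best individual unchanged,
        # so the best fitness never gets worse between generations.
        next_gen = [max(population, key=fitness)[:]]

        while len(next_gen) < pop_size:
            # 2. Selection: two binary tournaments pick the parents.
            p1 = max(rng.sample(population, 2), key=fitness)
            p2 = max(rng.sample(population, 2), key=fitness)

            # 3. Crossover: swap tails at a random cut point.
            if rng.random() < crossover_rate:
                cut = rng.randint(1, n_genes - 1)
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]

            # 4. Mutation: flip each gene with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g
                     for g in child]
            next_gen.append(child)

        population = next_gen

        # 5. Stopping criterion: the known maximum fitness was reached.
        if fitness(max(population, key=fitness)) == n_genes:
            break

    return max(population, key=fitness)

best = run_ga(one_max)
print(best, one_max(best))
```

For a real problem, only the chromosome encoding and the fitness function would need to change; the loop itself stays the same.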

The evolutionary process in genetic algorithms is a powerful way to search the space of potential solutions to a problem and converge on optimal or near-optimal solutions over time. However, the success of the algorithm depends on several factors, such as the choice of the fitness function, the size of the population, and the mutation and crossover rates.

What are the different variations of genetic algorithms?

There are several variations of genetic algorithms that have been developed to address specific types of problems and to improve the performance of the algorithm. Here are some of the most common variations:

- Niching: Niching is a technique used to maintain diversity within the population by encouraging the development of multiple solutions to a problem. This is particularly useful in problems where there are multiple optimal solutions, or where the population tends to converge too quickly.

- Multi-objective optimization: Multi-objective optimization involves optimizing multiple objectives simultaneously, such as maximizing performance while minimizing cost. This variation of genetic algorithms uses techniques such as Pareto optimality and crowding to generate a set of solutions that are optimal in different ways.

- Co-evolution: Co-evolution involves evolving two or more populations simultaneously, where each population interacts with the other populations. This is useful for problems where the fitness of one population depends on the behavior of another population, such as in predator-prey relationships or in game theory.

- Hybridization: Hybridization involves combining genetic algorithms with other optimization techniques, such as simulated annealing or gradient descent. This can improve the performance of the algorithm by taking advantage of the strengths of different optimization techniques.

- Cultural algorithms: Cultural algorithms involve incorporating cultural knowledge and learning into the genetic algorithm. This can include knowledge about the problem domain, as well as learning from the behavior of the population over time.

These variations of genetic algorithms are just a few examples of the many techniques that have been developed to improve the performance of the algorithm and address specific types of problems. By combining these techniques and developing new ones, researchers continue to push the boundaries of what genetic algorithms can achieve.
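To make the idea of Pareto optimality from the multi-objective variation more concrete, here is a small sketch. The function names `dominates` and `pareto_front` and the sample data are assumptions for illustration. All objectives are treated as values to minimize; an objective that should be maximized, such as performance, can be negated first.

```python
def dominates(a, b):
    """True if a is no worse than b on every objective and strictly
    better on at least one (all objectives are minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))

def pareto_front(points):
    """Return the points that no other point dominates."""
    return [p for p in points
            if not any(dominates(q, p) for q in points)]

# Hypothetical (cost, error) pairs for five candidate solutions.
candidates = [(1, 5), (2, 3), (4, 1), (3, 4), (5, 5)]
print(pareto_front(candidates))  # [(1, 5), (2, 3), (4, 1)]
```

Each point on the front represents a different trade-off: none of them can be improved on one objective without getting worse on the other.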

Which parameters can be adapted to improve the result?

Genetic algorithms have several parameters that can be adjusted to optimize their performance. These parameters include the population size, the mutation rate, the crossover rate, the selection method, and the termination criterion. Adjusting these parameters can have a significant impact on the performance and efficiency of the algorithm.

The population size refers to the number of potential solutions in each generation. A larger population size increases the diversity of the solutions and the likelihood of finding the optimal solution but also increases the computational cost of evaluating each solution.

The mutation rate determines the probability that a gene in a chromosome will be randomly altered. A higher mutation rate can increase the diversity of the population and prevent the algorithm from getting stuck in local optima, but too high of a mutation rate can prevent the algorithm from converging on a solution.
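As a rough sketch of how the mutation rate acts on a single chromosome, the following bit-flip mutation assumes a binary encoding. The function name `bit_flip_mutation` and its `rng` parameter are hypothetical names introduced for this example.

```python
import random

def bit_flip_mutation(chromosome, mutation_rate, rng=random):
    """Flip each gene independently with probability mutation_rate."""
    return [1 - gene if rng.random() < mutation_rate else gene
            for gene in chromosome]

parent = [0, 1, 1, 0, 1, 0, 0, 1]
print(bit_flip_mutation(parent, mutation_rate=0.1))
```

With a rate of 0 the chromosome is returned unchanged, and with a rate of 1 every bit is flipped. Useful values usually lie close to the lower end, so that most of the parent's information survives.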

The crossover rate determines the probability that two parent chromosomes are combined through crossover instead of being copied unchanged. A higher crossover rate recombines existing genetic material more aggressively, which can speed up convergence, but it can also disrupt good solutions or contribute to premature convergence on suboptimal ones.
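One common way to apply a crossover rate is single-point crossover, sketched below. The function name `single_point_crossover` and the `rng` parameter are assumptions for this example, and other operators (two-point, uniform) follow the same pattern.

```python
import random

def single_point_crossover(parent_a, parent_b, crossover_rate, rng=random):
    """With probability crossover_rate, swap the tails of both parents
    at a random cut point; otherwise return unchanged copies."""
    if rng.random() >= crossover_rate or len(parent_a) < 2:
        return parent_a[:], parent_b[:]
    cut = rng.randint(1, len(parent_a) - 1)  # never cut at the very ends
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b

a, b = [0] * 6, [1] * 6
print(single_point_crossover(a, b, crossover_rate=0.9))
```

Note that crossover only rearranges genes that already exist in the parents. New gene values enter the population through mutation.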

The selection method determines how parents are chosen for the next generation. Popular methods include tournament selection, roulette wheel selection, and rank-based selection. Each method has its advantages and disadvantages, and the choice depends on the problem domain and the desired balance between exploration and exploitation.
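Two of the selection methods named above can be sketched as follows. The function names and the tournament size `k` are assumptions for this example. The roulette wheel variant shown here additionally assumes that all fitness values are non-negative and that at least one is positive.

```python
import random

def tournament_selection(population, fitness, k=3, rng=random):
    """Draw k distinct individuals at random and return the fittest."""
    contenders = rng.sample(population, k)
    return max(contenders, key=fitness)

def roulette_wheel_selection(population, fitness, rng=random):
    """Pick one individual with probability proportional to its fitness."""
    weights = [fitness(individual) for individual in population]
    return rng.choices(population, weights=weights, k=1)[0]

population = [[0, 0], [1, 0], [1, 1]]
print(tournament_selection(population, fitness=sum))
print(roulette_wheel_selection(population, fitness=sum))
```

A larger tournament size `k` increases selection pressure (more exploitation), while a smaller one gives weaker individuals a better chance of being chosen (more exploration).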

The termination criterion determines when the algorithm should stop running. Common termination criteria include reaching a certain fitness threshold, reaching a fixed number of generations, or running out of computational resources. The choice of termination criterion depends on the problem domain and the desired level of precision.
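The three termination criteria mentioned above can be combined in a single check. This is only a sketch: the function name `should_stop`, its parameters, and the default of 200 generations are hypothetical, and fitness is assumed to be maximized.

```python
import time

def should_stop(generation, best_fitness, start_time,
                max_generations=200, target_fitness=None,
                time_budget_s=None):
    """Return True as soon as any configured criterion is met."""
    if generation >= max_generations:
        return True  # fixed number of generations reached
    if target_fitness is not None and best_fitness >= target_fitness:
        return True  # fitness threshold reached
    if (time_budget_s is not None
            and time.monotonic() - start_time >= time_budget_s):
        return True  # time budget used up
    return False

start = time.monotonic()
print(should_stop(generation=5, best_fitness=3.0, start_time=start,
                  target_fitness=10.0, time_budget_s=60))
```

Inside a genetic algorithm, such a check would be called once per generation with the current best fitness.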

There is no one-size-fits-all setting for these parameters, and the optimal values vary with the specific problem being solved. Experimentation and careful tuning are therefore often necessary to achieve the best results.

Which applications use these algorithms?

Genetic algorithms have found applications in a wide range of fields, from engineering to economics, and from medicine to game design. Some of the most common applications of genetic algorithms include:

- Optimization problems: Genetic algorithms are often used to optimize complex systems that have many variables and constraints. These systems can include engineering designs, financial portfolios, or logistics planning.

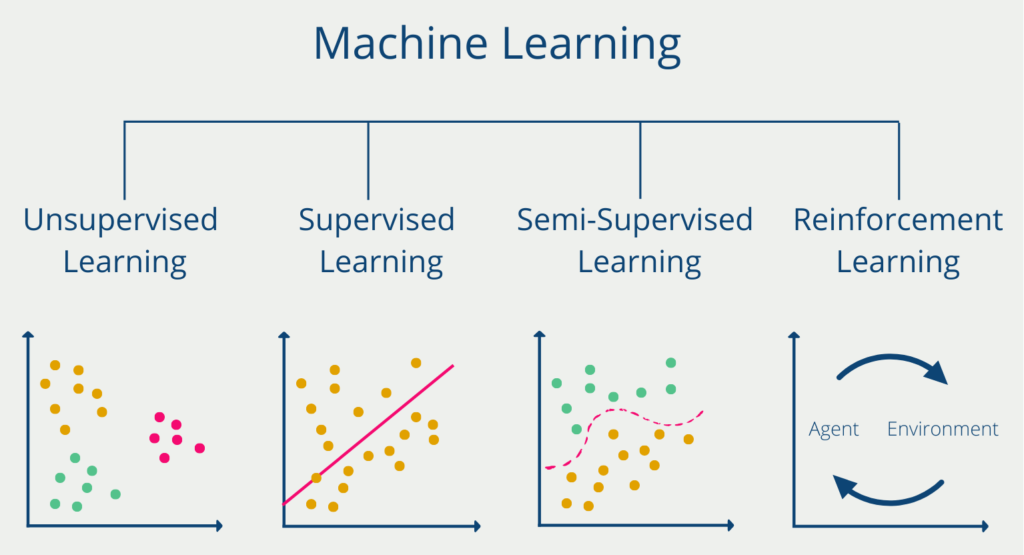

- Machine learning: Genetic algorithms can be used to train machine learning models and to perform feature selection, which involves selecting the most relevant features from a large set of possible features.

- Robotics: Genetic algorithms can be used to optimize the control and behavior of robots, and to generate new designs for robotic systems.

- Image and signal processing: These algorithms can be used to enhance and analyze images and signals, such as in image segmentation, noise reduction, and feature extraction.

- Game design: Genetic algorithms can be used to generate new game content, such as levels, characters, and puzzles.

- Bioinformatics: Genetic algorithms can be used to analyze biological data, such as DNA sequences, and to identify patterns and relationships between different data sets.

- Marketing: Genetic algorithms can be used to optimize marketing strategies and to analyze consumer behavior, such as in market segmentation and target audience selection.

These are just a few examples of the many applications of genetic algorithms. As the technology continues to develop, new and innovative applications are likely to emerge in a variety of fields.

What are the advantages and disadvantages of these algorithms?

Genetic algorithms are a powerful optimization technique that has been successfully applied to a wide range of problems in various domains. Like any other optimization technique, genetic algorithms have their own set of advantages and disadvantages:

Advantages:

- Global search: The algorithms explore the search space broadly and can find globally optimal or near-optimal solutions to complex optimization problems, even when many variables and constraints are involved, although this is not guaranteed.

- Adaptability: Genetic algorithms are adaptable to a wide range of problem domains and can handle both continuous and discrete variables.

- Stochastic, population-based search: They rely on randomness and maintain many candidate solutions at once, which lets them explore several regions of the search space in the same run and makes them less likely to get stuck in local optima.

- Parallel processing: The method is well-suited to parallel processing, which can speed up the optimization process.

- No requirement for a gradient: Unlike gradient-based optimization techniques, genetic algorithms do not require the gradient of the function being optimized, making them suitable for non-differentiable functions.

Disadvantages:

- Slow convergence: Genetic algorithms can be slow to converge to an optimal solution, particularly for complex problems with a large number of variables.

- Premature convergence: The algorithms can sometimes converge too quickly to a suboptimal solution, particularly if the population size is too small or the mutation rate is too low.

- Parameter tuning: Genetic algorithms require careful tuning of parameters, such as population size, crossover rate, and mutation rate, in order to achieve good performance.

- Limited understanding of solution space: They only optimize what the chosen encoding and fitness function express. A solution that is optimal within the encoded search space may therefore still not be the best solution for the actual problem domain.

- Lack of guarantees: Genetic algorithms do not provide guarantees of finding an optimal solution, and the quality of the solution found depends on the quality of the initial population and the optimization parameters.

Key takeaways

- Genetic algorithms are a powerful optimization technique used across various domains.

- They can find globally optimal or near-optimal solutions, without a guarantee of doing so, and can be adapted to different problem domains.

- They do not require the gradient of the function being optimized, making them suitable for problems where other techniques may not be effective or feasible.

- However, they have some limitations, such as slow convergence, premature convergence, and the need for careful tuning of parameters.

- Despite these limitations, genetic algorithms remain a popular choice for optimization problems.

- With ongoing advancements in computer hardware and algorithms, genetic algorithms are likely to remain an important tool for solving complex optimization problems in the future.

Other Articles on the Topic of Genetic Algorithms

This is an interesting paper on how genetic algorithms are used to find new alloys.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.