Singular value decomposition (SVD) is a mathematical technique that has become an essential tool for data analysis in various fields, including statistics, computer science, and engineering. It is used to reduce the dimensionality of large datasets, extract important features, and uncover hidden patterns in the data.

In this article, we will explore what SVD is, how it works, and some of its key applications.

What is the Singular Value Decomposition?

The Singular Value Decomposition is a powerful matrix factorization technique that provides valuable insights into the underlying structure and properties of a matrix. It decomposes a matrix into three separate matrices: the left singular vectors, singular values, and right singular vectors. Together, these components reveal important aspects of the original matrix and offer various applications in data analysis and beyond.

The left and right singular vectors obtained from the SVD represent the directions of maximum variation in the data. These vectors form an orthonormal basis that captures the relationship between the rows and columns of the matrix, respectively. They help uncover the fundamental patterns and structures present in the data, making them essential for tasks such as dimensionality reduction, feature extraction, and data visualization.

The singular values, on the other hand, indicate the importance or significance of each direction of variation. They are arranged in descending order of magnitude, with the largest values corresponding to the most influential directions. By selecting a subset of the largest singular values and their associated singular vectors, we can effectively reduce the dimensionality of the data while preserving the most important information. This dimensionality reduction technique is widely used in applications such as data compression, noise reduction, and feature selection.

What are the components of the Singular Value Decomposition?

In Singular Value Decomposition, the decomposition of a matrix into its constituent components provides valuable insights into the underlying structure of the data. The SVD comprises three key components: the left singular vectors, the singular values, and the right singular vectors.

The left singular vectors represent the directions in the input space that are most important for explaining the variance in the data. These vectors form an orthonormal basis, providing a new coordinate system to represent the data.

The singular values quantify the importance of each left singular vector. They indicate the amount of variance in the data that can be explained by each vector. The singular values are arranged in descending order, allowing for the identification of the most significant components.

The right singular vectors represent the directions in the output space that are most important for explaining the variance in the transformed data. They provide insights into the relationships between the original features and the principal components.

Understanding these components of SVD is crucial for extracting meaningful information from the data. By analyzing the left singular vectors, singular values, and right singular vectors, we can identify dominant patterns, reduce dimensionality, and gain deeper insights into the underlying structure of the data.

How does SVD work?

The Singular Value Decomposition is a matrix factorization technique that decomposes a matrix into three separate matrices: U, Σ, and V^T. These matrices represent the key components of the original matrix and provide valuable insights into its structure and properties. Understanding how SVD works is essential for effectively utilizing this powerful algorithm in various applications.

The algorithm can be summarized as follows:

- Given an input matrix A of size m x n, SVD finds the factorization A = UΣV^T, where:

- U is an m x m orthogonal matrix containing the left singular vectors.

- Σ is an m x n diagonal matrix containing the singular values.

- V^T is the transpose of an n x n orthogonal matrix containing the right singular vectors.

- The singular values in Σ are sorted in descending order, indicating their significance in representing the data.

- The singular vectors in U and V^T are orthonormal, meaning they are perpendicular to each other and have unit norm. They represent the directions or axes along which the original matrix A is stretched or transformed.

- The singular values in Σ provide information about the magnitude or importance of each singular vector. Larger singular values indicate more significant contributions to the original matrix.

- By selecting a subset of the most significant singular values and corresponding singular vectors, it is possible to approximate the original matrix A with reduced dimensions. This is useful for dimensionality reduction and data compression.

- SVD can be used to solve linear systems of equations, perform matrix inversion, and compute matrix pseudoinverses.

Implementing SVD in Python can be done using various libraries such as NumPy or SciPy. These libraries provide efficient implementations of the algorithm and offer additional functionalities for working with the resulting singular values and vectors.

Overall, understanding how SVD works allows us to decompose a matrix into its fundamental components and gain insights into the underlying structure of the data. This knowledge enables us to perform a wide range of tasks such as dimensionality reduction, data analysis, and machine learning applications.

How to implement the algorithm in Python?

The Singular Value Decomposition algorithm is a powerful mathematical technique used to decompose a matrix into its constituent components. It is widely used in various applications such as data compression, dimensionality reduction, and matrix approximation. In this section, we will explore the algorithmic steps involved in SVD and demonstrate its implementation in Python using code snippets.

In Python, you can implement it using the following steps:

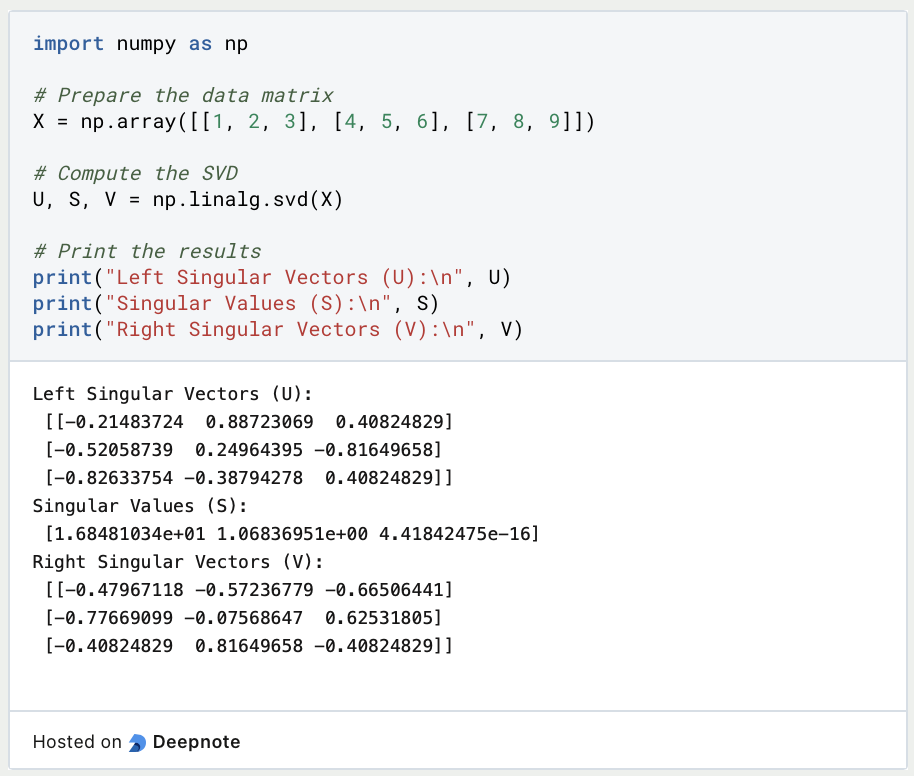

- Import the required libraries:

- Prepare the data matrix:

- Compute the SVD using NumPy:

- Interpret the results:

- The matrix U represents the left singular vectors.

- The vector S contains the singular values.

- The matrix V represents the right singular vectors.

Here is an example implementation of the algorithm in Python:

By running this code, you will obtain the left singular vectors, singular values, and right singular vectors of the input matrix X. These components can be further analyzed and utilized for various purposes, such as dimensionality reduction or matrix reconstruction.

The implementation of SVD in Python using libraries like NumPy provides a convenient and efficient way to perform matrix decomposition and utilize the resulting components for further analysis and applications.

What are the properties and insights from SVD?

The Singular Value Decomposition provides several important properties and insights that are valuable in understanding and analyzing data. By decomposing a matrix into its singular values and vectors, SVD offers a deeper understanding of the underlying structure and relationships within the data.

Here are some key properties and insights:

- Rank: The rank of a matrix is determined by the number of non-zero singular values in the diagonal matrix Σ. It represents the intrinsic dimensionality or the maximum number of linearly independent rows or columns in the matrix. The rank is useful for dimensionality reduction and determining the essential features of the data.

- Orthogonality: The left singular vectors (columns of matrix U) and the right singular vectors (rows of matrix V^T) are orthogonal to each other. This orthogonality property implies that the singular vectors represent distinct directions or axes along which the original matrix is transformed.

- Principal Components: The singular vectors associated with the largest singular values capture the most significant variation in the data. These vectors, known as the principal components, provide insights into the major patterns and structures present in the data. They can be used for dimensionality reduction, data visualization, and feature selection.

- Data Compression: SVD enables data compression by approximating the original matrix using a subset of singular values and vectors. By selecting the most important components, it is possible to represent the data with reduced dimensions while retaining a significant amount of information. This is useful for efficient storage and transmission of large datasets.

- Matrix Reconstruction: SVD allows for the reconstruction of the original matrix using the selected singular values and vectors. This reconstruction can be used to fill in missing values, denoise data, or recover the original matrix from its low-rank approximation.

- Matrix Inversion and Linear Systems: It provides a convenient way to compute the inverse of a matrix and solve linear systems of equations. By manipulating the singular values and vectors, it is possible to efficiently perform these operations.

The properties and insights from SVD have applications in various fields, including data analysis, image processing, recommendation systems, and signal processing. By understanding these properties, we can leverage the power to gain valuable insights, reduce data dimensionality, and solve complex problems efficiently.

What are the applications of SVD?

SVD has many applications in data analysis, including:

- Image compression: SVD is used to reduce the size of digital images while maintaining their quality. By keeping only the most important singular values, we can significantly reduce the size of the image without losing much of its detail.

- Data compression: The Singular Value Decomposition can be used to compress large datasets, making them easier to store and analyze. By keeping only the most important singular values, we can reduce the dimensionality of the data while retaining most of its important features.

- Feature extraction: It is used to extract important features from high-dimensional datasets. By keeping only the most important singular vectors and corresponding singular values, we can reduce the dimensionality of the data while retaining most of its important features.

- Recommendation systems: The algorithm is used to discover latent features in user-item matrices that can be used to make personalized recommendations. By keeping only the most important singular vectors and corresponding singular values, we can identify patterns in the data that can be used to make accurate recommendations.

What are the extensions and variations of the SVD?

Singular Value Decomposition has been extended and modified in various ways to address specific requirements and challenges in different domains. Some notable extensions and variations of SVD include:

Truncated SVD, which retains only the top-k singular values and their corresponding singular vectors, allowing for dimensionality reduction and approximation of the original matrix while preserving important information.

Randomized SVD, an efficient approximation algorithm that uses randomized sampling techniques to compute an approximate SVD. It provides faster computation times and is suitable for large-scale datasets.

Probabilistic SVD, which introduces probabilistic models to estimate the SVD. It combines concepts from probabilistic graphical models and matrix factorization to handle missing data and incorporate uncertainty in the decomposition.

Non-negative Matrix Factorization (NMF), a variation of SVD that restricts the factor matrices to have non-negative entries. It is useful for non-negative data, such as text data or image data with non-negative pixel values, and provides interpretable factorizations.

Robust Principal Component Analysis (PCA), an extension of SVD that handles outliers and data corruption by decomposing a matrix into low-rank and sparse components, separating the underlying structure from outliers or noise.

Incremental SVD, which allows for updating the decomposition of a matrix when new data points or columns are added. It efficiently updates the SVD without recomputing the entire decomposition, making it suitable for evolving data scenarios.

Kernel PCA, an extension of SVD to non-linear mappings by applying the kernel trick. It enables non-linear dimensionality reduction and is commonly used in machine learning for feature extraction.

Collaborative Filtering, an application of matrix factorization, including SVD, for recommender systems. It uses user-item rating matrices to predict user preferences and provide personalized recommendations.

These extensions and variations of SVD offer enhanced capabilities and address specific challenges in different domains, providing flexibility, efficiency, and improved interpretability. They expand the range of applications where SVD and its variants can be effectively utilized.

What are the limitations of SVD?

While Singular Value Decomposition is a powerful technique for analyzing and extracting information from data, it also has certain limitations and considerations that need to be taken into account:

- Computational Complexity: SVD can be computationally expensive, especially for large matrices. The time and memory requirements increase with the size of the matrix, making it impractical for extremely large datasets. Various techniques, such as randomized SVD, have been developed to mitigate this issue.

- Interpretability of Components: While it provides a decomposition of the data into singular values and vectors, the interpretation of these components may not always be straightforward. The singular vectors do not have a direct mapping to the original features, and their meaning can be challenging to interpret in complex datasets.

- Sensitivity to Noise: SVD is sensitive to noise in the data. Outliers or measurement errors can significantly impact the decomposition and affect the accuracy of the results. Preprocessing techniques like data cleaning and normalization can help mitigate this issue.

- Rank Approximation: In some cases, determining the optimal rank for matrix approximation can be challenging. The selection of the number of singular values to retain requires careful consideration and may involve trade-offs between preserving information and reducing dimensionality.

- Data Interpretation: SVD can uncover hidden patterns and structures in the data, but it does not provide explicit interpretations of those patterns. Additional domain knowledge and context are often necessary to understand the underlying meaning of the decomposed components.

- Non-uniqueness: It is not unique as multiple decompositions can exist for a given matrix. Different implementations or variations in the algorithm can produce slightly different results. It is important to understand this non-uniqueness and assess the stability of the decomposition.

- Missing Data Handling: SVD does not handle missing data directly. Imputation techniques need to be applied beforehand to handle missing values, which can introduce additional uncertainty and potential biases in the analysis.

- Scalability: SVD may face scalability issues when dealing with high-dimensional data. The curse of dimensionality can affect the performance and accuracy of the decomposition. Dimensionality reduction techniques, such as PCA, can be applied to address this challenge.

Understanding the limitations of SVD helps in making informed decisions when applying this technique to real-world problems. It is crucial to consider these factors and evaluate whether this approach is suitable for the specific dataset and analysis objectives.

This is what you should take with you

- Singular Value Decomposition (SVD) is a powerful matrix factorization technique used for dimensionality reduction, data compression, and extracting latent features.

- It provides a compact representation of the data by decomposing a matrix into three matrices: U, Σ, and V.

- The singular values in Σ represent the importance of the corresponding singular vectors in U and V, capturing the variability and patterns in the data.

- The algorithm has a wide range of applications, including image processing, text mining, recommendation systems, and data analysis.

- It offers insights into the structure and relationships within the data, enabling better understanding and interpretation.

- SVD has limitations, such as computational complexity for large datasets and challenges in interpreting the components.

- Careful consideration of preprocessing, noise handling, and rank selection is necessary for accurate and meaningful results.

- Despite its limitations, SVD remains a valuable tool in data analysis and provides a foundation for other techniques like Principal Component Analysis (PCA).

- Ongoing research and advancements continue to improve the algorithms and its applications in various domains.

- Overall, SVD is a fundamental technique in linear algebra and data analysis, offering valuable insights and opportunities for knowledge extraction.

What is the Lasso Regression?

Explore Lasso regression: a powerful tool for predictive modeling and feature selection in data science. Learn its applications and benefits.

What is the Omitted Variable Bias?

Understanding Omitted Variable Bias: Causes, Consequences, and Prevention in Research." Learn how to avoid this common pitfall.

What is the Adam Optimizer?

Unlock the Potential of Adam Optimizer: Get to know the basucs, the algorithm and how to implement it in Python.

What is One-Shot Learning?

Mastering one shot learning: Techniques for rapid knowledge acquisition and adaptation. Boost AI performance with minimal training data.

What is the Bellman Equation?

Mastering the Bellman Equation: Optimal Decision-Making in AI. Learn its applications & limitations. Dive into dynamic programming!

What is the Poisson Regression?

Learn about Poisson regression, a statistical model for count data analysis. Implement Poisson regression in Python for accurate predictions.

Other Articles on the Topic of Singular Value Decomposition

The Massachusetts Institute of Technology (MIT) also published an interesting article on the topic.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.