Scikit-Learn (also known as sklearn for short) is a Python library that makes it easy to implement Machine Learning applications. The library builds on common Python data structures, such as NumPy arrays, and is therefore highly compatible with other modules. Its source code can be found on GitHub.

What is Scikit-Learn?

The Scikit-Learn software library enables the use of AI models in Python and saves the user a lot of programming effort by making common models, such as decision trees or k-means clustering, available in a few lines of code.

The best-known prerequisites for using sklearn are NumPy and SciPy, on which the library is largely based. There are also dependencies on joblib and threadpoolctl. The project was started in 2007 and is available on GitHub under the “3-Clause BSD” license.
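To check which versions of these dependencies are present in a given environment, a short sketch like the following can help. It assumes scikit-learn is already installed and only uses the `sklearn.__version__` attribute and the `sklearn.show_versions()` helper.

```python
import sklearn

# Print the installed scikit-learn version.
print(sklearn.__version__)

# Print system information and the versions of the Python dependencies
# that scikit-learn finds in the current environment.
sklearn.show_versions()
```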

Which Applications can be implemented with the Library?

Scikit-Learn can be used to implement a wide variety of AI models, from both supervised and unsupervised learning. In general, the models can be divided into the following groups:

- Classification

- Regression

- Dimensionality reduction

- Data preprocessing and visualization

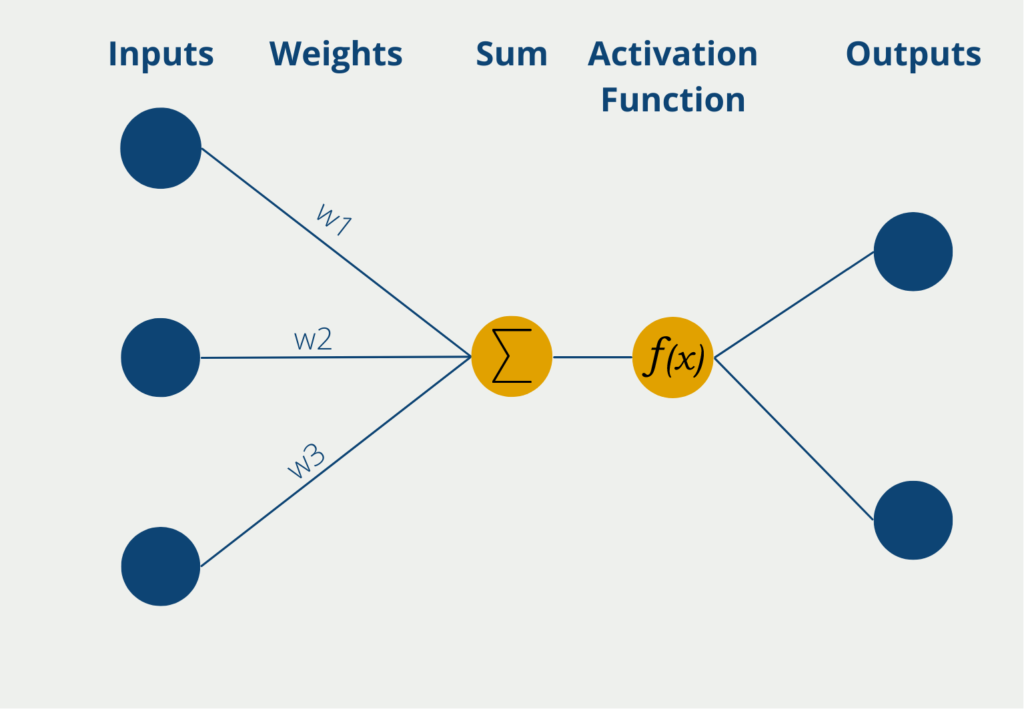

In the artificial intelligence environment, the library has lost some popularity because neural networks have attracted more and more interest. These can only be built in a very rudimentary way using Scikit-Learn, which is why many users are switching to libraries such as TensorFlow for this purpose. In addition, neural networks now outperform classical models on many tasks.
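To illustrate what the rudimentary neural network support looks like, here is a minimal sketch using `MLPClassifier`, a simple fully connected network. The dataset (Iris), the hidden layer size, and the number of iterations are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load a small example dataset and hold out 30% for testing.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Scale the features, then train a network with one hidden layer of 16 units.
# Layer size and max_iter are arbitrary choices for this sketch.
mlp = make_pipeline(
    StandardScaler(),
    MLPClassifier(hidden_layer_sizes=(16,), max_iter=2000, random_state=0),
)
mlp.fit(X_train, y_train)

# Accuracy on the held-out test data.
mlp_accuracy = mlp.score(X_test, y_test)
print(f"Test accuracy: {mlp_accuracy:.2f}")
```

There are no convolutional or recurrent layers and no GPU support here, which is the kind of limitation that leads users to dedicated deep learning libraries.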

Which Supervised Learning Algorithms are supported?

Scikit-learn provides a wide range of supervised learning models for Machine Learning tasks. These include:

- Linear regression: A model that fits a linear equation to the data.

- Logistic regression: A model that uses a logistic function to estimate the probability of a binary outcome.

- Decision trees: A model that recursively partitions the data into subsets based on the most discriminative features.

- Random forests: An ensemble model that combines multiple decision trees to reduce overfitting.

- Support vector machines (SVM): A model that finds the hyperplane that best separates the data into different classes.

- Naive Bayes classifiers: A family of probabilistic models that use Bayes’ theorem to predict class probabilities.

- k-Nearest Neighbors (k-NN): A model that predicts the class of a sample based on the classes of its k nearest neighbors in the training data.

- Neural networks (including Multi-Layer Perceptron): A family of models inspired by the structure and function of the human brain that can learn complex patterns in the data.

- Gradient Boosting Machines (GBM): An ensemble model that sequentially fits weak models to the residuals of the previous model to improve accuracy.

- AdaBoost: An ensemble model that combines multiple weak models to create a strong model.

- Bagging: An ensemble model that fits multiple models on bootstrap samples of the data to reduce variance.

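- Stochastic Gradient Descent (SGD): Strictly an optimization algorithm rather than a model, it iteratively updates model parameters to minimize a loss function. Scikit-learn uses it to fit linear models via SGDClassifier and SGDRegressor.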

- Ridge and Lasso Regression: Regularized linear regression models that add a penalty term to the loss function to prevent overfitting.

- Elastic Net: A hybrid model that combines the L1 penalty of Lasso and the L2 penalty of Ridge regression to balance bias and variance.

Each model has its own strengths and weaknesses, making it important to choose the appropriate model for the specific task at hand. Scikit-learn also provides utilities for preprocessing, model selection, and evaluation to streamline the Machine Learning workflow.
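Because all of these models share the same `fit`/`predict` interface, comparing several of them takes little code. The following sketch compares three of the listed classifiers with 5-fold cross-validation; the choice of models, the Iris dataset, and the hyperparameters are assumptions made for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Distance- and gradient-based models get a scaling step in a pipeline;
# the tree-based ensemble does not need one.
models = {
    "logistic regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
    "k-nearest neighbors": make_pipeline(
        StandardScaler(), KNeighborsClassifier(n_neighbors=5)
    ),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Evaluate each model with 5-fold cross-validation (mean accuracy).
cv_scores = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=5)
    cv_scores[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f}")
```

Wrapping preprocessing and model in one pipeline ensures that the scaler is fitted only on the training folds during cross-validation.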

Which Unsupervised Learning Algorithms are supported in Scikit-Learn?

Scikit-learn supports several unsupervised learning models, including:

- Clustering: This involves grouping similar instances together based on a given distance metric. Scikit-learn provides various clustering algorithms, including KMeans, DBSCAN, and hierarchical clustering.

- Dimensionality reduction: This involves reducing the number of features in a dataset while preserving the essential information. Scikit-learn provides Principal Component Analysis (PCA), t-distributed Stochastic Neighbor Embedding (t-SNE), and Non-negative Matrix Factorization (NMF) for dimensionality reduction.

- Anomaly detection: This involves identifying instances that deviate significantly from the norm in a dataset. Scikit-learn provides One-Class SVM and Local Outlier Factor (LOF) for anomaly detection.

- Density estimation: This involves estimating the probability density function of a dataset. Scikit-learn provides Gaussian Mixture Models (GMM) and Kernel Density Estimation (KDE) for density estimation.

Each of these unsupervised learning models has its own strengths and weaknesses and can be applied to various types of data analysis problems.
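As a sketch of two of the techniques listed above, the following example clusters the Iris measurements with `KMeans` and projects them to two dimensions with `PCA`. The number of clusters and components are assumptions chosen for this small dataset; the class labels are deliberately ignored.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Load the features only; unsupervised methods do not use the labels.
X, _ = load_iris(return_X_y=True)
X_scaled = StandardScaler().fit_transform(X)

# Group the samples into three clusters.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
cluster_labels = kmeans.fit_predict(X_scaled)

# Reduce the four features to two principal components.
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)

print(cluster_labels[:10])
print(X_2d.shape)
print(pca.explained_variance_ratio_)
```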

What are the Advantages of Scikit-Learn?

Benefits of the library include:

- Simplified application of machine learning tools, data analytics, and data visualization

- Commercial use without licensing fees

- A high degree of flexibility in fine-tuning models

- Based on common and powerful data structures from NumPy

- Usable in different contexts

Despite these advantages, it should be noted that using machine learning models requires solid prior knowledge. Used carelessly, such libraries can easily lead to incorrect conclusions.

Sklearn makes these models particularly easy to use and thus accessible to many users. However, it is important to be clear about which models are suitable and whether the data used is reliable.
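One common source of incorrect conclusions is judging a model only on the data it was trained on. The following sketch, which assumes the Iris dataset and an unpruned decision tree purely for illustration, holds out part of the data and reports both scores so that they can be compared.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Keep 30% of the samples aside; the model never sees them during training.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

clf = DecisionTreeClassifier(random_state=42).fit(X_train, y_train)

# Accuracy on the training data tends to be overly optimistic;
# accuracy on the held-out data is the more honest estimate.
train_accuracy = clf.score(X_train, y_train)
test_accuracy = clf.score(X_test, y_test)
print(f"Training accuracy: {train_accuracy:.2f}")
print(f"Test accuracy:     {test_accuracy:.2f}")
```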

How to use the Library in Python?

The Iris Dataset is a popular training dataset for building a classification algorithm. It is an example from biology and deals with the classification of so-called iris plants. For each flower, the length and width of the petal and of the so-called sepal are available. Based on these four measurements, the model learns which of the three iris types the flower belongs to.

With the help of Scikit-Learn, a decision tree can be trained in just a few lines of code:

```python
# Import modules
from sklearn.datasets import load_iris
from sklearn import tree

# Load the Iris dataset
iris = load_iris()

# Define the features X and the target classes y
X, y = iris.data, iris.target

# Set up the decision tree classifier
clf = tree.DecisionTreeClassifier()

# Train it on the Iris data
clf = clf.fit(X, y)
```

So we can train a decision tree relatively easily by defining the input variable X and the classes y to be predicted, and training the decision tree from Scikit-Learn on them. With the function “predict_proba” and concrete values, a classification can then be made:

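```python
# Predict class probabilities for artificial values
clf.predict_proba([[4.5, 8.2, 2.1, 1.7]])
```

Out:

```
array([[1., 0., 0.]])
```

So this flower with the made-up values would belong to the first class according to our decision tree. This species is called “Iris Setosa”. Note that the classifier was created without a fixed random_state, and scikit-learn breaks ties between equally good splits randomly, so the tree, and with it the prediction for such unusual values, may differ between runs.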
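To get the class name directly instead of a probability vector, the prediction can be mapped to `iris.target_names`. The sketch below makes two assumptions beyond the code above: it fixes `random_state=0` so that the tree is reproducible, and it uses the first row of the dataset as the sample instead of made-up values.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X, y = iris.data, iris.target

# A fixed random_state makes the trained tree reproducible.
clf = DecisionTreeClassifier(random_state=0).fit(X, y)

# Use the first flower of the dataset as an example sample.
sample = X[:1]

# predict returns the class index, which target_names maps to a name.
predicted_index = clf.predict(sample)[0]
predicted_name = iris.target_names[predicted_index]
print(predicted_name)

# predict_proba returns one probability per class.
probabilities = dict(zip(iris.target_names, clf.predict_proba(sample)[0]))
print(probabilities)
```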

What is the future of Scikit-Learn?

Scikit-learn has been widely adopted in the machine learning community due to its user-friendly interface, a wide range of algorithms, and comprehensive documentation. The project has continued to evolve over the years, with new releases adding new features and improving performance.

As the field of machine learning continues to grow, scikit-learn is likely to play an increasingly important role in making these methods more accessible to researchers, developers, and businesses. Here are some potential areas where scikit-learn may see further development in the future:

- Deep learning integration: While scikit-learn already offers a variety of powerful algorithms, deep learning methods have become increasingly popular in recent years due to their ability to handle complex data. Scikit-learn could potentially integrate some of these methods, such as neural networks and convolutional neural networks, to provide a more complete set of tools for machine learning practitioners.

- Interpretability: As Machine Learning models are increasingly used in high-stakes applications such as healthcare and finance, the ability to interpret and understand their decisions becomes more important. Scikit-learn already provides some tools for this, such as permutation feature importance and partial dependence plots in its inspection module, and could extend its methods for explaining how a model makes decisions.

- Distributed computing: As datasets continue to grow in size, traditional machine learning algorithms can become slow and memory-intensive. Scikit-learn could potentially incorporate distributed computing frameworks such as Apache Spark to enable faster and more scalable processing.

- Automated machine learning: Automated machine learning (AutoML) is a rapidly growing field that aims to automate many of the steps involved in machine learning, such as feature selection and hyperparameter tuning. Scikit-learn could potentially integrate some of these methods to make machine learning even more accessible to non-experts.

- Privacy-preserving Machine Learning: With growing concerns around data privacy, methods for performing machine learning on sensitive data without revealing it are becoming more important. Scikit-learn could potentially incorporate privacy-preserving machine learning methods, such as federated learning, to provide more options for working with sensitive data.

Overall, scikit-learn is well-positioned to continue playing a prominent role in the Machine Learning ecosystem in the coming years. As the field continues to evolve, scikit-learn will likely continue to adapt and incorporate new methods and techniques to provide a comprehensive set of tools for machine learning practitioners.
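As a sketch of the interpretability point in the list above, the `sklearn.inspection` module offers `permutation_importance`, which measures how much a model's score drops when a single feature is shuffled. The random forest, the Iris dataset, and the number of repeats are illustrative assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=0, stratify=iris.target
)

forest = RandomForestClassifier(n_estimators=100, random_state=0)
forest.fit(X_train, y_train)

# Shuffle each feature 10 times on the test data and record the score drop.
result = permutation_importance(
    forest, X_test, y_test, n_repeats=10, random_state=0
)

# List the features from most to least important.
ranking = sorted(
    zip(iris.feature_names, result.importances_mean),
    key=lambda pair: pair[1],
    reverse=True,
)
for feature_name, importance in ranking:
    print(f"{feature_name}: {importance:.3f}")
```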

This is what you should take with you

- Scikit-Learn (also known as sklearn for short) is a Python library with which Machine Learning applications can be implemented in just a few lines of code.

- The library can be used for various applications in the areas of classification, dimensionality reduction, or regression.

- Sklearn is very popular because it is based on NumPy, is easy to use, and offers a high degree of flexibility.

Other Articles on the Topic of Scikit-Learn

- This GitHub repository contains the source code of Scikit-Learn.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.