Bayesian networks are probabilistic graphical models based on probability theory and Bayes’ theorem, in particular. The aim is to model and illustrate the complex dependencies between individual components compactly and understandably. Bayesian networks are used in a wide variety of applications, such as medical diagnosis or risk assessment.

In this article, we will look at Bayesian networks in detail and understand their structure in more detail. To do this, we will not only look at Bayes’ theorem but also ask ourselves how to create such a network and draw conclusions from it. After we have built a Bayesian network in Python, we will compare this method with other similar methods.

What are Bayesian Networks?

Bayesian networks are graphical models that represent the probabilistic dependencies between variables in a data set. Nodes and edges are used for this, with nodes representing the individual variables and the edges describing the (conditional) probabilities between these variables. The aim here is to represent the complex dependencies and dynamics between the nodes and make them easier to understand.

Such models are extremely valuable in a wide variety of areas, especially when they support complex decisions where there is no absolute certainty and probabilities are therefore used. The following applications use Bayesian Networks:

- Machine Learning: Bayesian networks can be used in the field of machine learning for data classification, feature extraction or pattern recognition. In this way, they can help train models based on uncertain or incomplete data.

- Decision-making: Bayesian networks are advantageous in this area as they quantify the relationships between factors and can also clearly visualize causal relationships.

- Data Analysis: In data analysis, Bayesian networks help to recognize patterns in large amounts of data and also to make predictions that are influenced by random variations.

The advantage of Bayesian networks is that they make it possible to represent complex dependencies without making rigid assumptions.

What does Bayes’ Theorem say?

To understand the structure of a Bayesian network in detail, it is important to understand the mathematical and statistical principles. In this section, we will therefore familiarize ourselves with probability theory, conditional probabilities, and, finally, Bayes’ theorem.

In probability theory, we deal with the quantification of uncertainties. In other words, we try to describe events whose outcome is uncertain. The probability then indicates the degree of uncertainty with which the event will occur. Let’s assume we want to quantify for a residential area whether the event “burglary” will occur or not.

Over 10 years, it is possible that there will never be a burglary in the residential area, but it is just as possible that a burglary will occur. We can map this uncertainty as to whether the “burglary” event will occur with a probability. For example, a burglary probability of 10% means that there is a 10% probability of a burglary occurring in ten years. In contrast, the “burglary” event will not occur 90% of the time.

A special form of probability here is the so-called conditional probability, which simply expresses the probability of an event occurring under the condition that another event has already occurred with certainty.

Assuming we have two variables A and B, the conditional probability P(A|B) represents the probability that A will occur if B has already occurred with certainty. A simple example is an alarm system and the associated conditional probability P(Alarm|Burglary) or in other words: “What is the probability that the alarm system will go off if a burglary has certainly occurred?”

Bayes’ theorem, in turn, provides a way to change our assumptions of probabilities over time as new information or data becomes available. Generally speaking, the conditional probability between events A and B is given by the following formula:

\(\)\[P(A|B)\ =\ \frac{P(B|A)\ \cdot P(A)}{P(B)} \]

Here are:

- \(P(A|B)\) is the conditional probability that event A will occur if event B has already occurred with certainty.

- \(P(B|A)\) is the conditional probability that event B will occur if event A has already occurred with certainty.

- \(P(A)\) is the probability that event A will occur.

- \(P(B)\) is the probability that event B will occur.

In addition to updating probabilities, Bayes’ theorem is also often used when the conditional probability \(P(A|B)\) is unknown, but the conditional probability \(P(B|A)\) is much easier to determine.

Let’s return to our example with the alarm system and the burglaries. Let’s assume we have the following events:

- Event \(A\): The alarm system goes off.

- Event \(B\): A burglary has taken place.

Using Bayes’ theorem, we now want to calculate the conditional probability that a burglary has taken place \((B)\) when the alarm goes off \((A)\), i.e. \(P(B|A)\). Um das Bayes Theorem anwenden zu können benötigen wir die folgenden Wahrscheinlichkeiten:

- \(P(B)\) is the probability that a burglary will occur. We assume that the risk of a break-in is very low, i.e. \(P(B) = 0.01\), or the probability of a break-in is 1%.

- \(P(A|B)\) describes the probability that the alarm system will go off if a break-in occurs. This depends on many factors, such as whether all windows are covered by the system or how often there are power failures during which break-ins can occur but the alarm system cannot be triggered. For our example, we assume a probability of 90%, i.e. 0.9, so that break-ins are almost always detected by the alarm system.

- \(P(A)\) describes the probability that the alarm system will go off, regardless of whether a break-in has taken place or not. This probability is made up of two cases:

- First case: The alarm system goes off because there has been a break-in. This probability results from the probability that there was a break-in \(P(B)\), multiplied by the probability that the alarm system also went off due to the break-in, i.e. \(P(A|B)\).

- Second case: The alarm system goes off even though there has been no break-in. This probability results from the fact that there was no break-in, i.e. \(1 – P(B)\) multiplied by the probability that the alarm system had a false alarm, i.e. \(P(A|\neg B)\). We assume that the manufacturer has a false alarm rate of 5%, i.e. 0.05. This results in the probability \(P(A)\) that an alarm is triggered:

\(\)\[P(A)\ =\ P(A|B)\ \cdot (B)\ +\ P(A|\neg B)\ \cdot (\neg B)\ =\ (0,9\ \cdot 0,01)\ +\ (0,05\ \cdot 0,99)\ =\ 0,0585\]

With the help of these values, we can now calculate the conditional probability (P(B|A)) using Bayes’ theorem:

\(\)\[P(B|A)\ =\ \frac{P(A|B)\ \cdot P(B)}{P(A)}\ =\ \frac{0,9\ \cdot 0,01}{0,0585}\ =\ 0,1538\]

This means that the probability that there is a break-in when the alarm system goes off is around 15%. In this case, Bayes’ theorem helps to realistically estimate the probability of a break-in by taking into account how reliable the alarm system is and how often false alarms occur.

How is a Bayesian Network structured?

The structure of Bayesian Networks is based on the two basic concepts of nodes and edges, which can be used to build and visualize complex relationships between different random variables. In this section, we introduce the concepts and illustrate them with a simple example.

Nodes

The nodes in a Bayesian network represent random variables that can assume different states. Each node has the ability to take on either discrete values, such as “yes” or “no”, or continuous values, such as temperature. In our example, we consider three different nodes:

- Weather: This node is discrete and has different states, such as “sunny”, “rainy” or “snowy”.

- Traffic: This node describes the traffic situation on an individual’s way to work, which can assume the states “traffic jam” or “no traffic jam”.

- Going to work: The last node decides whether the individual drives to work or works in the home office. It can therefore assume the states “Yes” or “No”.

In order to describe the dependencies of these nodes and place them in a connection, we next need the edges that build the causal relationships.

Edges

The edges that run between the nodes represent the relationships between the random variables. It is important here that these relationships are directed and thus run from one node to another, whereby the direction has a meaning. For example, a directed edge from the “Weather” node to the “Traffic” node indicates that the weather has an influence on traffic, as cars drive more slowly when it is snowing and therefore the risk of traffic jams increases.

The directed edges are important for the structure of the network, as they define the logical dependencies and therefore have a decisive influence on how the network works.

Graphical representation

The Bayesian network is represented using a so-called directed acyclic graph (DAG), which is characterized by the fact that each edge has a clear direction and points from one node to another, but not in both directions. In addition, there are no cyclical dependencies between the nodes.

However, this representation does not really help with statistical analysis, as the relationships can be recognized, but no concrete values and predictions can be derived.

Probabilities

In order to be able to make concrete predictions with the Bayesian network and quantify the dependencies, we need to add the probabilities for the network defined above.

There are two types of probabilities that need to be distinguished. The prior probability is defined for all “parent nodes”, i.e. the nodes that only have outgoing edges and no incoming ones. These nodes are completely independent of the other nodes. In our example, the weather is such a parent node.

All nodes that are dependent on other nodes require conditional probabilities, as it must be defined how this node behaves depending on the state of the parent node. In our example, “Traffic” and “Go to work” are such dependent nodes.

- Prior Probabilities

The so-called prior probabilities, which represent the initial states of the random variables without the influence of other nodes, are defined for all parent nodes. In our case, this only applies to the “Weather” node, which could have the following probabilities, for example

- \(P(W = sunny) = 0.6\)

- \(P(W = rainy) = 0.3\)

- \(P(W = snowy) = 0.1\)

These probabilities can be found through statistical analysis, for example by observing the weather over a year. The probability for the condition “sunny” is then calculated by counting all sunny days and dividing by the number of days examined, i.e. 365. It is important here that the probabilities of the states add up to one so that it is ensured that one of the states will occur.

2. Conditional probabilities

Conditional probabilities must be established for the remaining nodes that depend on other nodes, i.e. have parents, as the achievement of a state depends on the state of the parent node. For traffic, for example, the following table results, which include the various states of the weather, as traffic depends on the weather. The first line shows that traffic jams occur 10% of the time in sunny weather. Correspondingly, in 90% of cases, there is no congestion in sunny weather.

| Weather | P(V = Traffic Jam) | P(V = No Traffic Jam) |

| sunny | 0,1 | 0,9 |

| rainy | 0,5 | 0,5 |

| snowy | 0,8 | 0,2 |

The probabilities for the states “Go to work” are somewhat more complex, as this variable depends on two nodes, namely “Traffic” and “Weather”, which is why more rows are required.

| Weather | Traffic | P(A = Yes) | P(A = No) |

| sunny | Traffic Jam | 0,6 | 0,4 |

| sunny | No Traffic Jam | 0,9 | 0,1 |

| rainy | Traffic Jam | 0,4 | 0,6 |

| rainy | No Traffic Jam | 0,7 | 0,3 |

| snowy | Traffic Jam | 0,2 | 0,8 |

| snowy | No Traffic Jam | 0,5 | 0,5 |

The first line then says that when the weather is sunny and there is a traffic jam, there is a 60% probability that an employee will come to the office. In the same way, it can also be interpreted to mean that, given the circumstances, around 60% of the workforce will show up at the office.

Now that we have understood the general structure of the Bayesian network, there is still the crucial question of how to arrive at the dependencies and the calculated probabilities. In doing so, we will also look into the question of where the network got its name from.

How do you create a Bayesian Network and calculate the probabilities?

To build a Bayesian network from a data set, we need two steps. Firstly, we need to recognize and build the edges between the nodes and secondly, we need to calculate the probabilities for the individual events and the conditional probabilities.

Parametric Learning

Parametric learning involves the process of calculating the parameters of the Bayesian network, i.e. the probability values of the individual nodes, from a given data set. These parameters include both the probabilities of the individual nodes and the dependencies between the nodes. The following methods, for example, can be used for parametric learning:

- Maximum Likelihood Estimation (MLE): In this approach, the probabilities are selected in such a way that they most likely explain the observed data. The probability represents the relative frequency of the events, which maximizes the probability of occurrence of the actual observed data. Using this approach, for example, we would estimate a probability of 70% for the weather “sunny” if it was sunny on 70% of the observed days.

- Bayesian Estimation: If the available data set is small or incomplete, Bayesian estimation can also be used. In contrast to MLE, this also incorporates the so-called a priori assumption, i.e. previous knowledge, into the estimate. This is then only updated by the new, observed data.

Example of Bayesian Estimation

Let’s assume we want to estimate the probabilities for the weather events and have two different data sources that we want to include in the estimate:

- Prior Knowledge: We learned from the German Weather Service that the probability of rainy weather for Germany as a whole is 0.3. This figure was calculated by collecting weather data over the years.

- New Data: In order to build a Bayesian network, we have observed the weather at our location over the last seven days and found that it has rained on four out of seven days.

Using these two sources, we want to calculate the probability of a rainy day using Bayesian estimation and use both sources.Anhand der Bayes’schen Formel müssen wir dazu die folgende Gleichung lösen:

\(\) \[P\left(rain\middle| D\right)=\frac{P\left(D\middle| rain\right)\cdot P\left(rain\right)}{P\left(D\right)} \]

Here are:

- \(P(D|rain)\): The probability of rain from the newly created dataset where it was rainy on a total of four days in seven days, i.e. 4/7 = 0.57.

- \(P(rain)\) is our a priori probability for rainy data, which we obtain from our prior knowledge. In our case, this is 0.3.

- \(P(D)\) is the overall probability of the data, i.e. how likely it is to observe the data regardless of what the weather is actually like. It is an important part of the calculation, as it provides a normalization and thus ensures that the values are later scaled correctly. If we assume that only the states “rain” and “no rain” can be observed, the overall probability is \(P(D) = 0.5\).

By inserting the specific values, we calculate a posterior probability of 0.342. This means that the probability of rain has increased slightly due to our new knowledge from the seven days of observations.t erhöht hat.

\(\)\[P\left(rain\middle| D\right)=\frac{P\left(D\middle| rain\right)\cdot P\left(rain\right)}{P\left(D\right)}\ =\ \frac{0,57\cdot 0,3}{0,5}\ =\ 0,342\]

Structural Learning

Now that we can calculate and update the individual probabilities, we still need to find out how to derive the edges between the nodes and their direction from the data set. Structural learning deals precisely with this question and determines which nodes are connected and in which direction the dependencies should point.

The following two approaches are primarily used for this:

- Score-based methods: In these methods, different possible network structures resulting from the data are calculated and compared using a specific evaluation measure. For example, the Bayesian Information Criterion (BIC) or the Akaike Information Criterion can be used for this. The network structure with the highest score is then used.

- Constraint-based methods: This approach is based on statistical tests, which are performed between the different variables to detect dependencies or independence between the nodes. A well-known algorithm in this area is the PC algorithm, which performs pairwise independence tests and creates an edge or not between the nodes based on the results.

How can you implement a Bayesian Network in Python?

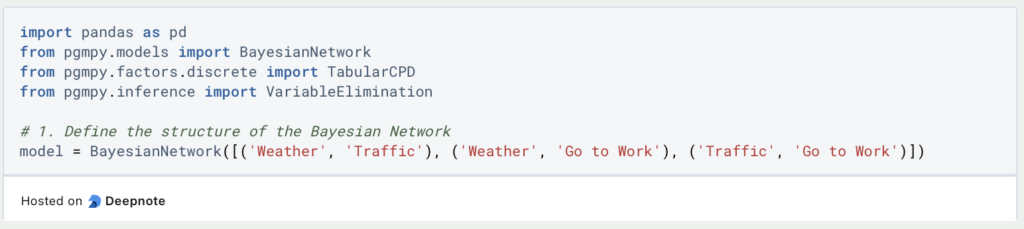

Bayesian networks can be created in Python using various modules. In our example, we use pgmpy, which allows us to define the structure of the network and the probabilities. It can then be used to make decisions.

In addition to pgmpy, we also import pandas to store the conditional probabilities and transfer them to the model. We then set up the model with the different nodes. Each of the Python tuples specifies an edge that runs between two nodes. The first node is the start point of the edge and the second node is the end point.

Once the network structure has been defined, we can now enter the probabilities. It is important here that the number of variables is always entered, i.e. how many probabilities are available. The parent nodes are also specified, if available.

This model can now be used for conclusions and the probability that an employee will go to work when it is raining and there is a traffic jam can be calculated, for example.

What are the Advantages and Disadvantages of Bayesian networks?

Bayesian networks are a powerful tool for visualizing dependencies and probabilities in data sets and also for updating probabilities using new knowledge. This is why they are used in many applications. In this section, we look at the advantages and disadvantages of this method.

Advantages:

- Flexibility: Bayesian networks are characterized by the fact that they can be used for a variety of applications and their dependencies. They are able to represent linear and non-linear dependencies and can be easily adapted to different contexts.

- Handling incomplete data: Even when datasets are not complete, Bayesian Networks provide the ability to make informed decisions by estimating missing values and calculating the best possible probabilities due to their probabilistic nature.

- Transparent decision-making: By visualizing the network using nodes and edges, decision-making is transparent and comprehensible. These factors play a particularly important role in applications such as medicine or law.

Disadvantages:

- Computing effort: For data sets with many variables and a resulting large Bayesian network, the computing effort is immense and the calculation of decisions can take a very long time.

- Problems with structural learning: Recognizing the correct network structure is often ambiguous and its calculation is also very complex. If there are many nodes, the number of possible network structures increases significantly. In addition, it is often difficult to find statistically significant dependencies between the variables.

- Dealing with uncertainties: While Bayesian networks are good at modeling and mapping uncertainties, they can lose immense reliability if the data set contains a lot of noise.

Bayesian networks can be a very powerful tool for decision-making, but they are also very complex. In order to exploit their full potential, the underlying data set and the statistical methods must therefore be thoroughly understood.

This is what you should take with you

- Bayesian networks are a type of probabilistic, graphical model for illustrating dependencies that work with uncertainties.

- They get their name from the use of Bayes’ theorem, which helps to update the probabilities.

- When creating Bayesian networks, both parametric learning, for estimating the probabilities, and structural learning, for building the network structure, are used.

- In Python, for example, the pgmpy module can be used to build a Bayesian network and also to calculate conclusions.

- The advantages of Bayesian networks lie in their flexibility and the transparency of how they arrive at decisions.

What is Grid Search?

Optimize your machine learning models with Grid Search. Explore hyperparameter tuning using Python with the Iris dataset.

What is the Learning Rate?

Unlock the Power of Learning Rates in Machine Learning: Dive into Strategies, Optimization, and Fine-Tuning for Better Models.

What is Random Search?

Optimize Machine Learning Models: Learn how Random Search fine-tunes hyperparameters effectively.

What is the Lasso Regression?

Explore Lasso regression: a powerful tool for predictive modeling and feature selection in data science. Learn its applications and benefits.

What is the Omitted Variable Bias?

Understanding Omitted Variable Bias: Causes, Consequences, and Prevention in Research." Learn how to avoid this common pitfall.

What is the Adam Optimizer?

Unlock the Potential of Adam Optimizer: Get to know the basucs, the algorithm and how to implement it in Python.

Other Articles on the Topic of Bayesian Networks

Different Python libraries can be used for Bayesian Networks. Please find the documentation of pgmpy here.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.