The data lake stores large amounts of data from different sources in their raw, i.e. unprocessed, format in order to make them usable for big data analyses. This data can be structured, semi-structured, or unstructured. The information is stored there until it is needed for an analytical evaluation.

Why do companies use a data lake?

The data stored in a data lake can be structured, semi-structured, or unstructured. Semi-structured and unstructured formats in particular are a poor fit for relational databases, which form the basis of many data warehouses.

In addition, relational databases are difficult to scale horizontally, which means that storing data in a data warehouse becomes very expensive beyond a certain volume. Horizontal scalability means that the system can be distributed across several computers so that the load does not rest on a single machine. The advantage is that in peak situations, e.g. with many simultaneous queries, additional machines can simply be added temporarily as needed.

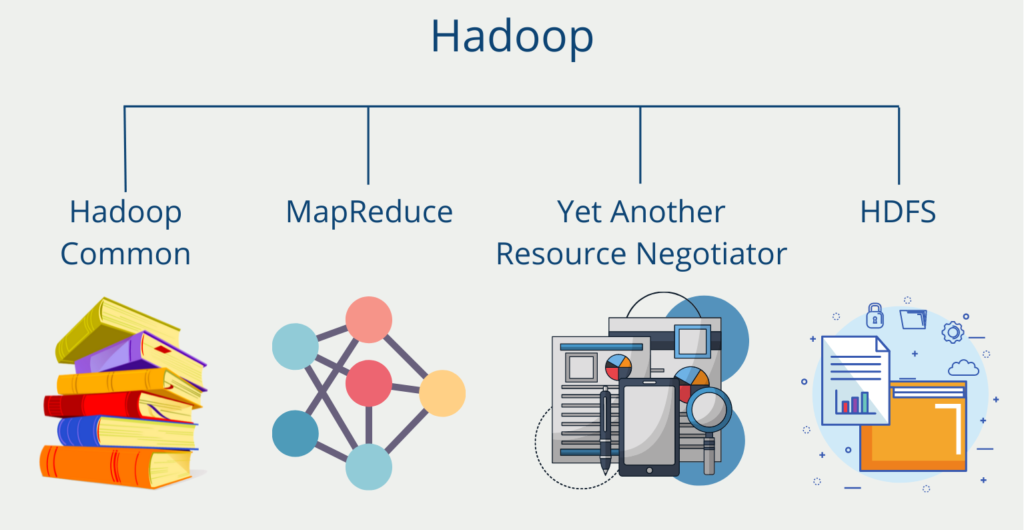

Classic relational databases are built around strict consistency guarantees, which makes it hard to divide a dataset among many computers without compromising data consistency. For this reason, storing large amounts of data in a data warehouse is very expensive. Most systems used for the data lake, such as NoSQL databases or Hadoop, are horizontally scalable and can therefore be scaled more easily. These are the main reasons why the data lake, alongside the data warehouse, has become one of the cornerstones of many companies' data architecture.
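To make horizontal scalability more concrete, the following Python sketch distributes records across a fixed number of hypothetical nodes by hashing each record's key. It is a simplified illustration that assumes a fixed cluster size; it is not how Hadoop or any specific NoSQL database actually places data, and the names `node_for_key` and `partition` are made up for this example.

```python
import hashlib
from collections import defaultdict


def node_for_key(key: str, num_nodes: int) -> int:
    """Map a record key to one of num_nodes machines using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_nodes


def partition(records, num_nodes):
    """Group records by the node that would store them."""
    layout = defaultdict(list)
    for record in records:
        layout[node_for_key(record["id"], num_nodes)].append(record)
    return dict(layout)


records = [{"id": f"sensor-{i}", "value": i * 1.5} for i in range(10)]
layout = partition(records, num_nodes=3)
for node, items in sorted(layout.items()):
    print(f"node {node}: {len(items)} records")
```

Because each node only holds part of the data, more nodes can be added as the volume grows. Plain modulo hashing, however, reassigns most keys when the number of nodes changes, which is one reason production systems use more elaborate placement and replication schemes.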

How is the architecture built?

The exact implementation of a data lake varies from company to company. However, there are some basic principles that should apply to the data architecture of the data lake:

- All data can be included: in principle, all information from source systems can be loaded into the data lake.

- The data does not have to be processed first, but can be loaded and stored in its original form.

- The data is only processed and prepared when a specific use case places requirements on it. This approach is also called schema-on-read.
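A minimal Python sketch of the schema-on-read principle: records are written to a raw file exactly as they arrive, and types, defaults, and column selection are only applied in the reading function of a particular use case. The file name `orders.jsonl`, its fields, and `read_orders` are hypothetical; a real data lake would typically write to object storage or HDFS rather than a local temporary directory.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical raw zone: records are stored exactly as the source delivers them.
raw_file = Path(tempfile.mkdtemp()) / "orders.jsonl"

incoming = [
    '{"order_id": "A1", "amount": "19.99", "country": "DE"}',
    '{"order_id": "A2", "amount": 5, "note": "gift"}',  # different shape, still stored
    '{"order_id": "A3"}',                                 # missing fields, still stored
]
with raw_file.open("w", encoding="utf-8") as f:
    for line in incoming:
        f.write(line + "\n")  # no validation or transformation on write


def read_orders(path):
    """Apply the schema of this use case only while reading."""
    for line in path.read_text(encoding="utf-8").splitlines():
        raw = json.loads(line)
        yield {
            "order_id": str(raw["order_id"]),
            "amount": float(raw.get("amount", 0.0)),  # cast and default at read time
            "country": raw.get("country", "unknown"),
        }


orders = list(read_orders(raw_file))
print(orders)
```

A different use case could read the same raw file with its own function and keep, for example, the `note` field that this reader ignores.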

Regardless of the system used, a few basic points should also be considered when storing data:

- Common folder structure with uniform naming conventions so that data can be found quickly and easily.

- Creation of a data catalog that names the origin of the files and briefly explains the individual data.

- Data screening tools that show at a glance how good the data quality is, for example whether there are any missing values.

- Standardized data access, so that authorizations can be assigned and it is clear who has access to the data.
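The folder structure and data catalog points can be sketched in Python as follows. The path layout `<zone>/<source>/<dataset>/year=YYYY/month=MM/day=DD/<filename>`, the naming rule, the `CatalogEntry` fields, and all example values are hypothetical; they show one possible convention, not a standard every data lake follows.

```python
import re
from dataclasses import dataclass
from datetime import date
from pathlib import PurePosixPath

# Hypothetical convention: lowercase names made of letters, digits and underscores.
NAME_PATTERN = re.compile(r"^[a-z0-9_]+$")


def lake_path(zone: str, source: str, dataset: str, day: date, filename: str) -> PurePosixPath:
    """Build <zone>/<source>/<dataset>/year=YYYY/month=MM/day=DD/<filename>."""
    for name in (zone, source, dataset):
        if not NAME_PATTERN.match(name):
            raise ValueError(f"{name!r} violates the naming convention")
    return PurePosixPath(
        zone, source, dataset,
        f"year={day.year:04d}", f"month={day.month:02d}", f"day={day.day:02d}",
        filename,
    )


@dataclass
class CatalogEntry:
    path: str
    source_system: str  # where the file originally came from
    description: str    # short explanation of the content
    owner: str          # team that decides on access requests


catalog: dict = {}


def register(entry: CatalogEntry) -> None:
    """Add or update a catalog entry, keyed by its storage path."""
    catalog[entry.path] = entry


path = lake_path("raw", "crm", "customers", date(2024, 3, 7), "export.csv")
register(CatalogEntry(str(path), "CRM system", "Daily customer export, one row per customer", "sales_data_team"))
print(path)  # raw/crm/customers/year=2024/month=03/day=07/export.csv
```

Enforcing the naming rule in code rather than in a wiki page means that non-conforming paths are rejected before any file is written.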

How can Data Governance be ensured?

Data governance is a crucial aspect of any data management system, including data lakes. It involves managing the availability, usability, integrity, and security of the data used in an organization. In a data lake, data governance ensures that the data is of high quality and is accessible and usable by those who need it.

Below are some important factors to consider when implementing data governance in a data lake:

- Data classification: Data classification is an essential aspect of data governance. It helps in identifying the sensitivity of the data and assigning policies to it for data processing. It involves understanding the different types of data, such as personal data, confidential data, or public data, and then classifying it accordingly.

- Data quality: Data quality ensures that data is accurate, complete, and relevant to the needs of the business. In a data lake, it is essential to establish data quality rules and implement data cleansing and validation procedures to maintain data quality.

- Data access: Access control ensures that only authorized personnel can access the data. This requires defining access policies and procedures that specify who may read or modify which data.

- Data security: Data security ensures that data is protected from unauthorized access, loss, or corruption. It includes the implementation of security measures such as encryption, access control, and data masking to ensure data security.

- Data protection: Data protection ensures that data is protected from unauthorized disclosure. It involves implementing data protection policies and procedures to ensure that sensitive data is not disclosed to unauthorized individuals.
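Several of the governance factors above can be illustrated with a small Python sketch on an in-memory table: columns are classified by sensitivity, a simple quality check reports missing values, and reads are filtered by role with masking of columns the role may not see. The sensitivity classes, the roles `analyst` and `intern`, and all helper names are hypothetical; encryption and auditing, which a real setup would also need, are left out.

```python
# Data classification: hypothetical sensitivity class per column.
CLASSIFICATION = {
    "customer_id": "internal",
    "email": "personal",
    "country": "public",
    "revenue": "confidential",
}

# Data access: hypothetical roles and the classes they may read unmasked.
POLICY = {
    "analyst": {"public", "internal", "confidential"},
    "intern": {"public"},
}

rows = [
    {"customer_id": "C1", "email": "anna@example.com", "country": "DE", "revenue": 120.0},
    {"customer_id": "C2", "email": None, "country": "FR", "revenue": None},
]


def missing_value_report(rows):
    """Data quality: share of missing (None) values per column."""
    return {col: sum(row[col] is None for row in rows) / len(rows) for col in rows[0]}


def mask(value):
    """Data protection: hide the content but keep the column and its gaps visible."""
    return None if value is None else "***"


def read_as(role, rows):
    """Data security: reject unknown roles, mask columns the role may not see."""
    if role not in POLICY:
        raise PermissionError(f"role {role!r} has no access to this dataset")
    allowed = POLICY[role]
    return [
        {col: value if CLASSIFICATION[col] in allowed else mask(value) for col, value in row.items()}
        for row in rows
    ]


print(missing_value_report(rows))  # {'customer_id': 0.0, 'email': 0.5, 'country': 0.0, 'revenue': 0.5}
print(read_as("analyst", rows)[0])  # email is masked, revenue is visible
print(read_as("intern", rows)[0])   # only country is visible
```

Keeping classification and policy as data rather than hard-coding them in each query makes it possible to change who sees what without touching the analyses themselves.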

In summary, data governance in a data lake is critical to ensure that data is of high quality, accessible, and secure. By implementing data governance policies and procedures, organizations can keep the data in their data lake trustworthy and usable.

Differences from the data warehouse

A data warehouse can be supplemented by a data lake, in which raw data, including unstructured data, is stored at low cost until it is used at a later date. The two concepts differ primarily in the data they store and the way the information is stored.

| Feature | Data Warehouse | Data Lake |
|---|---|---|
| Data | Relational data from production systems or other databases | All data types (structured, semi-structured, unstructured) |
| Data schema | Defined either before the data is loaded (schema-on-write) or only during analysis (schema-on-read) | Exclusively at the time of analysis (schema-on-read) |
| Queries | Very fast query results thanks to local storage | Compute and storage are decoupled; fast query results on inexpensive storage |
| Data quality | Pre-processed, unified data from different sources; single source of truth | Raw data, both processed and unprocessed |
| Applications | Business intelligence and graphical preparation of data | Artificial intelligence, analytics, business intelligence, big data |
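The data schema row of the table can be illustrated with a minimal Python sketch: a warehouse-style loader checks records against a schema declared up front (schema-on-write), while a lake-style loader stores every record unchanged and leaves interpretation to the reader (schema-on-read). The schema and function names are hypothetical, and real warehouses enforce schemas through table definitions rather than a Python function.

```python
# Schema declared up front, as a warehouse table would (hypothetical example).
SCHEMA = {"order_id": str, "amount": float}

warehouse_table = []
lake_raw = []


def load_into_warehouse(record):
    """Schema-on-write: validate and convert before storing; reject non-conforming records."""
    if set(record) != set(SCHEMA):
        raise ValueError(f"columns {sorted(record)} do not match {sorted(SCHEMA)}")
    warehouse_table.append({col: typ(record[col]) for col, typ in SCHEMA.items()})


def load_into_lake(record):
    """Schema-on-read: store whatever arrives; interpretation happens later."""
    lake_raw.append(dict(record))


incoming = [
    {"order_id": "A1", "amount": "19.99"},
    {"order_id": "A2", "amount": 5, "coupon": "SPRING"},  # extra column
]

rejected = []
for rec in incoming:
    load_into_lake(rec)
    try:
        load_into_warehouse(rec)
    except ValueError as err:
        rejected.append((rec["order_id"], str(err)))

print(len(lake_raw), len(warehouse_table), [r[0] for r in rejected])  # 2 1 ['A2']
```

The warehouse ends up with clean, typed rows but loses the record with the unexpected column, whereas the lake keeps everything and shifts the cleaning effort to the point of analysis.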

Which applications use Data Lakes?

Data Lakes are becoming increasingly popular because they provide an efficient and cost-effective way to store, process and analyze large amounts of data. Some of the most common use cases for Data Lakes include:

- Big Data analytics: Data lakes enable organizations to store and analyze large amounts of data from multiple sources, allowing data analysts to gain valuable insights that can be used to make data-driven decisions.

- Internet of Things (IoT): With the increasing use of IoT devices, data lakes provide a scalable solution for storing and analyzing the large amounts of data generated by these devices. This can help companies identify patterns and anomalies, optimize processes, and improve the customer experience.

- Machine learning and artificial intelligence: Data lakes provide a centralized storage location for big data, making it easier to train machine learning and AI models. By combining different data sources and types, organizations can create more accurate models that can be used to predict outcomes, identify trends, and make recommendations.

- Regulatory compliance: Data lakes can be used to store and manage data that is subject to regulatory requirements such as GDPR, HIPAA, or SOX. By providing secure access controls and data management policies, data lakes help organizations ensure compliance with these regulations.

- Data archiving and backup: Data Lakes can be used as a long-term storage solution for data that is no longer actively used. This allows organizations to free up space in their primary storage systems while ensuring that data remains accessible when needed for audits or other purposes.

Data Lakehouse vs. Data Lake

While data lakehouses and data lakes have some similarities, they differ fundamentally in their approach to data management. A data lake is a central repository where structured, semi-structured, and unstructured data is stored in its raw form. The data is not processed or transformed, and there is no defined schema or structure. This approach gives organizations the flexibility to store data from multiple sources and formats, but it can be difficult to analyze and derive insights from the data.

In contrast, data lakehouses combine the flexibility and scalability of a data lake with the structure and organization of a data warehouse. Data lakehouses also store data in its raw form, but add a table layer on top that defines and enforces schemas, which makes it easier for companies to analyze and derive insights from their data.

This is what you should take with you

- The data lake refers to a large data store that stores data in raw format from source systems so that it is available for later analysis.

- It can store structured, semi-structured, and unstructured data.

- It differs fundamentally from data warehouses in that it stores unprocessed data and the data is not prepared until there is a specific use case.

- Its primary purpose is data retention: keeping data available until it is needed.



Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.