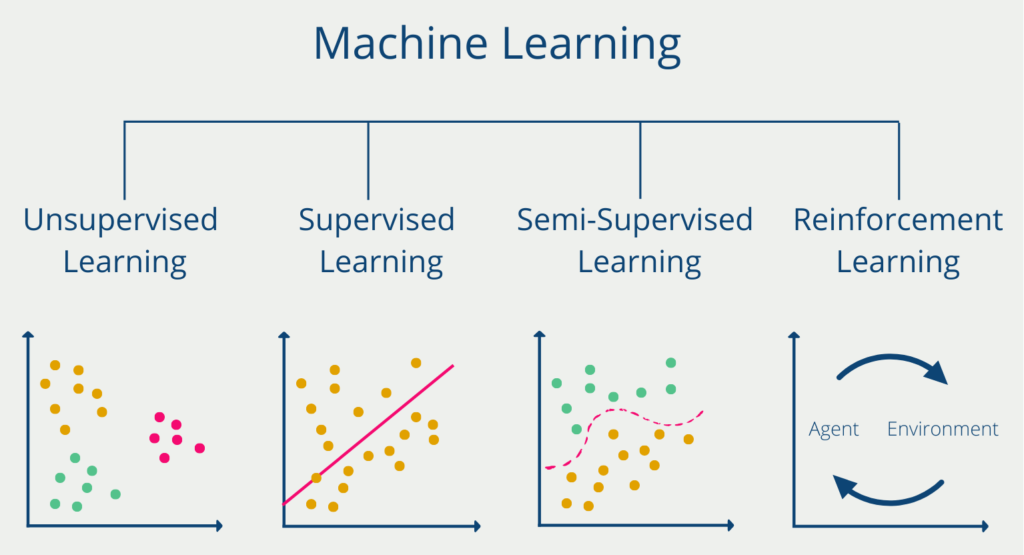

Supervised Learning is a subcategory of Artificial Intelligence and Machine Learning. It is characterized by the fact that the training data already contains a correct label. This allows an algorithm to learn to predict these labels for new data objects. The opposite of this is so-called unsupervised learning, where these labels are not present in the data set and the algorithm must be trained differently.

How does Supervised Learning work?

Supervised learning algorithms use datasets to learn correlations from the inputs and then make the desired prediction. Optimally, the prediction and the label from the dataset are identical. The training dataset contains inputs and already the correct outputs for them. The model can use these to learn from it in several iterations. The accuracy in turn indicates how often the correct output could be predicted from the given inputs. This is calculated using the loss function and the algorithm tries to minimize it until a satisfactory result is achieved.

You can think of it as a person who wants to learn English and can already speak German. With a German-English dictionary or a vocabulary book, the person can learn relatively easily on her own by covering the English column and then trying to “predict” the English word from the German word. She will repeat this training until she can correctly predict the English words a sufficient number of times. The person can measure her progress by counting the words she has translated incorrectly and put them in proportion to all the words she has translated. The person will try to minimize this ratio over time until she can correctly translate all German words into English.

Supervised learning can be divided into two broad categories:

- Classification is used to assign new data objects to one or more predefined categories. The model tries to recognize correlations from the inputs that speak for the assignment to a category. An example of this is images that are to be recognized and then assigned to a class. The model can then predict for an image, for example, whether a dog can be seen in it or not.

- Regressions explain the relationship between inputs, called independent variables, and outputs called dependent variables. For example, if we want to predict the sales of a company and we have the marketing activity and the average price of the previous year, the regression can provide information about the influence of marketing efforts on sales.

What are the applications of Supervised Learning?

There are a variety of business applications that can benefit from supervised learning algorithms. We have briefly summarized the most popular ones below:

- Object recognition in images: As mentioned earlier, supervised learning models can be used to recognize objects in images or assign images to a class. Companies use this feature, for example, in autonomous driving to recognize objects to which the car should react.

- Prediction: If companies are able to predict future scenarios or states very accurately, they can weigh different decision options well against each other and choose the best one. For example, high-quality regression analysis for expected sales in the next year can be used to decide how much budget to allocate to marketing.

- Customer sentiment analysis: Through the Internet, customers have many channels to publish their reviews of the brand or a product public. Therefore, companies need to keep track of whether customers are mostly satisfied or not. With a few reviews, which are classified as good or bad, efficient models can be trained, which can then automatically classify a large number of comments.

- Spam detection: In many mail programs there is the possibility to mark concrete emails as spam. This data is used to train machine learning models that directly mark future emails as spam so that the end-user does not even see them.

What are the problems with Supervised Learning?

The good results that supervised learning models achieve in many cases unfortunately also have some disadvantages that these algorithms bring with them:

- Labeling training data is in many cases a laborious and expensive process if the categories are not yet available. For example, there are few images for which it is categorized whether there is a dog in them or not. This has to be done manually first.

- Training supervised learning models can be very time-consuming.

- Human errors or discriminations are learned as well. So if a training dataset for classifying job applicants discriminates against certain social groups, the model will most likely continue to do so.

What is the impact of model selection and model tuning in Supervised Learning?

Model selection and tuning are critical steps in supervised learning that can greatly impact the performance of the model. In this section, we will discuss the different methods used for selecting and tuning models.

Model Selection

Model selection refers to the process of choosing the best model from a set of candidate models. In supervised learning, the goal is to select a model that generalizes well to new data. There are several methods for model selection, including:

- Cross-validation: Cross-validation is a technique for estimating the performance of a model on unseen data. It involves dividing the data into training and validation sets and iteratively training and evaluating the model on different subsets of the data. The average performance over all iterations is used as the estimate of the model’s performance.

- Grid search: Grid search is a technique for exhaustively searching over a hyperparameter space to find the optimal set of hyperparameters for a model. Hyperparameters are parameters that are not learned from the data but are set by the user, such as the learning rate, regularization parameter, or number of hidden layers in a neural network.

- Bayesian optimization: Bayesian optimization is a technique for finding the optimal set of hyperparameters by building a probabilistic model of the objective function and using it to guide the search for the optimal hyperparameters.

Model Tuning

Model tuning refers to the process of adjusting the hyperparameters of a model to improve its performance. This is typically done after model selection, as the selected model may not be the best possible model for the task at hand. There are several methods for model tuning, including:

- Random search: Random search is a technique for searching over a hyperparameter space by randomly sampling hyperparameters from a predefined distribution.

- Gradient-based optimization: Gradient-based optimization is a technique for optimizing the hyperparameters of a model by computing the gradient of the objective function with respect to the hyperparameters and updating the hyperparameters in the direction of the gradient.

- Genetic algorithms: Genetic algorithms are a family of optimization algorithms inspired by the process of natural selection. They involve creating a population of candidate solutions and iteratively selecting, mutating, and recombining them to generate a new population of better solutions.

In practice, a combination of these methods may be used for model selection and tuning, depending on the size of the hyperparameter space, the amount of available computational resources, and the specific requirements of the task at hand. It is important to keep in mind that model selection and tuning are iterative processes and require careful experimentation and evaluation to find the best model for a given task.

Supervised and Unsupervised Machine Learning in Comparison

Let’s say we want to teach a child a new language, for example, English. If we do this according to the principle of supervised learning, we simply give him a dictionary with the English words and the translation into his native language, for example, German. The child will find it relatively easy to start learning and will probably be able to progress very quickly by memorizing the translations. Beyond that, however, he will have problems reading and understanding texts in English because he has only learned German-English translations and not the grammatical structure of sentences in English.

According to the principle of unsupervised learning, the scenario would look completely different. We would simply present the child with five English books, for example, and he would have to learn everything else on his own. This is, of course, a much more complex task. With the help of the “data,” the child could, for example, recognize that the word “I” occurs relatively frequently in texts and in many cases also appears at the beginning of a sentence, and draw conclusions from this.

This example also illustrates the differences between supervised and unsupervised learning. Supervised learning is in many cases a simpler algorithm and therefore usually has shorter training times. However, the model only learns contexts that are explicitly present in the training data set and were given as input to the model. The child learning English, for example, will be able to translate individual German words into English relatively well, but will not have learned to read and understand English texts.

Unsupervised learning, on the other hand, faces a much more complex task, since it must recognize and learn structures independently. As a result, the training time and effort are also higher. The advantage, however, is that the trained model also recognizes contexts that were not explicitly taught to it. The child who has taught himself the English language with the help of five English novels can possibly read English texts, translate individual words into German and also understand English grammar.

This is what you should take with you

- Supervised learning is a subcategory of artificial intelligence and describes models that are trained on data sets that already contain a correct output label.

- Supervised learning algorithms can be divided into classification and regression models.

- Companies use these models for a wide variety of applications, such as spam detection or object recognition in images.

- Supervised learning is not without problems, as labeling data sets is expensive and can contain human errors.

What is a Boltzmann Machine?

Unlocking the Power of Boltzmann Machines: From Theory to Applications in Deep Learning. Explore their role in AI.

What is the Gini Impurity?

Explore Gini impurity: A crucial metric shaping decision trees in machine learning.

What is the Hessian Matrix?

Explore the Hessian matrix: its math, applications in optimization & machine learning, and real-world significance.

What is Early Stopping?

Master the art of Early Stopping: Prevent overfitting, save resources, and optimize your machine learning models.

What is RMSprop?

Master RMSprop optimization for neural networks. Explore RMSprop, math, applications, and hyperparameters in deep learning.

What is the Conjugate Gradient?

Explore Conjugate Gradient: Algorithm Description, Variants, Applications and Limitations.

Other Articles on the Topic of Supervised Learning

- IBM has written an interesting article on the topic of supervised learning, which also briefly describes concrete supervised learning algorithms.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.