Data augmentation refers to a process to increase the training data set by creating new but realistic data. For this purpose, various techniques are used to generate artificial data points from existing ones.

What is Data Augmentation?

In many use cases, the problem is that the data set is simply not large enough for deep learning algorithms. However, especially in the area of supervised learning, collecting new data points is not only expensive but may not even be possible.

However, if we simply create new data sets, we can also quickly run into a problem with overfitting. For this reason, several techniques in the field of data augmentation ensure that the model can learn new relationships from artificially created data points.

In which areas are small data sets a problem?

In recent years, many new Machine Learning and especially deep learning architectures have been introduced that promise exceptional performance in various application fields. However, all these architectures have in common that they have many parameters to be learned. Modern neural networks in image or text processing quickly have several million parameters. However, if the data set has only a few hundred data points, these are simply not enough to train the network sufficiently and therefore lead to overfitting. This means that the model merely learns the data set by heart and thus generalizes poorly, delivering only poor results for new data.

However, especially in these areas, i.e. image and text processing, it is difficult and expensive to obtain large data sets. For example, there are comparatively few images that have concrete labels, such as whether there is a dog or a cat in them. In addition, data augmentation can also improve the performance of the model, even if the size of the dataset is not a problem. This is because by changing the data set, the model becomes more robust and also learns from data that differs in structure from the actual data set.

What is the purpose of Data Augmentation?

Data augmentation is a central building block in machine learning and data science. The aim is to increase the size of the data set by adding slightly modified data points and also to improve the performance of the model.

In many areas, large and, above all, meaningful data sets are difficult to find or are expensive to create yourself. With the help of data augmentation, existing data sets that might otherwise have been too small for certain model architectures can be expanded.

In addition, data augmentation offers the possibility of making the training of the model more varied and thus achieving better generalization. The model learns to adapt to different circumstances and to distinguish between different scenarios. In image processing, for example, it can be useful to add images in black and white or from different angles to the data set.

Data augmentation can also help to prevent overfitting of the model. By adding new perspectives and slightly altered data points, the model can be prevented from simply learning the noise in the data. Data augmentation can also be used to compensate for class imbalances.

Finally, another reason for data augmentation is the creation of more realistic or diverse training data. Costs can also be saved as fewer data points with a specific annotation are required.

What methods are there to change data?

Depending on the application area, there are different methods to create new data points and thereby artificially extend the data set. For this purpose, we consider the areas of image, text, and audio processing.

Image Processing

There are various use cases in which Machine Learning models receive images as input, for example for image classification or the creation of new images. The data sets in this area are usually relatively small and can only be expanded with great effort.

Therefore, in the field of data augmentation, there are many ways to increase a small image dataset many times over. When working with images, these methods are also particularly effective as they add new views to the model, making it more robust. The most common methods in this area include:

- Geometric changes: A given image can be rotated, flipped, or cut to create a new data point from a given one.

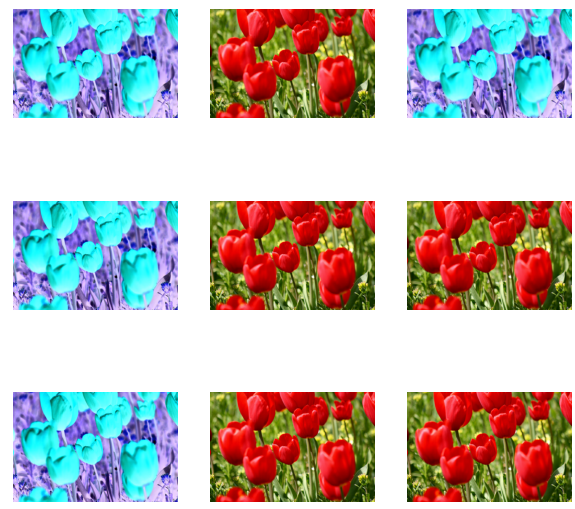

- Color changes: By changing the brightness or colors (e.g. black and white) a given image can be changed without changing the label, e.g. “there is a dog in the image”.

- Resolution change: Sharp images can be selectively blurred to train a robust model that can reliably perform the task even with worse input data.

- Other methods: In addition, there are other methods for modifying images that are significantly more involved. For example, random parts of the image can be deleted or multiple images with the same label can be mixed.

Text Processing

While there is a large amount of text that we can also generate ourselves via scraping websites, for example, these are usually not labeled and thus cannot be used for supervised learning. Again, there are some easier and harder methods to create new data points. These include:

- Translation: Translating a sentence into another language and then translating it back into the original language yields new sentences and words, but still the same content.

- Reordering sentences: The arrangement of sentences in a paragraph can be changed without affecting the content.

- Replacement of words: Words can also be selectively replaced with their synonyms to teach the model a broad vocabulary and thus become more robust.

Audio Processing

Data augmentation can also be applied to the processing of audio files. In fact, in this field, there are similar problems as in text processing, since data for supervised learning is very rare. Therefore, the following techniques for modifying data sets have become widely accepted:

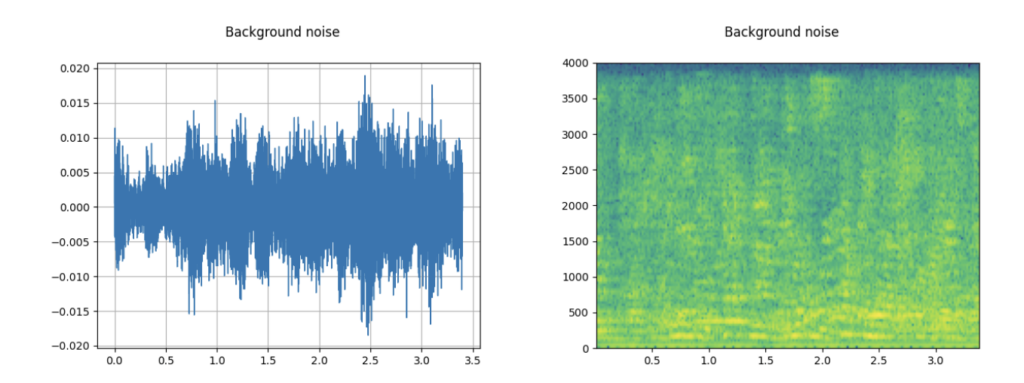

- Adding noise: By incorporating noise in the form of interfering sounds, for example, data points can be multiplied and the data set thus increased.

- Changing the speed: By increasing or decreasing the playback speed, the machine learning models become more robust and can also handle faster-spoken passages well.

What are the Advantages and Disadvantages of Data Augmentation?

Modification of data sets in the field of Data Augmentation can have advantages and disadvantages in the application.

On the one hand, high-performance models are getting bigger and bigger these days and more parameters have to be trained. This quickly leads to the risk that the data set is too small and the model is overfitted, i.e. it learns the properties of the training data set by heart and reacts poorly to new data. This problem can be solved by data augmentation. Furthermore, in various use cases, large datasets, especially in supervised learning, can only be obtained with high resource input. Thus, artificial augmentation of the data set is advantageous.

Apart from the data set size, data augmentation often leads to more robust models that can still deliver good results even with unclean data, for example with noise or poor quality. In reality, data quality issues can sometimes occur, so robust models are always beneficial.

On the other hand, data augmentation means another step in training a model. This can cost not only time but also resources if memory-intensive data, such as images or videos, have to be processed. In addition, biases are inherited from the old data set, which makes ensuring a fair data set all the more important.

Which libraries and tools can be used for Data Augmentation?

There is a wide range of libraries and tools that can be used for data augmentation. These are specialized for certain data types and areas and offer predefined transformations that can be used quickly and easily. Here is a collection of some popular libraries in this area:

Augmentor:

- Augmentor is a library in Python that can be used for data augmentation in the field of image processing. It offers simple options for rotating, mirroring, or resizing images.

- These functionalities can be queried using an API. In addition, large amounts of data can be processed in small batches and several transformations can be combined.

- Augmentor is particularly popular in the field of computer vision.

ImageDataGenerator (Keras):

- When working in Python with the Keras deep learning platform, the “ImageDataGenerator” class is a popular tool for data augmentation of images.

- It offers similar functionalities to Augmentor and can, for example, rotate images, zoom into certain areas or mirror them.

- The main advantage of this tool is the seamless integration into Keras, which requires no additional import or restructuring of the data.

NLTK (Natural Language Toolkit):

- NLTK is a Python library used in language processing to adapt texts accordingly.

- The central functionalities include the exchange of synonyms or the reformulation of texts.

OpenCV:

- OpenCV is a Python library used in the field of computer vision.

- It offers a further option for processing and enhancing images. The functionalities are suitable for both simple and more advanced applications.

TensorFlow Data Augmentation Layers:

- In addition to the Keras API, TensorFlow also offers the option of including data augmentation in model training.

- In contrast to Keras, the so-called data augmentation layer can be added directly to the model.

- This neural network layer enables data augmentation in real-time during model training. This significantly reduces the effort required by the programmer for data pre-processing.

Librosa:

- This Python library is used for data augmentation in the field of audio and music processing.

- Librosa offers various techniques, such as time stretching, pitch blending, or the option of adding noise to music.

- This library can be used to train varied data sets in the field of speech recognition and audio classification.

User-defined scripts and pipelines:

- Depending on the requirements and the specific data, data augmentation may also require the creation of custom scripts and pipelines.

- Different libraries can also be combined here, for example, if texts in the field of natural language processing are translated into another language and back-translated to perform data augmentation.

- Custom scripts make it possible to adapt the process to the specific requirements of the data set.

Various libraries and tools are available in Python that can help with data augmentation. These simplify the process considerably and make it possible to fall back on predefined functions.

This is what you should take with you

- Data augmentation is a method of increasing the size of a data set by adding modified data points again.

- This can increase the size of a dataset and make the trained model more robust as it can handle different variations of the dataset.

- Data augmentation can be applied in image, text, or even audio processing.

What is the Univariate Analysis?

Master Univariate Analysis: Dive Deep into Data with Visualization, and Python - Learn from In-Depth Examples and Hands-On Code.

What is OpenAPI?

Explore OpenAPI: A Comprehensive Guide to Building and Consuming RESTful APIs. Learn How to Design, Document, and Test APIs.

What is Data Governance?

Ensure the quality, availability, and integrity of your organization's data through effective data governance. Learn more here.

What is Data Quality?

Ensuring Data Quality: Importance, Challenges, and Best Practices. Learn how to maintain high-quality data to drive better business decisions.

What is Data Imputation?

Impute missing values with data imputation techniques. Optimize data quality and learn more about the techniques and importance.

What is Outlier Detection?

Discover hidden anomalies in your data with advanced outlier detection techniques. Improve decision-making and uncover valuable insights.

Other Articles on the Topic of Data Augmentation

A detailed tutorial on Data Augmentation in TensorFlow can be found here.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.