The Generative Pretrained Transformer 3, GPT-3 for short, is a Deep Learning model from the field of Natural Language Processing. Among other things, it can compose texts independently, conduct dialogs, and derive programming code from text descriptions. Like its predecessors, the third version of the model was trained and made available by OpenAI.

What is a Generative Pretrained Transformer?

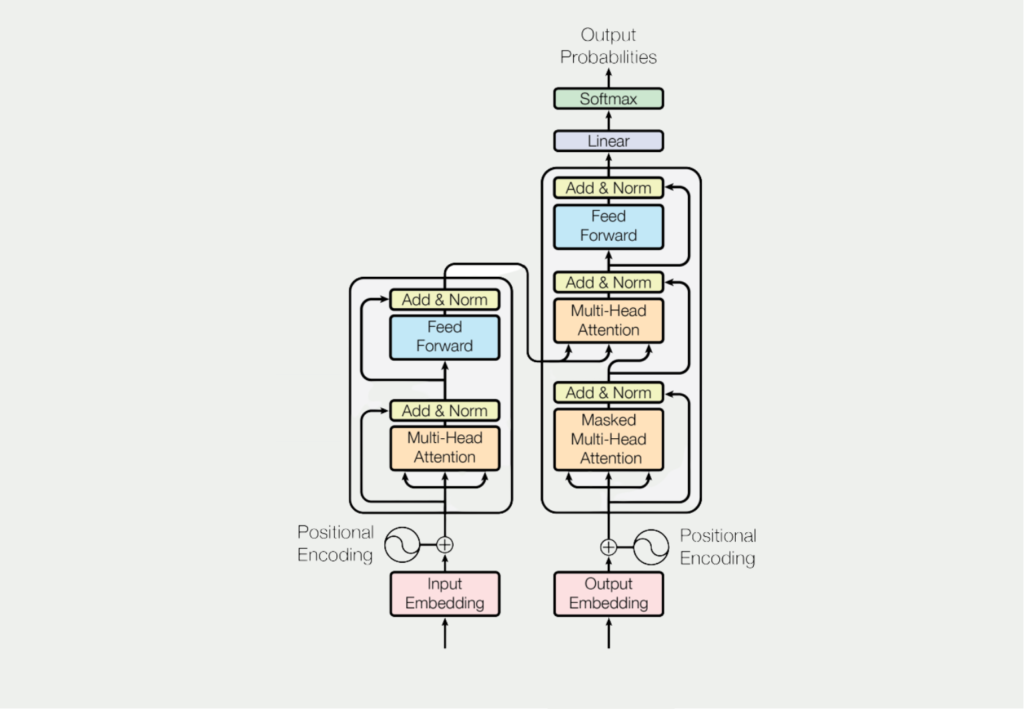

GPT (Generative Pre-trained Transformer) is a language model that uses Deep Learning to generate human-like natural language. The model is pre-trained on a large corpus of text data, such as Wikipedia or web pages, to learn the patterns and structures of the language. Pre-training consists of training a transformer neural network to predict the next word in a sequence of words. Strictly speaking, it predicts the next token, which can be a whole word or a word fragment.
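The following minimal Python sketch makes the pre-training objective concrete. It shows how a single sentence is turned into next-word prediction examples. It then measures the error of one prediction with the cross-entropy loss that is commonly used for language models. This is a simplification. Real GPT models work on subword tokens rather than whole words, and the probability table below is made up for illustration rather than produced by a model.

```python
import math

def next_word_pairs(words):
    """Turn a word sequence into (context, target) pairs for next-word prediction."""
    # At every position i, the model sees words[:i] and has to predict words[i].
    return [(words[:i], words[i]) for i in range(1, len(words))]

def cross_entropy(predicted_probs, target):
    """Loss for one prediction: negative log-probability of the true next word."""
    # A tiny floor avoids math.log(0) if the model gave the target zero probability.
    return -math.log(max(predicted_probs.get(target, 0.0), 1e-12))

sentence = "the cat sat on the mat".split()
for context, target in next_word_pairs(sentence):
    print(context, "->", target)

# Hypothetical model output for the context ["the", "cat"]:
probs = {"sat": 0.5, "ran": 0.3, "slept": 0.2}
print(round(cross_entropy(probs, "sat"), 4))
```

During training, this loss is averaged over a huge number of such pairs and minimized. The model is therefore pushed to assign high probability to the word that actually comes next.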

When the model receives a prompt, it generates a continuation by predicting a likely next word for the given context and appending it. This step is repeated word by word, and each new word becomes part of the context for the next prediction. In this way, a long sequence of text is generated.
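The generation loop can be sketched with a toy model. In this hedged example, a simple bigram count table stands in for the neural network; `train_bigram` and `generate` are hypothetical helpers. Unlike GPT, this table only looks at the last word instead of the whole context. It also always picks the single most likely word (greedy decoding), whereas real systems often sample from the predicted distribution.

```python
from collections import Counter, defaultdict

def train_bigram(corpus_words):
    """Count which word follows which; a stand-in for a trained language model."""
    follow = defaultdict(Counter)
    for prev, nxt in zip(corpus_words, corpus_words[1:]):
        follow[prev][nxt] += 1
    return follow

def generate(model, prompt, max_new_words=5):
    """Greedy autoregressive decoding: append the most likely next word, then repeat."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:  # word never seen in training: nothing to predict
            break
        next_word, _ = candidates.most_common(1)[0]  # ties: first word encountered wins
        words.append(next_word)  # the new word becomes part of the context
    return " ".join(words)

corpus = "the cat sat on the mat and the cat slept on the sofa".split()
model = train_bigram(corpus)
print(generate(model, "the cat", max_new_words=4))
```

The toy model quickly starts repeating itself because it only looks at one word of context. Attending to the entire preceding text, as GPT does, is what allows long continuations to stay coherent.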

GPT uses an attention mechanism that allows the model to focus on the most important parts of the input sequence when generating the output. This helps the model generate more coherent and meaningful responses.
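Below is a minimal sketch of scaled dot-product attention, the form of attention used in transformers. It is written in plain Python so it runs without extra libraries. Each query is compared with every key, the scores are turned into weights with a softmax, and the output is the correspondingly weighted average of the values. The tiny vectors are invented for illustration. In a real model, queries, keys, and values are learned projections of the token embeddings, and many attention heads run in parallel.

```python
import math

def softmax(xs):
    m = max(xs)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted average of the values."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance score of every key for this query, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Blend the value vectors according to the attention weights.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs, all_weights

keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [[4.0, 0.0]]  # "looks for" the first dimension
out, weights = attention(query, keys, values)
print([round(w, 3) for w in weights[0]], [round(x, 2) for x in out[0]])
```

The query matches the first and third keys equally well, so almost all of the attention goes to those two positions. The second position, which is irrelevant to this query, receives only a small weight.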

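GPT models use a decoder-only variant of the transformer architecture. The original transformer pairs an encoder stack with a decoder stack, but GPT consists only of a stack of decoder layers. Their self-attention is masked (causal), so each position can only attend to the words before it. This is exactly what next-word prediction requires, because the model must not see the word it is supposed to predict.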
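The causal masking that distinguishes a GPT-style decoder can be illustrated as follows. This sketch assumes that a square matrix of raw attention scores is already given; here they are hypothetically all equal. Future positions are masked before the softmax. As a result, each position only distributes its attention over itself and earlier positions.

```python
import math

def causal_attention_weights(scores):
    """Apply a causal mask to a square score matrix, then softmax each row.

    scores[i][j] says how strongly position i attends to position j.
    Position i may only look at positions 0..i, never at future tokens.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        # Future positions get -inf, which becomes exactly 0 after exp().
        row = [scores[i][j] if j <= i else float("-inf") for j in range(n)]
        m = max(row)  # always finite, because position 0 is never masked
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

scores = [[0.0] * 4 for _ in range(4)]  # equal raw scores everywhere
for row in causal_attention_weights(scores):
    print([round(w, 2) for w in row])
# [1.0, 0.0, 0.0, 0.0]
# [0.5, 0.5, 0.0, 0.0]
# [0.33, 0.33, 0.33, 0.0]
# [0.25, 0.25, 0.25, 0.25]
```

The resulting weight matrix is lower-triangular. Because of this, a whole training sequence can be processed in parallel while every position still predicts its next word only from the words before it.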

GPT-3 is a very large and powerful language model with up to 175 billion parameters, more than 100 times as many as its predecessor GPT-2 (1.5 billion). This allows it to generate complex and sophisticated text, which has led to a number of potential applications, from chatbots to automated writing assistants.
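As a plausibility check for the 175 billion figure, the parameter count of a decoder-only transformer can be estimated from its configuration. The sketch below uses the common approximation of about 12·d² weights per layer. It plugs in the configuration reported for the largest GPT-3 model in its paper: 96 layers, a model width of 12,288, a vocabulary of 50,257 tokens, and 2,048 positions. Biases and layer-normalization parameters are ignored, so the result is only an estimate. The memory figure assumes 2 bytes (16-bit floats) per weight.

```python
def approx_transformer_params(n_layers, d_model, vocab_size, context_len):
    """Rough parameter count for a decoder-only transformer.

    Per layer: ~4*d^2 for attention (query, key, value and output projections)
    plus ~8*d^2 for the feed-forward block (d -> 4d -> d), i.e. ~12*d^2.
    Biases and layer-norm parameters are ignored as comparatively tiny.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model + context_len * d_model  # token + position embeddings
    return n_layers * per_layer + embeddings

# Configuration reported for the largest GPT-3 model.
total = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)
print(f"{total / 1e9:.1f} billion parameters")  # 174.6 billion parameters
print(f"{total * 2 / 1e9:.0f} GB to store the weights in 16-bit precision")  # 349 GB
```

Almost all parameters sit in the 96 transformer layers; the embeddings contribute well under 1%. The storage estimate also shows why a model of this size cannot run on a single consumer GPU.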

The different generations of GPT models differ less in their basic technical design than in their size and in the data sets on which they were trained. The GPT-3 model, for example, was trained on these data sets:

- Common Crawl contains data from twelve years of web crawling, such as website content, metadata, and extracted text.

- WebText2 contains web pages that were linked in Reddit posts. As a quality filter, a link must have received a Reddit score (karma) of at least 3.

- Books1 and Books2 are two datasets consisting of books available on the Internet.

- Wikipedia Corpus contains English Wikipedia pages on various topics.
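The data sets were not used in proportion to their size. According to the GPT-3 paper, training examples were drawn with fixed sampling weights: roughly 60% from Common Crawl, 22% from WebText2, 8% each from Books1 and Books2, and 3% from Wikipedia. As a result, the smaller, higher-quality sources were seen more often relative to their size. The following Python sketch simulates such a weighted mixture. The weights come from the paper, while the hypothetical `sample_sources` function is only an illustration, not OpenAI's training pipeline.

```python
import random
from collections import Counter

# Sampling weights per source as reported in the GPT-3 paper.
# They add up to about 101% because the paper rounds the percentages.
MIXTURE = {
    "Common Crawl (filtered)": 0.60,
    "WebText2": 0.22,
    "Books1": 0.08,
    "Books2": 0.08,
    "Wikipedia": 0.03,
}

def sample_sources(n, seed=0):
    """Pick the data set that each of n training examples is drawn from."""
    rng = random.Random(seed)
    names = list(MIXTURE)
    # random.choices normalizes the weights, so they need not sum to exactly 1.
    return rng.choices(names, weights=[MIXTURE[k] for k in names], k=n)

n = 10_000
counts = Counter(sample_sources(n))
for name, count in counts.most_common():
    print(f"{name:25s} {count / n:.1%}")
```

Common Crawl is by far the largest source in raw size, yet it receives only about 60% of the samples. Wikipedia is tiny but is sampled several times over during training.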

What can you use it for?

There are various use cases for a GPT-3 model. In addition to creating and continuing texts, it can, for example, generate program code. Here are some example applications that OpenAI lists on its website:

- Question-Answering System: Given a short text, the model can generate appropriate answers to a wide variety of questions about its content.

- Grammar corrections: Grammatically incorrect English sentences can be corrected.

- Summaries: Longer texts can be condensed into short, concise sections. The target reading level can also be specified, so that complicated texts can be summarized in very simple language.

- Conversion of natural language into programming code: The GPT-3 model can turn natural-language descriptions of algorithms into concrete code. Various languages and applications are supported, such as Python or SQL.

- Generate marketing text: From short, simple product descriptions, the model can also generate appealing marketing texts tailored to the product.
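All of these applications are driven by the text prompt the model receives. As a hedged illustration, the hypothetical `build_qa_prompt` function below assembles a prompt for the question-answering use case. The prompt contains an instruction, the source text, optional worked examples (so-called few-shot prompting), and the open question. The exact wording and layout are assumptions, not a format prescribed by OpenAI. The code only builds the text; sending it to the model is not shown.

```python
def build_qa_prompt(context, question, examples=()):
    """Assemble a plain-text prompt for answering a question about a given text.

    `examples` holds optional (question, answer) pairs shown to the model first
    (few-shot prompting); the model is then expected to continue after "A:".
    """
    lines = ["Answer the question using only the text below.", "", f"Text: {context}", ""]
    for q, a in examples:
        lines += [f"Q: {q}", f"A: {a}", ""]
    lines += [f"Q: {question}", "A:"]
    return "\n".join(lines)

prompt = build_qa_prompt(
    context="GPT-3 was trained by OpenAI and has up to 175 billion parameters.",
    question="How many parameters does GPT-3 have?",
    examples=[("Who trained GPT-3?", "OpenAI")],
)
print(prompt)
```

Ending the prompt with an open "A:" exploits the fact that GPT-3 simply continues text. The most natural continuation of this pattern is an answer in the same style as the example.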

What are the weaknesses of a GPT-3 model?

Although the GPT-3 model covers a wide range of tasks and performs very well on them, it has some weaknesses. The two most frequently cited are:

- The model's context window is limited to 2,048 tokens (roughly 1,500 words), and this limit is shared between the input prompt and the generated output. Current research projects are trying to increase this size further.

- The GPT-3 model has no memory between requests. Each request is processed independently of what the model computed before or after it, so any information the model should take into account has to be included in the prompt again.
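Both limitations show up as soon as GPT-3 is used for a dialog. Because the model keeps no state, an application has to send the relevant conversation history with every request. Because of the 2,048-token limit, older parts of that history eventually have to be dropped. The sketch below shows one simple strategy under stated assumptions. Token counts are estimated with the rough rule of thumb of about 0.75 words per token; exact counts require the model's tokenizer, for example OpenAI's `tiktoken` library. The helpers `build_prompt` and `estimate_tokens`, as well as the 256-token reserve for the answer, are hypothetical choices.

```python
MAX_CONTEXT_TOKENS = 2048  # GPT-3 limit, shared by the prompt and the generated answer

def estimate_tokens(text):
    """Rough estimate (assumption): English text averages about 0.75 words per token."""
    return int(len(text.split()) / 0.75) + 1

def build_prompt(history, new_message, reserved_for_answer=256):
    """Rebuild the full prompt for one request from the stored conversation.

    The model itself remembers nothing, so earlier turns are re-sent every time.
    Turns are added from newest to oldest until the token budget is used up.
    """
    budget = MAX_CONTEXT_TOKENS - reserved_for_answer - estimate_tokens(new_message)
    kept = []
    for turn in reversed(history):
        cost = estimate_tokens(turn)
        if cost > budget:
            break  # this turn and everything older no longer fits
        kept.append(turn)
        budget -= cost
    kept.reverse()  # restore chronological order
    return "\n".join(kept + [new_message])

# Usage: 20 long earlier turns, but only the most recent ones fit into the window.
history = [f"User: message {i} " + "word " * 200 for i in range(20)]
prompt = build_prompt(history, "User: What did I say first?")
print(prompt.count("User: message"), "of", len(history), "earlier turns kept")
```

In this example, only the last six turns fit into the window. The model therefore cannot answer the question about the very first message, which illustrates both limitations at once.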

Looking at the use cases from the previous chapter, it is tempting to conclude that this model will replace many human activities in the near future. Although the results in individual cases are already very impressive, the model is still rather far from taking over real tasks or jobs. Taking programming as an example, few programs can be written within 1,500 “words” of output. Even if the code is generated and assembled in several stages, it is rather unlikely that the independently generated building blocks will work together properly.

What are the potential issues with GPT-3?

As an artificial intelligence language model, GPT-3 has the potential to revolutionize several areas, including natural language processing, content creation, and automation. However, its immense capabilities also raise ethical considerations that must be addressed.

One of the main issues is the possibility of GPT-3 being used to create fake news, propaganda, or misinformation. The model can generate very convincing articles or texts, making it difficult to distinguish between real and fake content. This capability could be abused by malicious actors to spread false information and manipulate public opinion.

Another ethical concern is the potential bias of the GPT-3 model. The model is trained on vast amounts of data, much of which may contain implicit biases that could be reinforced in the model's output. These biases could be related to race, gender, religion, or other characteristics and could lead to discrimination against or exclusion of certain groups.

In addition, there is a possibility that GPT-3 could be used to automate certain jobs, which could lead to unemployment and inequality. While automation can increase efficiency and productivity, it could also have negative consequences for society if not properly implemented.

Finally, there is the question of what role AI should play in society and how it should be regulated, including from an ethical perspective. As GPT-3 and other AI models become more widespread, policymakers need to consider how to balance the benefits of these technologies against the potential risks and ensure that they are used ethically and responsibly. This includes developing appropriate legal and regulatory frameworks and promoting transparency and accountability in the development and use of AI models such as GPT-3.

What is ChatGPT?

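ChatGPT is a conversational language model developed by OpenAI, a leading research lab in artificial intelligence. The "GPT" in its name stands for Generative Pre-trained Transformer. ChatGPT was initially fine-tuned from a model in the GPT-3.5 series to hold dialogs, using supervised learning and reinforcement learning from human feedback (RLHF). Like GPT-3, it is based on the Transformer architecture, a type of deep neural network that has shown exceptional performance in NLP tasks such as language translation, text summarization, and question answering.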

ChatGPT is a “generative” model, which means it can generate text on its own by predicting the most probable next word in a sequence. It is “pre-trained” on massive amounts of text data from the internet, such as Wikipedia articles, books, and web pages, to learn the statistical patterns and structures of natural language. This pre-training process allows it to acquire a vast knowledge of the language and the ability to produce coherent and fluent text.

How could ChatGPT change our world?

ChatGPT has the potential to change our world in several ways, as it enables us to process and generate natural language text in new and innovative ways.

- Revolutionizing Communication: It can be used to improve communication in various fields, such as customer service, healthcare, and education. Chatbots powered by ChatGPT can interact with customers, patients, or students in a natural language and provide personalized responses, improving the quality of communication and reducing the workload on human operators.

- Enhancing Accessibility: ChatGPT can also improve accessibility for people with disabilities, such as the visually impaired or those with motor impairments. For instance, it can help write text descriptions of images or videos. Combined with text-to-speech systems, its answers can also be read aloud to people who have difficulty reading.

- Improving Knowledge Management: It can be used to automate knowledge management tasks such as content creation, summarization, and organization. It can be used to summarize long documents, extract key information, and generate concise reports or articles, improving efficiency and productivity in various industries.

- Advancing Research and Development: ChatGPT can also be used to advance research and development in various fields. For instance, it can generate and analyze large volumes of text data, improving the speed and accuracy of natural language processing tasks such as sentiment analysis or language translation.

Overall, ChatGPT has the potential to change our world by revolutionizing communication, enhancing accessibility, improving knowledge management, and advancing research and development in various industries. As the technology continues to evolve and become more advanced, we can expect to see even more innovative applications and use cases for ChatGPT in the future.

This is what you should take with you

- The Generative Pretrained Transformer 3, GPT-3 for short, is a model from OpenAI that is used in the field of Natural Language Processing.

- It can be used, among other things, to convert natural language into programming code, to create summaries that stay faithful to the content of a text, or to build a question-answering system.

- Although the progress in this area is impressive, the context window of 2,048 tokens (about 1,500 words), which is shared by input and output, is still a major weakness.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.