Natural Language Processing is a branch of Computer Science that deals with the understanding and processing of natural language, e.g. texts or voice recordings. The goal is for a machine to be able to communicate with humans in the same way that humans communicate with each other.

What are the Areas of NLP?

Learning a new language is not easy for us humans either and requires a lot of time and perseverance. It is no different when a machine has to learn a natural language. Therefore, several sub-areas have emerged within Natural Language Processing, each covering part of what is necessary for language to be completely understood.

These subdivisions can also be used independently to solve individual tasks:

- Speech Recognition tries to understand recorded speech and convert it into textual information. This makes it easier for downstream algorithms to process it. However, Speech Recognition can also be used on its own, for example, to convert dictations or lectures into text.

- Part-of-Speech Tagging is used to recognize the grammatical structure of a sentence by labeling each word with its part of speech, such as noun or verb.

- Named Entity Recognition tries to find words and phrases within a text that belong to a predefined class. For example, it can mark all phrases in a text section that contain a person’s name or express a time.

- Sentiment Analysis classifies the sentiment of a text into different levels, such as positive, neutral, or negative. This makes it possible, for example, to automatically detect whether a product review is rather positive or rather negative.

- Natural Language Generation is a general group of applications that automatically generate new texts that sound as natural as possible. For example, short product information can be expanded into complete marketing descriptions of the product.
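To make one of these sub-areas more concrete, here is a deliberately simple, lexicon-based sketch of sentiment analysis in Python. The word lists `POSITIVE`, `NEGATIVE`, and `NEGATORS` as well as the function names are hypothetical and chosen only for illustration; practical systems usually learn such signals from labeled data rather than from hand-written lists.

```python
import re

# Hypothetical mini-lexicons, for illustration only.
POSITIVE = {"good", "great", "excellent", "love", "fast", "reliable"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "slow", "broken"}
NEGATORS = {"not", "never", "no"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text: str) -> int:
    """Positive minus negative words; a word directly after a negator is flipped."""
    tokens = tokenize(text)
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)  # +1, -1 or 0
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def classify(text: str) -> str:
    """Map the numeric score to one of three sentiment levels."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify("Great product, fast delivery."))      # positive
print(classify("The battery is not good and slow."))  # negative
```

The negation rule shows why word lists alone quickly reach their limits: "not good" is only handled because of an explicit extra rule, and more complex phrasings would require ever more rules.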

What Algorithms do you use for NLP?

Most basic applications of NLP can be implemented with the Python modules spaCy and NLTK. These libraries provide pre-trained models that can be applied directly to a text, without having to train a custom model first. This makes tasks such as part-of-speech tagging or named entity recognition straightforward in several languages.

The main difference between these two libraries is their orientation. NLTK was originally developed for teaching and research and works like a toolbox: it offers many different algorithms for the same task, from which users can choose. spaCy, on the other hand, is designed for production use and focuses on performance, typically providing one efficient implementation per task that is based on current research.

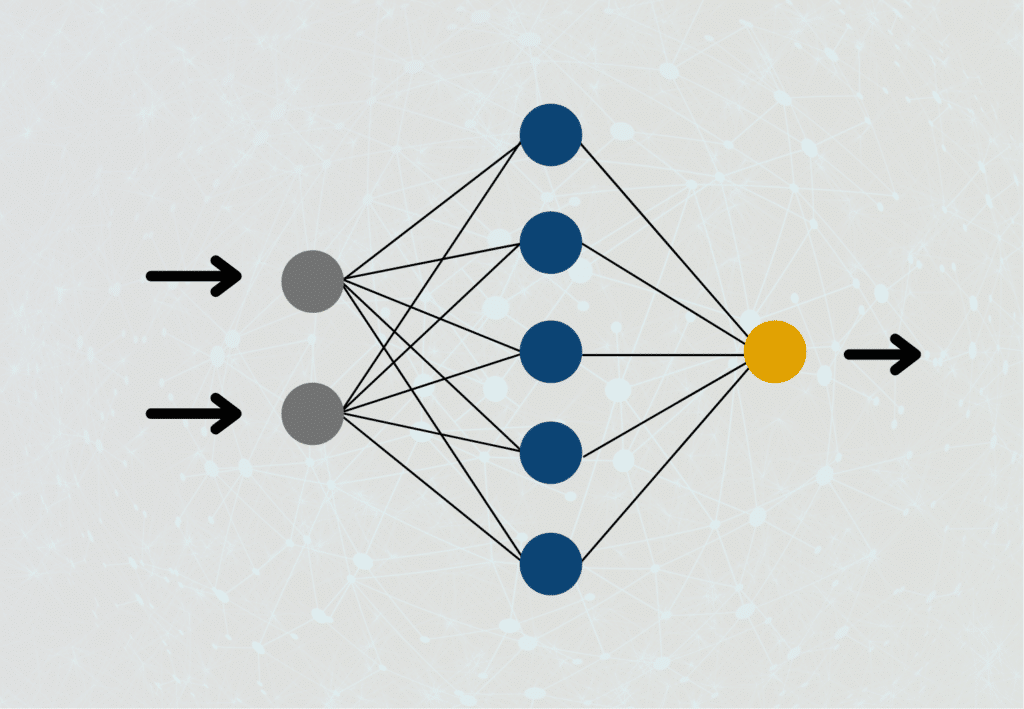

For more extensive and complex applications, however, these options are no longer sufficient, for example, if you want to build your own sentiment analysis. Depending on the use case, general Machine Learning models such as a Convolutional Neural Network (CNN) can still be sufficient for this. The tokenizers from spaCy or NLTK split the text into individual words (tokens), which can then be mapped to numbers that the CNN can work with as input. On today’s computers, such small neural networks can still be trained relatively quickly, so they are worth examining and testing before turning to more complex models.
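The step from text to numbers can be sketched as follows. This is a minimal example under stated assumptions: a simple regular-expression tokenizer stands in for the spaCy or NLTK tokenizers, and the helper names (`build_vocab`, `encode`) as well as the reserved IDs 0 for padding and 1 for unknown words are hypothetical conventions, not part of either library.

```python
import re
from collections import Counter

PAD, UNK = 0, 1  # assumed reserved IDs: padding and unknown words


def tokenize(text: str) -> list[str]:
    # Simple regex tokenizer standing in for spaCy's or NLTK's tokenizers.
    return re.findall(r"\w+", text.lower())


def build_vocab(texts: list[str], min_count: int = 1) -> dict[str, int]:
    """Assign an integer ID to every word seen at least min_count times, most frequent first."""
    counts = Counter(token for text in texts for token in tokenize(text))
    vocab: dict[str, int] = {}
    for word, count in counts.most_common():
        if count >= min_count:
            vocab[word] = len(vocab) + 2  # IDs 0 and 1 are reserved
    return vocab


def encode(text: str, vocab: dict[str, int], max_len: int) -> list[int]:
    """Convert a text into exactly max_len IDs: truncate long texts, pad short ones."""
    ids = [vocab.get(token, UNK) for token in tokenize(text)][:max_len]
    return ids + [PAD] * (max_len - len(ids))


corpus = ["The film was great", "The film was boring", "Great acting"]
vocab = build_vocab(corpus)
print(encode("The acting was great, truly", vocab, max_len=6))
# [2, 7, 4, 5, 1, 0]
```

Padding every text to the same length matters because batched inputs to a neural network usually need a uniform shape. In a typical text CNN, these ID sequences would next pass through an embedding layer that turns each ID into a vector before the convolution is applied.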

However, there are also cases that require so-called transformer models, which are currently state-of-the-art in the field of Natural Language Processing. They are particularly good at taking contextual relationships within a text into account and therefore deliver better results, for example in machine translation or natural language generation. However, these models are very computationally intensive, and training them on ordinary computers can take a very long time.
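The mechanism that lets transformers capture these contextual relationships is self-attention. The following pure-Python sketch shows scaled dot-product attention on a few hypothetical token vectors. To keep it short, it assumes that queries, keys, and values are the token vectors themselves, whereas a real transformer first multiplies them by learned projection matrices and runs several attention heads in parallel.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product self-attention with queries = keys = values = x.

    Returns (outputs, weights); weights[i][j] is how strongly token i attends to token j.
    """
    d = len(x[0])
    weights = []
    for q in x:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights.append(softmax(scores))
    # Each output is the attention-weighted sum of all token vectors.
    outputs = [
        [sum(w * v[c] for w, v in zip(row, x)) for c in range(d)]
        for row in weights
    ]
    return outputs, weights


# Three hypothetical 4-dimensional token embeddings.
tokens = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 0.9, 0.1]]
out, attn = self_attention(tokens)
for row in attn:
    print([round(w, 2) for w in row])
```

Each output vector is a weighted mix of all input vectors, so every token's new representation reflects its context. Computing a score for every pair of tokens is also one reason why these models are so computationally intensive: the effort grows quadratically with the length of the text.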

What are the fields of application?

The range of possible applications for Natural Language Processing is very broad and new ones are added at regular intervals. The most widespread use cases currently include:

- Machine Translation is the automated translation of a text into another language.

- Chatbots are interfaces for automated communication between a human and a machine. They must be able to give meaningful answers to the content of human questions.

- Text Summarization condenses long texts into shorter summaries, so that large amounts of text can be sifted through faster by reading only the summary. The latest models, such as GPT-3, can even produce different summaries at different levels of difficulty.
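Text summarization can be approached in two ways: extractive methods select existing sentences from the text, while abstractive models such as GPT-3 write new text. The sketch below is a simple extractive approach and not how GPT-3 works: it scores each sentence by the average frequency of its non-stop words and keeps the highest-scoring ones. The stop-word list and function names are assumptions made for illustration.

```python
import re
from collections import Counter

# Small, hypothetical stop-word list; spaCy and NLTK ship more complete ones.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in", "it", "that", "for", "on", "with"}


def words(text: str) -> list[str]:
    """Lowercased word tokens without stop words."""
    return [w for w in re.findall(r"\w+", text.lower()) if w not in STOP_WORDS]


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def summarize(text: str, n_sentences: int = 2) -> str:
    """Keep the n highest-scoring sentences, in their original order."""
    sentences = split_sentences(text)
    freq = Counter(words(text))

    def score(sentence: str) -> float:
        tokens = words(sentence)
        # Average (not total) frequency, so long sentences are not favored automatically.
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return " ".join(sentences[i] for i in sorted(ranked[:n_sentences]))


sample = ("NLP models process text. Transformer models dominate NLP today. "
          "The weather was nice. Transformer models need a lot of compute.")
print(summarize(sample, n_sentences=2))
# NLP models process text. Transformer models dominate NLP today.
```

The off-topic sentence about the weather receives the lowest score because its words appear only once in the text, which is the basic intuition behind frequency-based extractive summarization.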

What are the differences between NLU and NLP?

The terms Natural Language Understanding (NLU) and Natural Language Processing (NLP) are often confused or used interchangeably. NLP is the broad branch of Computer Science that deals with both the understanding and the processing of natural language, e.g. texts or voice recordings. NLU, by contrast, refers specifically to the understanding part: extracting the meaning of a text, such as the intent behind a request or the entities and relationships it mentions.

Thus NLU is only one part of NLP, albeit an essential one: it is the starting point that ensures the language has been understood correctly before it is processed further. This also means that the quality of an NLP application depends heavily on how well the text was understood, which is another reason why text comprehension is currently a major focus of research.

What trends are emerging in the field of Natural Language Processing?

The field of natural language processing (NLP) is evolving rapidly, and several trends are likely to shape its direction in the coming years. Here are some of the key ones:

- Multimodal interaction: Multimodal interaction combines several input methods, such as speech and gestures, to make communication between humans and machines more natural and intuitive. In the future, NLP systems are likely to become increasingly multimodal, allowing users to interact with machines in ways that come closer to human conversation.

- Deep Learning: Deep learning is a powerful machine learning technique that has revolutionized the field of NLP in recent years. Deep Learning models can learn to perform complex NLP tasks such as machine translation and sentiment analysis with high accuracy. In the future, Deep Learning is likely to play an even larger role as researchers develop new models and techniques to improve performance and shorten training time.

- Neural Machine Translation: Neural Machine Translation (NMT) is a type of machine translation that uses neural networks to translate text from one language to another. NMT has shown great promise in recent years, outperforming traditional statistical machine translation methods. In the future, NMT is likely to become even more accurate and efficient, enabling more seamless communication between people speaking different languages.

- Conversational AI: Conversational AI uses NLP and other technologies to enable machines to have natural and meaningful conversations with humans. Chatbots and virtual assistants are already widely used in many industries and are likely to become more sophisticated in the future to handle more complex tasks and provide more customized responses.

- Ethical and social considerations: As NLP permeates our daily lives, it is important to consider the ethical and social implications of its use. Issues such as bias, privacy, and security are likely to become even more important in the future, and researchers and practitioners in the field of NLP will need to work together to develop solutions that are fair, transparent, and trustworthy.

These are just a few of the future trends in NLP. As the field evolves, new techniques, tools, and applications will emerge that will change how we interact with language and each other.

What are the ethical concerns in this area?

The increasing use of natural language processing (NLP) technologies has raised a number of ethical concerns that need to be considered by researchers, developers, and policymakers. Here are some of the ethical concerns in the field of NLP:

- Bias: One of the biggest ethical issues in NLP is the potential bias in the data used to train machine learning models. Biased data can lead to biased algorithms and decisions that perpetuate discrimination against certain groups of people.

- Privacy: NLP technologies can collect and analyze large amounts of personal data, raising privacy and data protection concerns. There is a risk that sensitive personal data may be misused or mishandled, with negative consequences for individuals and society as a whole.

- Transparency: NLP algorithms can be complex and difficult to interpret, making it difficult to understand how decisions are made. Lack of transparency can lead to a lack of trust in NLP systems and make it difficult to identify and correct any biases or errors.

- Accountability: As technology becomes more advanced, it becomes more difficult to determine who is responsible for the actions and decisions made by the machines. This raises questions about accountability and liability in the event of errors, accidents, or damage caused by NLP systems.

- Fairness: NLP systems should be designed to be fair and equitable, but there is a risk that they could perpetuate or exacerbate existing social inequalities. It is important to consider the potential impact of NLP systems on different groups of people and to ensure that they do not reinforce discrimination or bias.

These are just some of the ethical concerns in the field of NLP. As NLP technology continues to advance and become more widely used, it is important to address these concerns and develop solutions that prioritize fairness, transparency, and accountability.

This is what you should take with you

- Natural Language Processing is a branch of Computer Science that tries to make natural language understandable and processable for machines.

- The Python modules spaCy and NLTK are the basic building blocks for most applications.

- It is one of the most active topics in machine learning, with new innovations appearing constantly.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.