The bias-variance tradeoff describes a fundamental problem in machine learning: a compromise must be made between a model that is as simple as possible and a highly adaptable model, so that the result still delivers good predictions for new data.

Bias and variance pull in opposite directions in virtually every training run. Before we take a closer look at this problem, we should understand what exactly bias and variance are.

What are Bias and Variance?

Bias describes the error that arises when a model is too simple to recognize the underlying structures in the data. Its predictions then deviate systematically from the true values, leaving a residual error that more training data cannot remove. At the same time, the model should not be made too complex, because that invites overfitting, which in turn means poor generalization to unseen data.

Suppose we train a model to predict the amount of ice cream sold depending on the outside temperature. A bias can arise if a linear relationship is assumed. Say such a model estimates an increase of 0.7 scoops per degree Celsius. This would mean that 3.5 more scoops are consumed for a temperature increase of 5 °C, regardless of whether the temperature rises from 20 °C to 25 °C or from 30 °C to 35 °C. This model is too simple if consumption tends to follow an exponential curve, i.e. ice cream consumption increases significantly more at higher temperatures than at lower ones.

Such a linear model therefore has a clear bias: it is too simple to capture the true relationship between outdoor temperature and ice cream consumption. As a result, its forecasts for ice cream consumption are systematically off, no matter how much data it is trained on.
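The effect can be sketched in a few lines of Python. The exponential curve `true_scoops` and its constants are assumptions made up for this illustration, not measured data. The sketch fits a straight line by ordinary least squares and inspects the residuals.

```python
import math

def true_scoops(temp):
    # Hypothetical "true" relationship, chosen only for illustration.
    return 0.5 * math.exp(0.08 * temp)

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x

temps = list(range(0, 36, 5))            # 0, 5, ..., 35 degrees Celsius
scoops = [true_scoops(t) for t in temps]  # noise-free, so every error is bias

slope, intercept = fit_line(temps, scoops)
residuals = [y - (slope * t + intercept) for t, y in zip(temps, scoops)]

# The line predicts the same increase for every 5-degree step ...
linear_increase = 5 * slope
# ... while the assumed true curve grows faster at higher temperatures.
true_increase_20_25 = true_scoops(25) - true_scoops(20)
true_increase_30_35 = true_scoops(35) - true_scoops(30)

for t, r in zip(temps, residuals):
    print(f"{t:>2} °C  residual {r:+.2f}")
```

Because the data contain no noise, the residuals that remain are pure bias: the line underestimates at both ends of the temperature range and overestimates in the middle.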

The variance, on the other hand, describes how much the predictions of a model fluctuate when the training data changes only slightly. A model with a high variance has fitted the noise in its training sample, so retraining on a slightly different sample yields noticeably different predictions. Such a model also tends to react erratically to small changes in the input. For example, a model with a high variance might predict two scoops at a temperature of 28 °C and three scoops at an outside temperature of 28.5 °C.
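One way to make variance tangible is to retrain two models on many noisy training samples and watch how much their prediction at one fixed temperature moves. The following sketch does this under made-up assumptions: the curve `true_scoops`, the noise level, and the query temperature are all hypothetical. It compares a rigid straight-line fit with a very flexible 1-nearest-neighbor predictor.

```python
import math
import random
import statistics

def true_scoops(temp):
    # Hypothetical ground truth, for illustration only.
    return 0.5 * math.exp(0.08 * temp)

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x

def nearest_neighbor_predict(xs, ys, query):
    """Return the label of the training point closest to the query (1-NN)."""
    idx = min(range(len(xs)), key=lambda i: abs(xs[i] - query))
    return ys[idx]

rng = random.Random(0)
temps = list(range(10, 36))
query = 28.2  # temperature at which both models are asked for a prediction

linear_preds, knn_preds = [], []
for _ in range(200):
    # Each round simulates a fresh, noisy training sample.
    ys = [true_scoops(t) + rng.gauss(0, 1.0) for t in temps]
    slope, intercept = fit_line(temps, ys)
    linear_preds.append(slope * query + intercept)
    knn_preds.append(nearest_neighbor_predict(temps, ys, query))

var_linear = statistics.pvariance(linear_preds)
var_knn = statistics.pvariance(knn_preds)
print(f"variance of linear predictions: {var_linear:.3f}")
print(f"variance of 1-NN predictions:   {var_knn:.3f}")
```

The nearest-neighbor model copies the noise of a single training point, so its prediction varies far more between training samples than that of the straight line, which averages over all points.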

What is the Bias-Variance Tradeoff?

A good machine learning model should have as little variance as possible, so that slight changes in the data have only a minor impact and the model delivers reliable and robust predictions. At the same time, the bias should also be low so that the predictions are as close as possible to the actual values. The problem with this goal is that lowering the bias of a model tends to raise its variance and vice versa.

This conflict of goals is summarized as the bias-variance tradeoff. To achieve a lower bias, the model must become more complex, which in turn invites overfitting and a higher variance. Conversely, the variance can be lowered by reducing the complexity of the model, but this again leads to an increase in bias.
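The relationship is often written as a decomposition of the expected squared error. The notation here is an assumption and does not come from the text above: $f$ is the true relationship, $\hat{f}$ is the trained model, the observations are $y = f(x) + \varepsilon$ with noise of mean zero and variance $\sigma^2$, and the expectation is taken over training sets and noise.

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

The noise term cannot be influenced by the choice of model, so only the first two terms can be traded against each other.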

The trick now is to find a good compromise between an acceptable level of bias and an acceptable level of variance. It is important to understand this fundamental relationship so that it can be taken into account in every step of model training.

What scenarios can occur in model training?

In reality, this dependency results in a total of four scenarios that can occur:

- Low variance, Low bias: An optimal machine learning model combines a low variance with a low bias. In this case, the model delivers accurate predictions, both for the training data and for new, unseen data.

- High variance, Low bias: With a low bias, the predictions are very accurate on the training data, but a high variance means that they fluctuate greatly and react strongly to small changes. Such a model suffers from overfitting: it has adapted too strongly to the training data and provides only poor predictions for new values.

- Low variance, High bias: A model with these characteristics suffers from underfitting. Its predictions are stable, but due to the bias they are also inaccurate and not close to the actual values. In such a case, a more complex model should be chosen that can recognize the structures in the data.

- High variance, High bias: Such a model is inadequate in both respects, which usually means that the model architecture was chosen incorrectly. It is best to start from scratch to find a better model structure.

In practice, it is important to differentiate between the scenarios presented and react accordingly. In many cases, you come across scenario two or three and have to work specifically on moving towards scenario one.
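A common rule of thumb for telling the scenarios apart is to compare the error on the training data with the error on held-out validation data. The sketch below encodes that heuristic. The function name `diagnose` and both thresholds are hypothetical and would have to be chosen per project.

```python
def diagnose(train_error, val_error, acceptable_error, max_gap):
    """Map training and validation error to one of the four scenarios.

    Heuristic only: a high training error hints at bias, a large gap
    between validation and training error hints at variance.
    """
    high_bias = train_error > acceptable_error
    high_variance = (val_error - train_error) > max_gap
    if high_bias and high_variance:
        return "high variance, high bias"
    if high_variance:
        return "high variance, low bias (overfitting)"
    if high_bias:
        return "low variance, high bias (underfitting)"
    return "low variance, low bias"

# Made-up error values for illustration.
print(diagnose(train_error=0.02, val_error=0.30, acceptable_error=0.10, max_gap=0.05))
print(diagnose(train_error=0.40, val_error=0.42, acceptable_error=0.10, max_gap=0.05))
```

With these invented numbers, the first call reports overfitting and the second one underfitting.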

What methods are there to deal with the Bias-Variance Tradeoff?

There are different approaches to deal with the bias-variance tradeoff. Among other things, the selection of input features plays an important role. A high number of input variables increases the complexity of the model and thus the risk of a high variance. However, some input features may be related to each other, such as outdoor temperature and humidity in the aforementioned model for ice cream consumption, and can be combined. This so-called feature engineering can reduce the complexity of the model without losing important information for the prediction.

Another way to take the bias-variance tradeoff into account in the training process is the selection of the model. Certain model types, for example deep decision trees, are known to produce results with high variance. This characteristic should be taken into account when selecting a model.
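As a minimal sketch of such a combination, two related features can be standardized and averaged into a single input. The helper `combine_features` and the sample values are hypothetical, and the resulting number is not an established meteorological index.

```python
import statistics

def standardize(values):
    """Rescale values to mean 0 and standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

def combine_features(temperatures, humidities):
    """Merge two related features into one by averaging their z-scores."""
    z_temp = standardize(temperatures)
    z_hum = standardize(humidities)
    return [(t + h) / 2 for t, h in zip(z_temp, z_hum)]

# Made-up measurements for illustration.
temperatures = [18.0, 22.0, 25.0, 29.0, 33.0]  # degrees Celsius
humidities = [40.0, 45.0, 55.0, 60.0, 70.0]    # percent relative humidity

combined = combine_features(temperatures, humidities)
print([round(value, 2) for value in combined])
```

The model then receives one input instead of two. This only preserves the relevant information if the two features really move together, so the combined feature should be checked against the original ones on validation data.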

The following tools can also be used:

- Regularization: In regularization, a penalty term is added to the training objective to prevent the model from adapting too closely to the training data, i.e. from overfitting. Regularization thereby lowers the variance, usually at the price of a slightly higher bias.

- Cross-validation: With cross-validation, the data set is split several times into different training and validation subsets. This makes it possible to recognize at an early stage whether the model is fitted too closely to the training data, so that the hyperparameters can be adjusted accordingly.

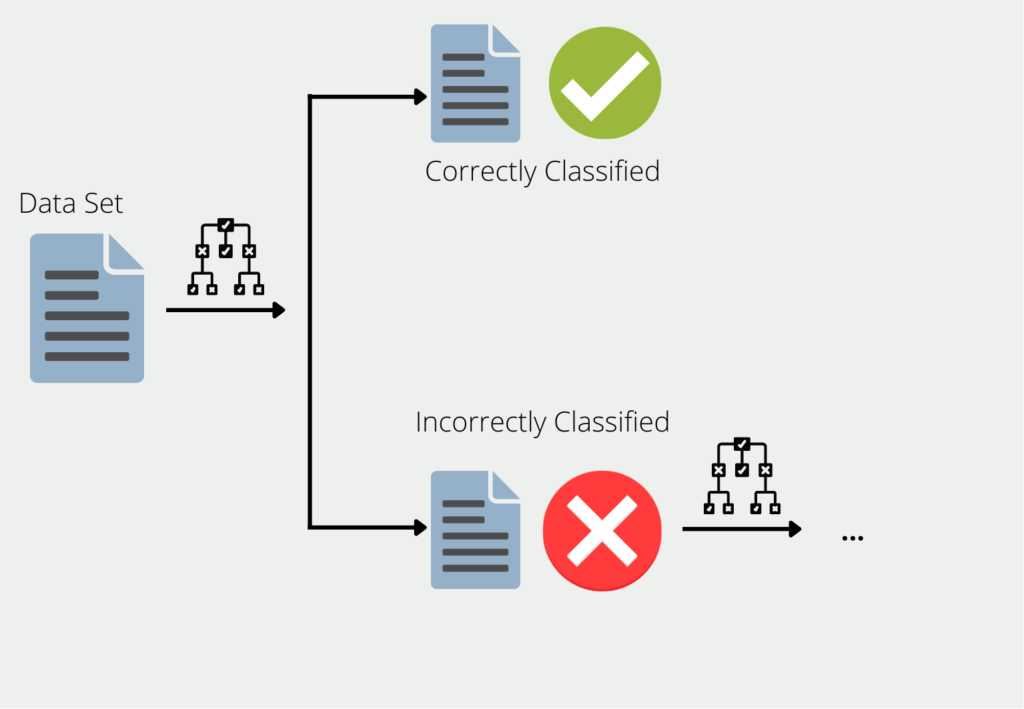

- Ensemble models: In ensemble training, several models are trained on similar data and their predictions are combined. Bagging and boosting are two common approaches. In bagging, different models are trained on random subsets of the data set and the prediction of the ensemble is then the average of the predictions from the sub-models, which mainly reduces the variance. In boosting, on the other hand, the models are trained one after the other and each subsequent model gives more weight to the data that the previous model predicted incorrectly, which mainly reduces the bias.
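The variance-reducing effect of bagging can be sketched with a deliberately unstable base model. Everything in this example is an illustrative assumption: the curve `true_scoops`, the noise level, the 1-nearest-neighbor base model, and the ensemble size of 25.

```python
import math
import random
import statistics

def true_scoops(temp):
    # Hypothetical ground truth, for illustration only.
    return 0.5 * math.exp(0.08 * temp)

def nearest_neighbor_predict(train, query):
    """Predict with the label of the closest training temperature (1-NN)."""
    return min(train, key=lambda point: abs(point[0] - query))[1]

def bagged_predict(train, query, n_models, rng):
    """Average 1-NN predictions over bootstrap resamples of the training set."""
    predictions = []
    for _ in range(n_models):
        resample = rng.choices(train, k=len(train))  # draw with replacement
        predictions.append(nearest_neighbor_predict(resample, query))
    return sum(predictions) / n_models

rng = random.Random(42)
temps = list(range(10, 36))
query = 28.2

single_preds, bagged_preds = [], []
for _ in range(200):
    # A fresh noisy training set per round, to measure prediction variance.
    train = [(t, true_scoops(t) + rng.gauss(0, 1.0)) for t in temps]
    single_preds.append(nearest_neighbor_predict(train, query))
    bagged_preds.append(bagged_predict(train, query, n_models=25, rng=rng))

var_single = statistics.pvariance(single_preds)
var_bagged = statistics.pvariance(bagged_preds)
print(f"variance of a single 1-NN model: {var_single:.3f}")
print(f"variance of the bagged ensemble: {var_bagged:.3f}")
```

Each bootstrap resample leaves out some training points, so the sub-models rely on different neighbors. Averaging them smooths out part of the noise that a single nearest-neighbor model would copy directly.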

In this section, several options were presented for dealing with the bias-variance tradeoff. These must be evaluated for the specific application to achieve an optimal result.
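Two of these tools are often used together: cross-validation can pick the strength of a regularization penalty. The following sketch assumes a one-feature ridge model without intercept on centered, made-up data. The helper names, the candidate penalties, and the fold count are hypothetical choices.

```python
import random

def ridge_slope(xs, ys, lam):
    """Closed-form ridge estimate for the one-feature model y = w * x."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def k_fold_mse(xs, ys, lam, k=5):
    """Average validation mean squared error of the ridge model over k folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        val_idx = set(range(fold, n, k))  # every k-th point forms this fold
        train_x = [xs[i] for i in range(n) if i not in val_idx]
        train_y = [ys[i] for i in range(n) if i not in val_idx]
        w = ridge_slope(train_x, train_y, lam)
        errors = [(ys[i] - w * xs[i]) ** 2 for i in val_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / k

rng = random.Random(0)
# Made-up centered data: temperature deviation vs. deviation in scoops sold.
xs = [rng.uniform(-5, 5) for _ in range(20)]
ys = [0.7 * x + rng.gauss(0, 2.0) for x in xs]

candidates = [0.0, 1.0, 10.0, 100.0]  # penalty strengths to compare
cv_scores = {lam: k_fold_mse(xs, ys, lam) for lam in candidates}
best_lam = min(cv_scores, key=cv_scores.get)
slopes = {lam: ridge_slope(xs, ys, lam) for lam in candidates}

for lam in candidates:
    print(f"lambda={lam:>5}: slope={slopes[lam]:.3f}, CV error={cv_scores[lam]:.3f}")
print("selected lambda:", best_lam)
```

A larger penalty shrinks the slope towards zero, which makes the model more stable but also more biased. The cross-validation error indicates which penalty balances the two for the data at hand.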

In which applications does the Bias-Variance Tradeoff play a role?

The bias-variance tradeoff plays a role in most applications and should always be kept in mind, as it has a central influence on the prediction quality. Prominent applications in which the tradeoff is particularly noticeable include:

- Image recognition: In this area, it is particularly difficult to find the point at which a model is complex enough to correctly recognize all features in an image and yet does not fit the training data so closely that it produces poor results for new data.

- Medical diagnosis: This involves trying to predict whether a person is ill or not based on a patient’s characteristics. Here it is important to achieve the lowest possible bias, as an incorrect prediction can have immense consequences in reality. Nevertheless, the variance should be kept in mind so as not to react too sensitively to slight changes in the data.

- Predicting the stock market: In this application, it is important to find models with a low bias so that important signals in the share prices are not overlooked. At the same time, however, the variance should also be low so that the predictions do not fluctuate too much, which significantly increases the risk of the investment.

- Natural Language Processing: In the field of natural language processing (NLP), just as in image recognition, very complex models with many trainable parameters are required. These architectures therefore tend to overfit, which results in increased variance. Careful hyperparameter tuning therefore plays an important role in this application.

These are just a few selected applications in which the bias-variance tradeoff occurs. It is important to keep the problem in mind for every project and to take appropriate measures during training to keep both the bias and the variance under control.

What you should take away

- The bias-variance tradeoff describes the problem that the bias and the variance are in opposition to each other when training machine learning models. Lowering the variance of a model tends to raise its bias and vice versa.

- If the bias is high, the model is not complex enough to recognize the structures in the data and therefore delivers systematically incorrect predictions.

- With a high variance, on the other hand, the model reacts too strongly to the smallest changes in the data, so that the predictions fluctuate greatly.

- The goal is to find a model architecture that combines a low level of bias with a low variance. In practice, this can be very difficult to achieve.

- To manage the bias-variance tradeoff, feature engineering, a suitable model selection, regularization, cross-validation, or ensemble models can be used, for example.

- The bias-variance tradeoff plays a role in every machine learning application, although it is more pronounced in some applications than in others.

Other Articles on the Topic of Bias-Variance Tradeoff

Cornell University offers an interesting lecture on the Bias-Variance Tradeoff.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.