Model selection is a crucial step in building a successful Machine Learning model. With the vast array of algorithms and techniques available, selecting the best model can be daunting. This article will provide an overview of model selection, including the importance of the process, standard procedures, and factors to consider when choosing a model.

What are the different models that you can select from?

In model selection, there are various types of models that can be considered. Here are some of the most commonly used ones:

- Linear models: These models assume that the output, or a transformation of it such as the log-odds in logistic regression, is a linear combination of the input variables. Examples include linear regression and logistic regression.

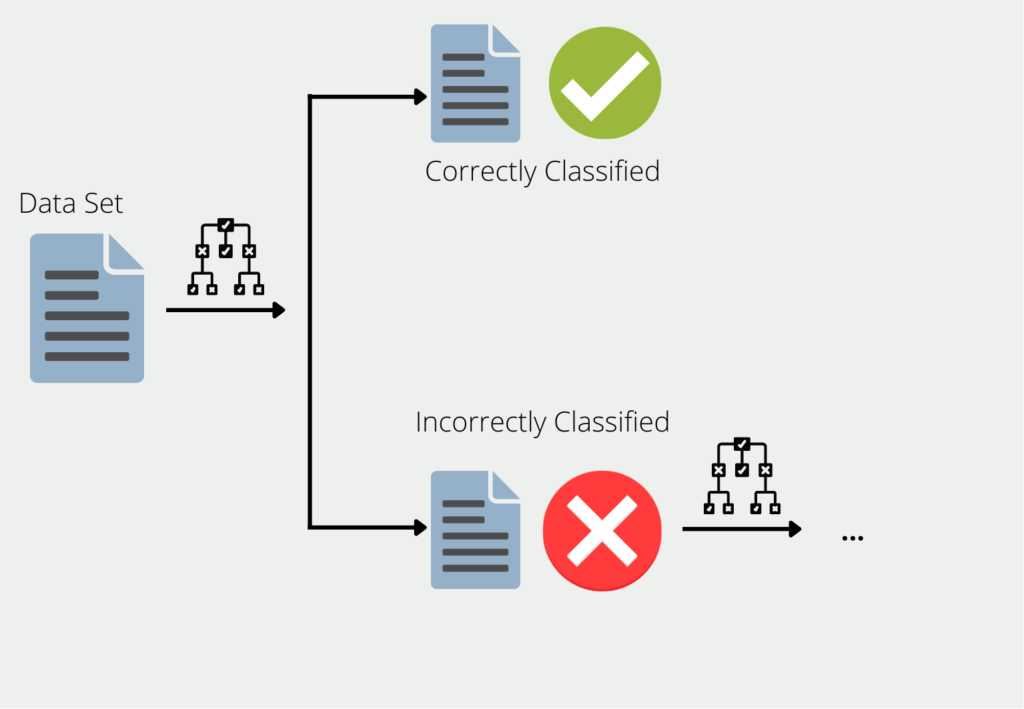

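- Tree-based models: These models make predictions by recursively splitting the data into smaller subsets based on the values of individual features, which produces a decision tree. Examples include single decision trees as well as ensembles of trees such as random forests and gradient-boosting machines.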

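- Support vector machines (SVMs): These models separate the classes with the hyperplane that has the largest margin to the nearest training points. With kernel functions, this hyperplane can lie in a transformed feature space, which allows non-linear decision boundaries. SVMs work well in high-dimensional spaces and are mostly used for classification, although a regression variant also exists.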

- Neural networks: These models are loosely inspired by the structure of the human brain and use layers of interconnected nodes to learn features from the data. Examples include feedforward neural networks, convolutional neural networks (CNNs), and recurrent neural networks (RNNs).

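- Bayesian models: These models use Bayes’ theorem to update probability distributions over their parameters or predictions as more data is observed. Because they produce distributions rather than single values, they are useful both for predicting outcomes and for estimating the uncertainty of those predictions. Examples include naive Bayes classifiers and Bayesian linear regression.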

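- K-nearest neighbors (KNN): This model classifies a new data point by a majority vote among the k training points closest to it, measured with a distance metric such as Euclidean distance. For regression, it averages the values of those neighbors instead.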

Each model has its own strengths and weaknesses, and the choice of model will depend on the problem at hand and the nature of the data.
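The K-nearest neighbors rule from the list above is simple enough to write out directly. The following is a minimal pure-Python sketch, not a library implementation. The function name `knn_predict`, the toy points, and the use of Euclidean distance with k = 3 are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by a majority vote among its k nearest training points."""
    # Sort training indices by Euclidean distance to the query point.
    order = sorted(range(len(train_points)),
                   key=lambda i: math.dist(train_points[i], query))
    # Count the labels of the k closest points and return the most common one.
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two small clusters in 2-D.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
labels = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(points, labels, (1.8, 1.2)))  # prints A (near the first cluster)
print(knn_predict(points, labels, (6.2, 6.8)))  # prints B (near the second cluster)
```

Libraries such as scikit-learn provide this model as `KNeighborsClassifier`. The number of neighbors k is itself a hyperparameter, so it has to be chosen during model selection.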

What are the methods for model selection?

Several methods support the choice of the best model for a given problem, each with its own advantages and disadvantages. Some of them search over the hyperparameters of a model, while others combine models or automate the whole search:

- Grid Search: This method evaluates every combination of hyperparameter values from a predefined grid, typically scoring each candidate with cross-validation. Grid search is simple to implement. However, the number of combinations grows exponentially with the number of hyperparameters, so it can become computationally expensive, especially for large datasets or models with many hyperparameters.

- Random Search: Random search samples a fixed number of hyperparameter combinations at random from the search space and evaluates each one using cross-validation. Because the budget is set in advance, its cost is easy to control. It often finds better settings than a grid of the same size when only a few hyperparameters strongly affect performance, because it tries more distinct values of each one.

- Bayesian Optimization: Bayesian optimization fits a probabilistic model of how the hyperparameters affect performance and uses it to choose the most promising configuration to evaluate next. It typically needs fewer model evaluations than grid or random search, which makes it attractive when each training run is expensive. However, it requires more setup than either of them.

- Ensemble Methods: Rather than selecting a single model, ensemble methods combine the predictions of multiple models to improve overall performance. Examples include bagging, boosting, and stacking. Ensembles often lead to better results, but they are more expensive to train and run, and harder to interpret, than a single model.

- Automated Machine Learning (AutoML): AutoML automates the search for the best model type, preprocessing steps, and hyperparameters. It is an increasingly popular approach because it can save time and resources. However, its results are limited by the available computational budget, and it does not always find the best possible model.

Overall, the choice of method for model selection depends on the problem at hand, the available resources, and the specific requirements of the model.
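The following small sketch illustrates why random search can beat a grid of the same size when one hyperparameter matters more than another. The function `validation_score` is a hypothetical stand-in for a cross-validated score. It is deliberately constructed so that `learning_rate` dominates. In a real project, this function would train and evaluate a model.

```python
import itertools
import random


def validation_score(learning_rate, regularization):
    """Hypothetical stand-in for a cross-validated score (higher is better).

    The score depends strongly on `learning_rate` (best near 0.37)
    and only weakly on `regularization`.
    """
    return -(learning_rate - 0.37) ** 2 - 0.01 * (regularization - 0.5) ** 2


budget = 9  # number of configurations each method may evaluate

# Grid search: 3 x 3 = 9 evaluations, but only 3 distinct learning rates.
grid_values = [0.1, 0.5, 0.9]
grid = list(itertools.product(grid_values, grid_values))

# Random search: 9 evaluations, each with its own learning rate.
rng = random.Random(0)
random_configs = [(rng.uniform(0, 1), rng.uniform(0, 1)) for _ in range(budget)]

best_grid = max(grid, key=lambda cfg: validation_score(*cfg))
best_random = max(random_configs, key=lambda cfg: validation_score(*cfg))

print("distinct learning rates tried (grid):  ", len({lr for lr, _ in grid}))
print("distinct learning rates tried (random):", len({lr for lr, _ in random_configs}))
print("best grid config:  ", best_grid, validation_score(*best_grid))
print("best random config:", best_random, validation_score(*best_random))
```

With nine evaluations, the grid tries only three learning rates, while random search tries nine, which gives it more chances to land near the optimum. In scikit-learn, the corresponding tools are `GridSearchCV` and `RandomizedSearchCV`.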

What are the advantages and disadvantages of model selection?

Model selection involves choosing the best model from a set of candidate models to solve a particular problem. Like any step in the Machine Learning pipeline, it comes with both advantages and disadvantages.

One of the main advantages of model selection is that it helps find the most suitable model for a given problem by comparing multiple candidates. Choosing well improves the performance of the final model and the accuracy of its predictions. Model selection also helps ensure that the model is robust and performs well on new, unseen data. By selecting a model of appropriate complexity, we can also avoid overfitting and underfitting, which occur when a model is too complex or too simple, respectively.

However, model selection also has some disadvantages. One of the main ones is that it can be time-consuming and can require considerable computational resources and expertise. Selecting the best model from a large pool of candidates can be daunting and may take several rounds of experimentation. The outcome can also be misleading: a model that performs best on one dataset or validation split may perform worse on another dataset or under different conditions.

Another challenge in model selection is the trade-off between model complexity and model performance. A more complex model may achieve better performance on the training data, but it may not generalize well to new data, leading to overfitting. On the other hand, a simpler model may not capture all the nuances of the data and may underfit, leading to poor performance. Thus, selecting the optimal model involves finding the right balance between model complexity and model performance.

In summary, model selection is an essential step in Machine Learning that involves selecting the best model from a pool of candidates to solve a given problem. It offers advantages, such as improving model performance and avoiding overfitting and underfitting, but it also has disadvantages, such as being time-consuming and prone to errors. Moreover, finding the right balance between model complexity and model performance is a crucial trade-off that needs to be carefully considered during the model selection process.

What are strategies for comparing models?

Comparing models systematically is essential for selecting the most appropriate one for a given problem. Here are some common strategies:

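- Residual analysis: In this strategy, we examine the differences between the predicted and actual values of the outcome variable. Smaller residuals indicate a closer fit. However, residuals should ideally be evaluated on held-out data, because on the training data they always favor more complex models. A systematic pattern in the residuals, such as a curve when they are plotted against the predictions, also suggests that the model is missing structure in the data.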

- Cross-validation: Cross-validation is a widely used technique for model selection. In k-fold cross-validation, the data is split into k subsets (folds). Each fold serves once as the validation set while the model is trained on the remaining folds, and the k validation scores are then averaged. The model with the best average validation performance is selected. A separate test set that is not used during selection then gives an unbiased estimate of the final model's performance.

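- Information criteria: Information criteria such as the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC) combine the goodness of fit of a model with a penalty for its number of parameters. When models are fitted to the same data, lower AIC or BIC values indicate a better model. BIC penalizes additional parameters more heavily than AIC once there are at least 8 observations.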

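- Hypothesis testing: Hypothesis testing can also be used for model selection, particularly for nested models, where one model is a restricted version of the other. An F-test (or a likelihood-ratio test) checks whether the additional parameters of the larger model improve the fit significantly. If the p-value is small, the larger model is preferred.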

- Domain expertise: Sometimes, domain expertise can help in selecting the most appropriate model. For instance, if we know that the relationship between variables is linear, we can choose a linear model.

Overall, it is important to use a combination of these strategies to select the most appropriate model for a given problem.
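The k-fold procedure described under cross-validation can be sketched in a few lines of NumPy. This is an illustrative implementation under several assumptions: the data is synthetic (a quadratic trend plus Gaussian noise), the candidate models are polynomial fits of different degrees, and the helper `k_fold_mse` is hypothetical.

```python
import numpy as np


def k_fold_mse(x, y, degree, k=5, seed=0):
    """Average validation MSE of a polynomial fit of the given degree over k folds."""
    rng = np.random.default_rng(seed)
    # Shuffle the indices once, then cut them into k roughly equal folds.
    folds = np.array_split(rng.permutation(len(x)), k)
    errors = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        # Fit on the training folds only.
        coeffs = np.polyfit(x[train_idx], y[train_idx], degree)
        # Score on the held-out fold.
        pred = np.polyval(coeffs, x[val_idx])
        errors.append(np.mean((y[val_idx] - pred) ** 2))
    return float(np.mean(errors))


# Synthetic data (an assumption for illustration): quadratic trend plus noise.
rng = np.random.default_rng(42)
x = np.linspace(-3, 3, 60)
y = 0.5 * x**2 - x + rng.normal(scale=1.0, size=x.size)

for degree in (1, 2, 8):
    print(f"degree {degree}: mean validation MSE {k_fold_mse(x, y, degree):.3f}")
```

Cross-validation would select the degree with the lowest average validation error. Here, the quadratic fit should clearly beat the straight line, because the straight line cannot capture the curvature of the data.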

What is the trade-off between model complexity and model performance?

The trade-off between model complexity and model performance is a critical aspect of model selection. In general, more complex models can better fit the data, but they may also suffer from overfitting, where they become too specialized to the training data and perform poorly on new data. On the other hand, simpler models may not fit the training data as well, but they are often more robust and generalize better to new data.
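Polynomial regression makes this effect visible, because the polynomial degree directly controls model complexity. The sketch below assumes synthetic data from a noisy sine curve. It holds out every fourth point as a simple validation split, an arbitrary choice for illustration, and reports the training and validation error for three degrees.

```python
import numpy as np

# Synthetic data (an assumption): a noisy sine curve on [-1, 1].
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(-1, 1, 40))
y = np.sin(np.pi * x) + rng.normal(scale=0.2, size=x.size)

# Hold out every fourth point for validation.
val_mask = np.arange(x.size) % 4 == 0
x_train, y_train = x[~val_mask], y[~val_mask]
x_val, y_val = x[val_mask], y[val_mask]


def mse(coeffs, xs, ys):
    """Mean squared error of the polynomial `coeffs` on the points (xs, ys)."""
    return float(np.mean((np.polyval(coeffs, xs) - ys) ** 2))


results = {}
for degree in (1, 3, 12):
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_val, y_val))
    print(f"degree {degree:2d}: train MSE {results[degree][0]:.3f}, "
          f"validation MSE {results[degree][1]:.3f}")
```

Training error can only go down as the degree increases, because every higher-degree polynomial contains the lower-degree ones as special cases. Validation error does not follow that pattern. The growing gap between the two errors is the signature of overfitting.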

When selecting a model, it is important to strike a balance between model complexity and model performance. One common approach is to use a metric that penalizes more complex models, such as the Akaike Information Criterion (AIC) or the Bayesian Information Criterion (BIC). These criteria take into account both the goodness of fit of the model and the complexity of the model and can help identify the optimal balance between the two.
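For least-squares models with independent Gaussian errors, both criteria can be computed from the residual sum of squares, as in the following sketch. It uses the standard definitions AIC = 2k - 2 ln L and BIC = k ln n - 2 ln L. Here, k is the number of estimated parameters (the polynomial coefficients plus the noise variance), n is the number of observations, and L is the maximized likelihood. The data and the helper `gaussian_aic_bic` are assumptions for illustration.

```python
import numpy as np


def gaussian_aic_bic(y, y_pred, n_params):
    """AIC and BIC for a least-squares fit, assuming i.i.d. Gaussian errors.

    `n_params` counts the regression coefficients plus the noise variance.
    """
    n = y.size
    rss = float(np.sum((y - y_pred) ** 2))
    # Maximized Gaussian log-likelihood, using the MLE variance rss / n.
    log_lik = -0.5 * n * (np.log(2 * np.pi) + np.log(rss / n) + 1)
    aic = 2 * n_params - 2 * log_lik
    bic = n_params * np.log(n) - 2 * log_lik
    return aic, bic


# Synthetic data (an assumption): the true relationship is quadratic.
rng = np.random.default_rng(1)
x = np.linspace(-2, 2, 50)
y = 1.0 + 2.0 * x - 1.5 * x**2 + rng.normal(scale=0.5, size=x.size)

scores = {}
for degree in (1, 2, 6):
    coeffs = np.polyfit(x, y, degree)
    scores[degree] = gaussian_aic_bic(y, np.polyval(coeffs, x), n_params=degree + 2)
    print(f"degree {degree}: AIC {scores[degree][0]:.1f}, BIC {scores[degree][1]:.1f}")
```

The constant terms in the log-likelihood are the same for every model fitted to the same data, so only the differences between the scores matter.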

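Another approach is to use regularization techniques, such as Lasso or Ridge regression, which add a penalty on the size of the coefficients to the model's objective function. Lasso penalizes the sum of the absolute coefficient values and can set some coefficients exactly to zero. Ridge penalizes the sum of the squared coefficient values and shrinks all of them toward zero. Both techniques can help prevent overfitting and improve the generalization performance of the model.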
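Ridge regression has a closed-form solution, w = (X^T X + alpha * I)^(-1) X^T y, which makes the shrinking effect of the penalty easy to observe. The sketch below assumes centered synthetic data, so no intercept term is needed. The parameter `alpha` plays the role of the penalty strength, and the helper `ridge_fit` is hypothetical. Lasso has no such closed form and is usually solved iteratively, so it is not shown here.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge solution w = (X^T X + alpha * I)^(-1) X^T y.

    Assumes X and y are centered, so no intercept term is needed.
    """
    n_features = X.shape[1]
    # Solve the linear system instead of forming the inverse explicitly.
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


# Synthetic data (an assumption): 30 samples, 5 features, two of them irrelevant.
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 1.0])
y = X @ true_w + rng.normal(scale=0.5, size=30)

# Center the data so that the model needs no intercept.
X = X - X.mean(axis=0)
y = y - y.mean()

norms = []
for alpha in (0.0, 1.0, 10.0, 100.0):
    w = ridge_fit(X, y, alpha)
    norms.append(float(np.linalg.norm(w)))
    print(f"alpha={alpha:6.1f}  weights={np.round(w, 2)}  norm={norms[-1]:.3f}")
```

With alpha = 0, the result equals ordinary least squares. As alpha grows, the norm of the weight vector shrinks toward zero, which is how the penalty discourages overly complex fits. In practice, alpha is itself chosen by cross-validation.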

Ultimately, the choice of model complexity depends on the specific problem at hand and the available data. In some cases, a more complex model may be necessary to capture the nuances of the data, while in others, a simpler model may be sufficient. It is important to carefully evaluate different models and select the one that provides the best trade-off between complexity and performance.

This is what you should take with you

- Model selection is a critical step in the machine learning pipeline that helps in choosing the best model for the given task.

- There are various models available for machine learning, including decision trees, random forests, SVMs, and neural networks.

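- Model selection relies on evaluation schemes such as holdout validation, cross-validation, and the bootstrap, combined with search methods such as grid search, random search, and Bayesian optimization.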

- Strategies for comparing models include comparing their performance on the validation set, the complexity of the models, and the interpretability of the models.

- Tuning hyperparameters is an essential step in model selection that helps in optimizing model performance.

- Model selection involves a trade-off between model complexity and model performance.

- The advantages of model selection include improved model performance, reduced overfitting, and improved interpretability of the models.

- The disadvantages of model selection include increased computational cost, the possibility of selecting the wrong model, and the risk of overfitting the validation data when many candidates are compared.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.