In recent years, Machine Learning has become a popular field in the world of computer science and data analytics. It has been used to build models that can make predictions, identify patterns, and classify data based on various features. However, building a Machine Learning model is not just about feeding raw data to an algorithm and getting results. In fact, one of the most important steps in building a successful Machine Learning model is feature engineering. This is the process of selecting and transforming raw data into features that can be used by an algorithm to make accurate predictions.

In this article, we will dive into the world of feature engineering, discussing what it is, why it’s important, the techniques used, and the challenges faced in this process. We will also explore some examples of how to feature engineering in Python.

What is Feature Engineering?

Feature engineering is the process of selecting and transforming raw data into features that can be used by a Machine Learning algorithm to make accurate predictions. The goal of feature engineering is to identify and extract the most relevant information from the raw data and transform it into a format that can be easily understood by machine learning algorithms.

The raw data can come in various forms such as numerical data, categorical data, and text data. These different types of data require different feature engineering techniques to convert them into a format that can be used by Machine Learning algorithms.

For example, in the case of numerical data, the process of feature engineering can involve scaling the features to a common range, normalizing the features, or transforming the features using mathematical functions. In the case of categorical data, the process can involve converting the categories into numerical values, one-hot encoding the categories, or using target encoding techniques. For text data, the process can involve transforming the text into numerical vectors using techniques like bag-of-words, TF-IDF, or word embeddings.

Overall, the purpose of feature engineering is to create a set of features that capture the most relevant information from the raw data and to transform those features into a format that can be easily understood by machine learning algorithms. By doing so, we can build models that are accurate, and effective, and can make predictions on new data.

What types of data are used in Feature Engineering?

In feature engineering, the data used can come in different types, including numerical data, categorical data, and text data. Each type of data requires different techniques to engineer features effectively.

- Numerical Data: Numerical data is represented as continuous or discrete values. Examples of numerical data include temperature, height, weight, and age. The process of feature engineering for numerical data can involve techniques such as scaling, normalization, and transformation. For instance, scaling can involve bringing all the features to the same scale, while normalization can involve converting the features to a range of 0 and 1.

- Categorical Data: Categorical data represents variables that have a limited number of categories. Examples of categorical data include gender, education level, and marital status. Feature engineering for categorical data can involve techniques such as one-hot encoding and target encoding. One-hot encoding involves converting categorical variables into binary vectors, where each category is represented by a unique binary value. Target encoding involves replacing each category with the mean target value of the corresponding class.

- Text Data: Text data represents human language, and it is often unstructured. Examples of text data include product reviews, customer feedback, and social media posts. Feature engineering for text data can involve techniques such as bag-of-words, TF-IDF, and word embeddings. Bag-of-words involves counting the frequency of words in a document and using the counts as features. TF-IDF involves weighting the counts by their inverse document frequency to identify the most relevant words. Word embeddings involve transforming words into numerical vectors using a neural network.

By understanding the different types of data used in feature engineering and the techniques used to engineer features for each type of data, we can create effective features that can be used to build accurate and robust machine learning models.

Which techniques are used in Feature Engineering?

Feature engineering involves a range of techniques for selecting and transforming raw data into features that can be used by Machine Learning algorithms. Here are some common techniques used in feature engineering:

- Scaling and normalization: This technique involves scaling and normalizing numerical features to make them comparable and improve the performance of machine learning models. Common methods used in scaling and normalization include min-max scaling, standardization, and log transformation.

- Imputation: Imputation is the process of filling in missing data in a dataset. Missing data can be imputed using various methods such as mean imputation, median imputation, mode imputation, and k-nearest neighbor imputation.

- Encoding: Encoding is the process of converting categorical data into a format that can be used by machine learning algorithms. Common encoding techniques include one-hot encoding, label encoding, and target encoding.

- Feature selection: Feature selection is the process of identifying and selecting the most important features in a dataset. This technique is used to reduce the number of features used in a machine learning model, making it more efficient and less prone to overfitting. Common feature selection techniques include correlation analysis, recursive feature elimination, and principal component analysis.

- Transformation: Transformation involves changing the scale, distribution, or shape of features to improve the performance of machine learning models. Common transformation techniques include logarithmic transformation, square root transformation, and box-cox transformation.

- Feature extraction: Feature extraction involves creating new features from existing features to improve the performance of machine learning models. Common feature extraction techniques include creating interaction terms, polynomial features, and statistical features such as mean, median, and standard deviation.

By using these techniques and selecting the appropriate ones for each type of data, we can engineer features that are effective and improve the performance of machine learning models.

Why is Feature Engineering so important?

Feature engineering is a critical step in the machine learning pipeline as it determines the quality of features used by machine learning models. Good feature engineering can lead to models that are more accurate, efficient, and robust, while poor feature engineering can lead to models that perform poorly or fail to generalize to new data.

Here are some reasons why feature engineering is important:

- Improves model accuracy: Feature engineering helps to identify the most important information in the data, which is critical for building models that are accurate and effective. By engineering relevant features, we can improve the accuracy of machine learning models and reduce errors.

- Reduces model complexity: Feature engineering can help reduce the complexity of machine learning models by selecting and transforming features that are most relevant to the task at hand. This simplifies the models, making them more efficient and less prone to overfitting.

- Enables model interpretability: Feature engineering can help to make models more interpretable by creating features that are easy to understand and explain. This is important in many applications, such as healthcare, where it is critical to understand how a model makes its predictions.

- Handles missing data: Feature engineering can help handle missing data in a dataset by imputing missing values or creating new features that are robust to missing data. This improves the quality of the data used to train the model, leading to more accurate predictions.

- Increases model efficiency: Feature engineering can help to reduce the number of features used in a model, making it more efficient and faster to train. This is important in applications where speed is critical, such as real-time prediction systems.

In conclusion, feature engineering is a critical step in the machine learning pipeline as it determines the quality of features used by machine learning models. By selecting and transforming features effectively, we can build models that are accurate, efficient, and robust, and that can make accurate predictions on new data.

What are the challenges of Feature Engineering?

One of the key aspects of feature engineering is determining the importance of each feature in a dataset. This is critical for identifying the most relevant features for a given task and selecting them for use in machine learning models. However, there are several challenges associated with determining feature importance:

- Correlated features: Correlated features can cause problems when determining feature importance, as it can be difficult to determine which feature is truly important. For example, if two features are highly correlated, it may be difficult to determine which feature is driving the prediction.

- Non-linear relationships: In some cases, features may have non-linear relationships with the target variable, making it difficult to determine their importance using linear models such as linear regression.

- High-dimensional data: In high-dimensional datasets, where the number of features is much larger than the number of observations, it can be difficult to determine the true importance of each feature.

- Bias: Bias can be introduced when determining feature importance, particularly when using model-based approaches. This can occur when the model is biased towards certain types of features, or when certain features are overrepresented in the dataset.

- Time-consuming and computationally expensive: Determining feature importance can be a time-consuming and computationally expensive task, particularly when using techniques such as permutation importance or SHAP values.

To address these challenges, it is important to carefully select the appropriate technique for determining feature importance based on the specific dataset and problem at hand. It is also important to carefully analyze the results and consider the potential biases that may be present. By addressing these challenges, we can better understand the importance of each feature in a dataset and build more accurate and efficient machine learning models.

How to do Feature Engineering in Python?

Feature engineering is a crucial step in the machine learning pipeline that involves transforming raw data into meaningful features. Here, we present several examples of feature engineering techniques in Python, along with explanations and corresponding code snippets.

- Polynomial Features: Polynomial features allow us to capture non-linear relationships in our data. By creating polynomial combinations of existing features, we can introduce higher-order terms. The

sklearn.preprocessingmodule provides thePolynomialFeaturesclass, which can be used as follows:

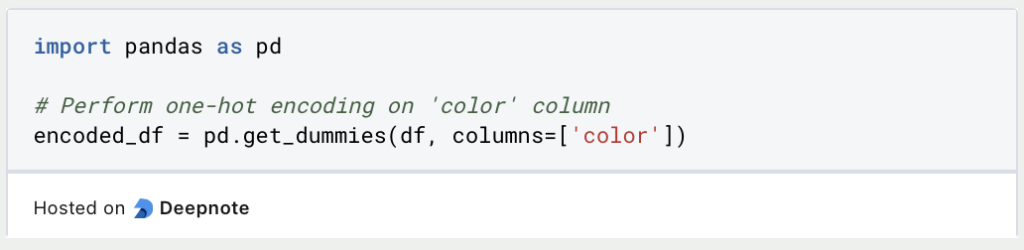

- One-Hot Encoding: One-hot encoding is used to represent categorical variables as binary vectors. Each category becomes a separate binary feature, with a value of 1 indicating the presence of that category and 0 otherwise. The

pandaslibrary offers theget_dummiesfunction for one-hot encoding:

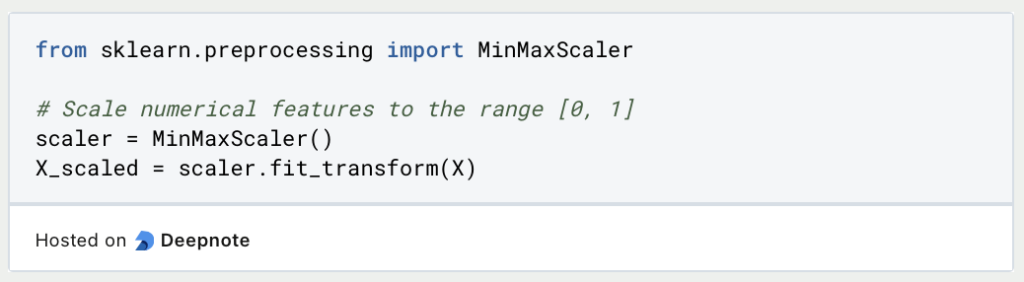

- Feature Scaling: Feature scaling ensures that numerical features are on a similar scale, preventing certain features from dominating others. Two commonly used methods are Min-Max scaling and Standardization. Here’s an example using

sklearn.preprocessing:

- Text Feature Extraction (TF-IDF): TF-IDF (Term Frequency-Inverse Document Frequency) is a technique used to convert text data into numerical features. It assigns weights to words based on their frequency within a document and across the entire corpus. The

sklearn.feature_extraction.textmodule provides theTfidfVectorizerclass:

- Date and Time Features: Extracting meaningful information from date and time data can be useful in various applications. Python’s

datetimemodule provides several methods to extract specific components such as day, month, year, hour, and more:

By utilizing these feature engineering techniques, you can enhance the quality and predictive power of your machine learning models.

This is what you should take with you

- Feature engineering is the process of selecting and transforming raw data into features that are relevant and informative for machine learning models.

- There are various techniques for feature engineering, including feature selection, feature extraction, and feature scaling.

- Different types of data, including numerical, categorical, and text data, require different techniques for feature engineering.

- Feature engineering is important because it improves model accuracy, reduces model complexity, enables model interpretability, handles missing data, and increases model efficiency.

- However, there are challenges associated with determining feature importance, including correlated features, non-linear relationships, high-dimensional data, bias, and computational complexity.

- It is important to carefully select the appropriate technique for determining feature importance and analyze the results to build accurate and efficient machine learning models.

- Good feature engineering requires domain knowledge and creativity, and it is an iterative process that requires continuous refinement and improvement.

What is a Boltzmann Machine?

Unlocking the Power of Boltzmann Machines: From Theory to Applications in Deep Learning. Explore their role in AI.

What is the Gini Impurity?

Explore Gini impurity: A crucial metric shaping decision trees in machine learning.

What is the Hessian Matrix?

Explore the Hessian matrix: its math, applications in optimization & machine learning, and real-world significance.

What is Early Stopping?

Master the art of Early Stopping: Prevent overfitting, save resources, and optimize your machine learning models.

What is RMSprop?

Master RMSprop optimization for neural networks. Explore RMSprop, math, applications, and hyperparameters in deep learning.

What is the Conjugate Gradient?

Explore Conjugate Gradient: Algorithm Description, Variants, Applications and Limitations.

Other Articles on the Topic of Feature Engineering

You can find an interesting article about Feature Engineering on GitHub here.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.