Presto is an open-source distributed SQL engine suitable for querying large amounts of data. It was developed by Facebook in 2012 and subsequently made open-source under the Apache license. The engine does not provide its own database system and is therefore often used with well-known database solutions, such as Apache Hadoop or MongoDB.

How is Presto built?

The structure of Presto is similar to that of classical database management systems (DBMS), which use so-called massively parallel processing (MPP). This uses different components that perform different tasks:

- Client: The client is the starting and ending point of each query. It passes the SQL command to the coordinator and receives the final result from the worker.

- Coordinator: The coordinator receives the commands to be executed from the client and breaks them down in order to analyze how complex their processing is. He plans or coordinates the execution of several commands and monitors their processing with the help of the scheduler. Based on the execution plan, the commands are then passed on to the scheduler.

- Scheduler: The scheduler is a part of the coordinator, which is ultimately responsible for passing on the commands to the workers. It monitors the correct execution of the commands according to the plan created by the coordinator.

- Worker: The workers take over the actual execution of the commands and receive the results from the data sources from the connectors. The final results are then passed back to the client.

- Connector: The Connectors are the interfaces to the supported data sources. They know the peculiarities of the different databases and systems and can therefore adapt the commands.

What applications use Presto?

This SQL engine can be used when connecting different data sources that store large amounts of data. These, even if they are non-relational databases, can be controlled using classic SQL commands. Presto is often used in the Big Data area, where low query times and high performance are of immense importance. It can also be used for queries on data warehouses.

In the industry, many well-known companies already rely on Presto. Besides Facebook, which invented the query engine, these include for example:

- Uber uses the SQL query engine for its massive data lakehouse with well over 59 petabytes of data. Various Data Scientists, as well as regular users, need to be able to access this data in a short period of time.

- At Twitter, the immensely increasing amount of data also became a cost issue as SQL query expenses increased. Therefore, SQL query engines were used to scale the system horizontally. In addition, a Machine Learning model was trained that can predict the expected query time even before a query is made.

- Alibaba relies on SQL query engines to build its data lake.

All of these examples were taken from the Use Case section on the Presto website.

What are the Advantages of using Presto?

Presto offers several advantages when working with large amounts of data. These include:

Open-Source

The open source availability not only offers the possibility to use the tool without licensing costs, but also goes hand in hand with the fact that the source code can be viewed and, with sufficient know-how, also tailored to one’s own needs.

In addition, open source programs also often have a large, active community, so problems can usually be solved by a quick Internet search. These many active users of Presto also ensure that the system is constantly being developed and improved, which in turn benefits all other users.

High Performance

Due to its architecture, this SQL query engine can also query large amounts of data within a few seconds and without large latency periods. This high performance is made possible by the distributed architecture, which enables horizontal scaling of the system.

In addition, Presto can be run both on-premise and in the cloud, so performance can be further improved by moving to the cloud if needed.

High Compatibility

By using the Structured Query Language, Presto is easy to use for many users, since the handling of the query language is already known and this knowledge can still be used. This makes it easy to implement even complex functions.

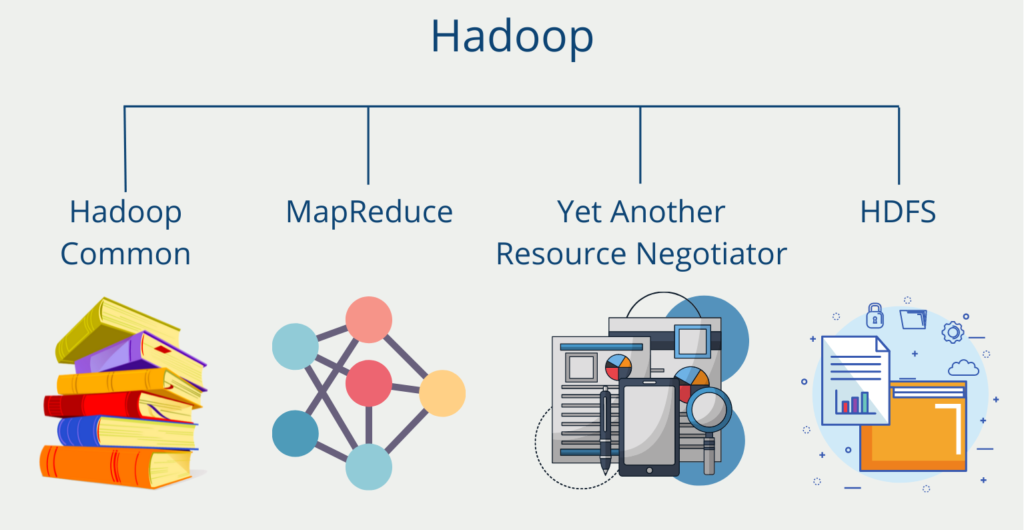

Compatibility is further ensured by a variety of available connectors for common database systems, such as MongoDB, MySQL, or the Hadoop Distributed File System. If these are not sufficient, custom connectors can also be configured or written.

How to query data using Presto?

Querying with Presto involves connecting to a data source and executing SQL queries. Here are the basic steps for querying with Presto:

- Installation: Install Presto on a cluster or a single machine. You can download the latest version from the official website.

- Configuration: Configure the system to connect to the data sources you want to query. For this, you need to have the connectors for each data source set up, the authentication details specified, and the required parameters set.

- Establish connection: Use a client to connect to the Presto cluster or machine. The Presto client can be a command line interface or a GUI tool such as Presto CLI or SQL Workbench.

- Execute SQL queries: Once you are connected, you can run SQL queries against the data sources. The queries can be simple SELECT statements or more complex queries with JOINs, GROUP BYs, and subqueries.

- Optimizing queries: Presto offers several options for optimizing queries, such as setting the number of nodes, configuring memory limits, and using query optimization techniques like cost-based optimization and dynamic filtering.

- Monitor query execution: The program provides several tools for monitoring query execution, such as CLI, the Web UI, and the query logs. You can use these tools to track the progress of queries, monitor resource usage and troubleshoot any problems.

In summary, querying with Presto involves connecting to data sources, executing SQL queries, and optimizing queries for performance. With its fast query execution and support for multiple data sources, the software can be a valuable tool for Big Data processing and analysis.

How can Presto and Hadoop be used together?

Presto does not inherently have a built-in data source that can store information. Therefore, it relies on the use of other, external databases. In practice, Apache Hadoop, or the Hadoop Distributed File System (HDFS), is often used for this purpose.

The connection between HDFS and Presto is established via the Hive Connector. The main advantage is that Presto can be used to easily search through different file formats and therefore search through all HDFS files. It is often used as an alternative to Hive since Presto is optimized for fast queries, which Hive cannot offer.

What are the Differences between Presto and Spark?

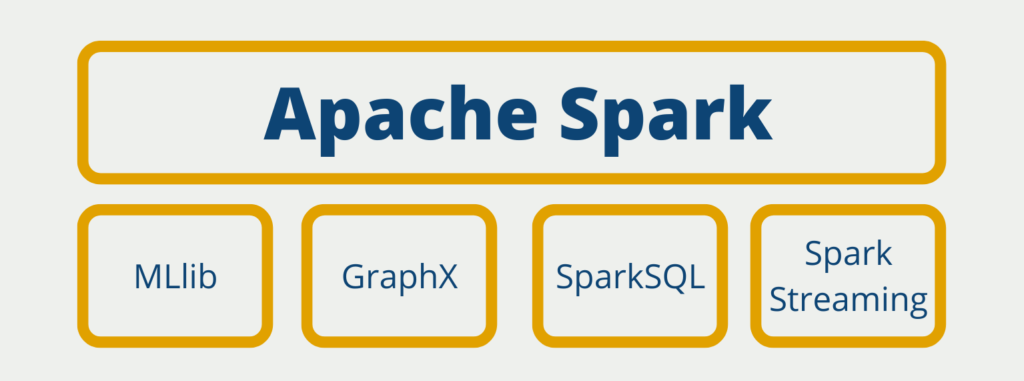

Apache Spark is a distributed analytics framework that can be used for many different Big Data applications. It relies on in-memory data storage and parallel execution of processes to ensure high performance. It is one of the most comprehensive Big Data systems on the market and offers, among other things, batch processing, graph databases, or support for Machine Learning.

It is often mentioned in connection with Presto or even understood as a competitor to it. However, the two systems are very different and share few similarities. Both programs are open-source available systems when working with Big Data. They can both offer good performance, due to their distributed architecture and the possibility of scaling. Accordingly, they can also be run both on-premise and in the cloud.

However, besides these (albeit rather few) similarities, Apache Spark and Presto differ in some fundamental characteristics:

- Spark Core does not support SQL queries, for now, you need the additional SparkSQL component for that. Presto, on the other hand, is a travel SQL query engine.

- Spark offers a very wide range of application possibilities, for example, also through the possibility of building and deploying entire machine learning models.

- Presto, on the other hand, specializes primarily in the fast processing of data queries for large data volumes.

This is what you should take with you

- Presto is an open-source distributed SQL engine suitable for querying large amounts of data.

- The engine can be used for distributed queries with fast response times and low latency.

- Presto differs from Apache Spark in that it is primarily focused on data querying, while Spark offers a wide range of application capabilities.

- Since Presto does not have its own data source, it is often used together with Apache Hadoop as an alternative to their Hive Connector.

What is OpenAPI?

Explore OpenAPI: A Comprehensive Guide to Building and Consuming RESTful APIs. Learn How to Design, Document, and Test APIs.

What is Data Governance?

Ensure the quality, availability, and integrity of your organization's data through effective data governance. Learn more here.

What is Data Quality?

Ensuring Data Quality: Importance, Challenges, and Best Practices. Learn how to maintain high-quality data to drive better business decisions.

What is Data Imputation?

Impute missing values with data imputation techniques. Optimize data quality and learn more about the techniques and importance.

What is Outlier Detection?

Discover hidden anomalies in your data with advanced outlier detection techniques. Improve decision-making and uncover valuable insights.

What is the Bivariate Analysis?

Unlock insights with bivariate analysis. Explore types, scatterplots, correlation, and regression. Enhance your data analysis skills.

Other Articles on the Topic of Presto

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.