Apache Spark is a distributed analytics framework that can be used for many Big Data applications. It relies on in-memory data storage and parallel execution of processes to ensure high performance. It is one of the most comprehensive Big Data systems on the market and offers, among other things, batch processing, graph databases, or support for Machine Learning.

What is Apache Spark?

The framework was initially developed at the University of Berkeley in 2009 and has been open-source since then. This is one of the reasons why it is already used by many large companies such as Netflix, Yahoo, and eBay.

The prominent usage is also due to the fact that Apache Spark is a very broad framework for almost all Big Data applications. As a result, it is used by users for machine learning models, to create graph databases, or to process streaming data.

A fundamental feature of Spark is its distributed architecture, i.e. the use of computer clusters to redistribute peak loads. This means that even large volumes of data can be processed very efficiently and cost-effectively.

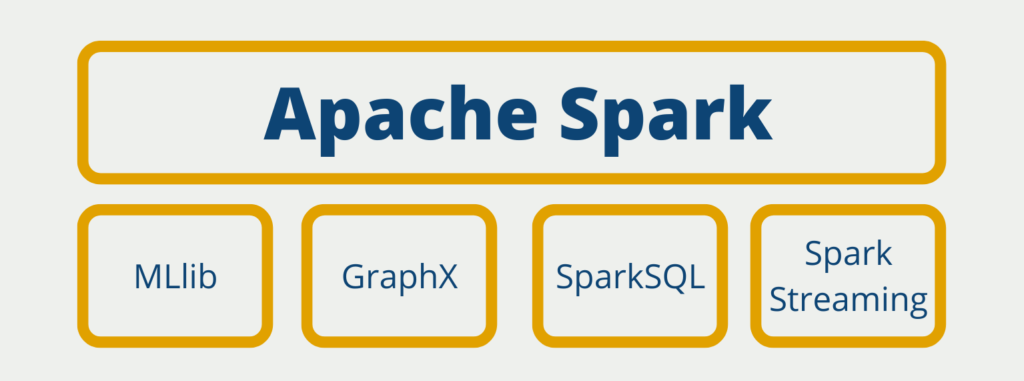

Which Components belong to Spark?

Spark provides components for a wide variety of data science applications. SparkCore is the brain of the Spark universe. This is where tasks are controlled and distributed, and basic functionality is provided.

The Machine Learning Library (MLlib) provides basic models for working with artificial intelligence. This includes the most common algorithms in this area such as Random Forest or K-Means Clustering.

Graphs are a modern type of data storage from the field of NoSQL databases. They can be used to store social networks or represent knowledge structures, for example. The creation and processing of such graphs in Spark are enabled by GraphX.

The data storage in Apache Spark is done by the so-called RDDs, which do not have any structure at first. However, they can be converted into so-called Spark DataFrames, which are very similar to Pandas’ DataFrames. With the help of SparkSQL, classic SQL queries can be executed on DataFrames, so that structured access to the data is possible.

In many applications, real-time data processing is required. For this purpose, so-called streams are set up in which data is written at irregular intervals. With the help of SparkStreaming, these data streams can be processed by combining them into smaller data packets.

What are Resilient Distributed Datasets?

The basic data structure of Apache Spark is Resilient Distributed Datasets (RDD) and because of their structure, data processing in Spark is also so much faster than in Hadoop. In short, it is a collection of data partitions to which you have read-only access. These partitions can be easily distributed across the different computers in the cluster. All components in Apache Spark are, in effect, designed to process and forward RDD files.

One of the characteristics is that the files cannot be changed after creation. This is only possible by creating a new RDD file and writing the changed data into it. The files are also kept in memory, which is one of the main reasons for Spark’s performance. However, RDD files can also be stored normally on a disk if desired.

How to program with Apache Spark?

Programming with Apache Spark involves using various programming interfaces to read, transform, and analyze large datasets. Spark provides several APIs, including Scala, Java, Python, and R, to interact with Spark’s core functionalities.

Here are some key aspects of programming with Apache Spark:

- DataFrames and Datasets: Spark’s DataFrames and Datasets APIs provide a high-level abstraction for working with structured and semi-structured data. They provide a similar programming interface to SQL and allow for easy manipulation and transformation of data.

- RDDs: Resilient Distributed Datasets (RDDs) are the fundamental building blocks of Spark. RDDs are an immutable distributed collection of objects that can be processed in parallel. They allow for efficient distributed computing but require more low-level programming than DataFrames and Datasets.

- Transformations and Actions: Spark’s APIs provide two types of operations: transformations and actions. Transformations are operations that create a new RDD, while actions are operations that return a result to the driver program or write data to storage.

- Spark SQL: Spark SQL is a Spark module for structured data processing. It provides a programming interface to work with structured data using SQL queries and DataFrames.

- Machine Learning: Spark’s MLlib library provides a set of machine learning algorithms for clustering, classification, regression, and collaborative filtering. It provides a high-level API for building and training machine learning models.

Programming with Apache Spark can be challenging, as it requires an understanding of distributed computing and parallel processing. However, Spark’s APIs provide powerful abstractions for working with large datasets and enable developers to write efficient, scalable, and fault-tolerant applications.

What are the Benefits of using Spark?

Spark’s benefits can be summarized in three broad themes:

- Performance: Thanks to its distributed architecture, Spark is very scalable and offers outstanding performance when processing large amounts of data. The fact that many calculations take place in memory, i.e. in the main memory, accelerates the processes even more. In one test, Apache Spark was even found to be 100 times faster than Hadoop in certain calculations.

- Ease of use: Apache Spark is also very easy to use for newcomers, for example by reverting to already familiar structures, such as the DataFrame. In addition, many of the applications can be called via APIs.

- Unity: The wide range of applications that can be implemented with Apache Spark make it possible to perform various stages of data processing in a single tool. This ensures the compatibility of the different steps and there is no need to manage different licenses or access to programs.

Which Applications use Apache Spark?

Due to the many functionalities and components that Spark already offers, the tool has become quasi-standard for most Big Data applications. This includes all use cases in which large amounts of data are to be processed with high performance. The following applications are of particular interest:

- Merging of different data sources and unification of information, e.g. in ETL context

- Analysis of large data sets through the possibility of structured SQL queries and data stored in memory

- Building basic machine learning models, which are already supported by Spark

- Introducing and processing real-time data streams using SparkStreaming.

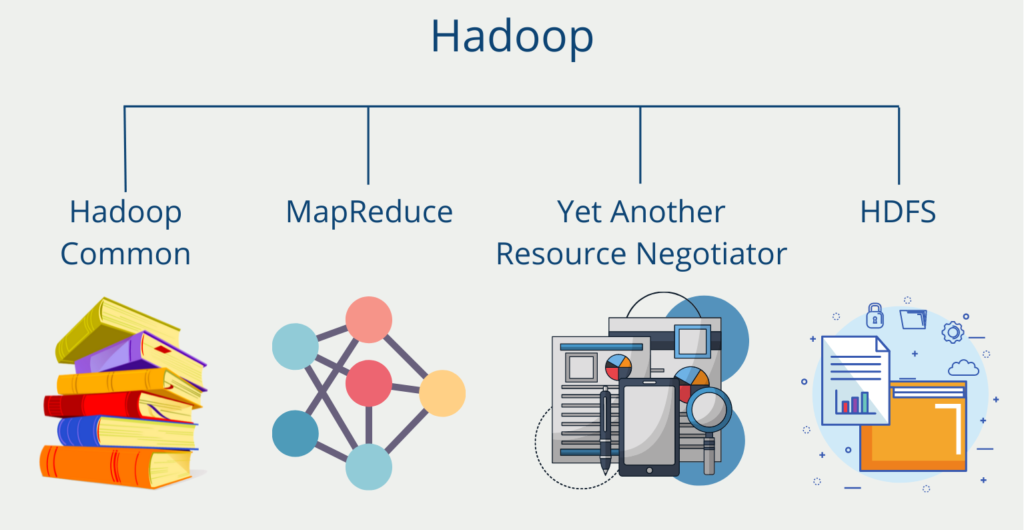

How is Spark different from Hadoop?

Apache Spark is considered an improvement on Hadoop in most sources because it can process large amounts of data much faster. The big advantage in the introduction of Hadoop was the use of the MapReduce algorithm. This splits complex computation into a Map and a Reduce phase, speeding up the process. However, it does so by relying on files that reside on disk. At this point, Spark draws its great performance advantage, since the data is mostly stored in memory. This is one of the reasons why Spark is up to 100 times faster than Hadoop, depending on the application.

Besides this main difference, there are other fundamental differences between Hadoop and Spark:

- Performance: As already mentioned, Apache Spark works mainly out of memory, while Hadoop stores data on disk and processes it in batches.

- Cost: Spark’s performance advantage naturally comes at a higher cost. Working memory is significantly more expensive compared to main memory (SSD or HDD).

- Data processing: Both systems are based on a distributed architecture. However, Hadoop is optimized for batch processing and one-time processing of large amounts of data. Spark, on the other hand, is better suited for processing data streams through the SparkStreaming component.

- Machine Learning: Apache Spark provides a simple way to use proven machine learning models quickly and easily through the Machine Learning Library.

These points come in large part from IBM’s detailed comparison between Spark and Hadoop. There you can find even more details about the differences between Hadoop and Spark.

What are the Differences between Presto and Spark?

Apache Presto is an open-source distributed SQL engine suitable for querying large amounts of data. It was developed by Facebook in 2012 and subsequently made open-source under the Apache license. The engine does not provide its own database system and is therefore often used with well-known database solutions, such as Apache Hadoop or MongoDB.

It is often mentioned in connection with Apache Presto or even understood as a competitor to it. However, the two systems are very different and share few similarities. Both programs are open-source available systems when working with Big Data. They can both offer good performance, due to their distributed architecture and the possibility of scaling. Accordingly, they can also be run both on-premise and in the cloud.

However, besides these (albeit rather few) similarities, Apache Spark and Apache Presto differ in some fundamental characteristics:

- Spark Core does not support SQL queries, for now, you need the additional SparkSQL component for that. Presto, on the other hand, is a travel SQL query engine.

- Spark offers a very wide range of application possibilities, for example, also through the possibility of building and deploying entire machine learning models.

- Apache Presto, on the other hand, specializes primarily in the fast processing of data queries for large data volumes.

This is what you should take with you

- Apache Spark is a distributed Big Data framework that can be used for a wide variety of use cases.

- Spark consists of various components. These include GraphX, with which graphs can be created and processed, and the Machine Learning Library, which provides widespread ML algorithms for use.

- The advantages include the high-performance processing of large amounts of data, user-friendliness, and the uniformity of the solution through the various components.

- In practice, Spark is primarily used for data processing in the Big Data area and for reading out real-time data streams.

What is the Univariate Analysis?

Master Univariate Analysis: Dive Deep into Data with Visualization, and Python - Learn from In-Depth Examples and Hands-On Code.

What is OpenAPI?

Explore OpenAPI: A Comprehensive Guide to Building and Consuming RESTful APIs. Learn How to Design, Document, and Test APIs.

What is Data Governance?

Ensure the quality, availability, and integrity of your organization's data through effective data governance. Learn more here.

What is Data Quality?

Ensuring Data Quality: Importance, Challenges, and Best Practices. Learn how to maintain high-quality data to drive better business decisions.

What is Data Imputation?

Impute missing values with data imputation techniques. Optimize data quality and learn more about the techniques and importance.

What is Outlier Detection?

Discover hidden anomalies in your data with advanced outlier detection techniques. Improve decision-making and uncover valuable insights.

Other Articles on the Topic of Apache Spark

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.