In the realm of machine learning, labeled data plays a crucial role in training accurate models. However, obtaining labeled data can be costly and time-consuming. Enter semi-supervised learning, a paradigm that harnesses the untapped potential of vast amounts of unlabeled data alongside limited labeled data. By seamlessly blending the benefits of both supervised and unsupervised learning, semi-supervised learning opens doors to new possibilities and expands the boundaries of what can be achieved. In this article, we delve into the world of semi-supervised learning, exploring its concepts, techniques, and real-world applications. Join us as we unravel the mysteries of this powerful approach that has revolutionized the field of machine learning.

What is Semi-Supervised Learning?

Semi-supervised learning is a type of Machine Learning technique that combines both labeled and unlabeled data to train a model. The goal is to use the small amount of labeled data available to guide the model while leveraging the vast amount of unlabeled data to improve the model’s accuracy.

In semi-supervised learning, the model is first trained on a small amount of labeled data to establish a basic understanding of the problem. The model is then used to label a large amount of unlabeled data, and the newly labeled data is added to the training set. This process is repeated iteratively until the model reaches a satisfactory level of accuracy.

How does Semi-Supervised Learning work?

Semi-supervised learning is a type of Machine Learning where the algorithm is trained on both labeled and unlabeled data to improve its performance in making predictions. In this section, we will discuss how semi-supervised learning works using an example of image classification.

Suppose we have a dataset of images of animals, where each image is labeled as either a cat or a dog. We have a limited number of labeled images, let’s say 1000, and a much larger set of unlabeled images, let’s say 100,000. We want to build a model that can accurately classify images as either a cat or a dog.

In supervised learning, we would use the 1000 labeled images to train a model, such as a convolutional neural network (CNN), to classify new images. However, the performance of the model would be limited by the small number of labeled images, and it may not generalize well to new, unseen images.

In semi-supervised learning, we can use the 1,000 labeled images along with the 100,000 unlabeled images to improve the performance of the model. The algorithm first trains on the labeled images to learn the features that distinguish cats from dogs. Then, it uses these learned features to classify the unlabeled images.

There are several approaches to semi-supervised learning, but one common method is called “self-training”. In self-training, the model is trained on the labeled images, and then it uses these labeled images to predict the labels of the unlabeled images. The model is then retrained on both the labeled and newly predicted labeled images, and the process is repeated iteratively. This process is illustrated below:

- Train the model on the labeled images

- Use the trained model to predict the labels of the unlabeled images

- Add the predicted labeled images to the labeled set

- Retrain the model on the combined set of labeled images

- Repeat steps 2-4 until convergence

Through this process, the model can learn to identify patterns in the unlabeled images and improve its performance on the task. In our example, the semi-supervised model can learn to identify common features of cats and dogs, such as fur color, ear shape, and nose structure, from the labeled images. Then, it can use these features to predict the labels of the unlabeled images. By iteratively retraining on the predicted labeled images, the model can improve its ability to distinguish between cats and dogs and make more accurate predictions.

What are the Differences between Machine Learning Methods?

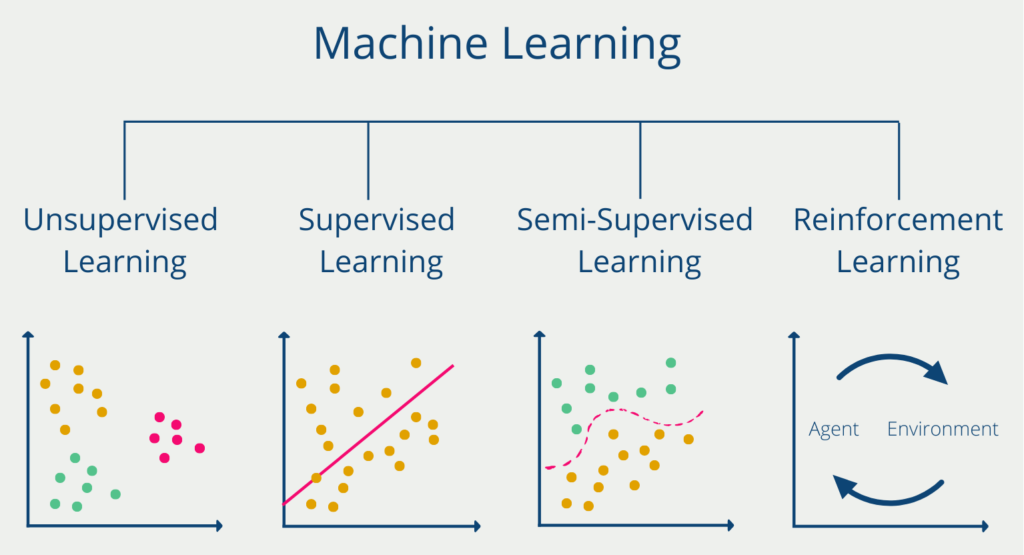

In the field of Machine Learning, a distinction is made between a total of four different learning methods:

- Supervised Learning algorithms learn relationships using a dataset that already contains the label that the model should predict. However, they can only recognize and learn structures that are contained in the training data. Supervised models are used, for example, in the classification of images. Using images that are already assigned to a class, they learn to recognize relationships that they can then apply to new images.

- Unsupervised Learning algorithms learn from a dataset, but one that does not yet have these labels. They try to recognize their own rules and structures in order to be able to classify the data into groups that have the same properties as far as possible. Unsupervised learning can be used, for example, when you want to divide customers into groups based on common characteristics. For example, order frequency or order amount can be used for this purpose. However, it is up to the model itself to decide which characteristics it uses.

- Semi-supervised Learning is a mixture of supervised learning and unsupervised learning. The model has a relatively small data set with labels available and a much larger data set with unlabeled data. The goal is to learn relationships from the small amount of labeled information and test those relationships in the unlabeled data set to learn from them.

- Reinforcement Learning is an exciting branch of Machine Learning that enables an agent to learn and make decisions in dynamic environments. At its core, reinforcement learning follows a simple principle: an agent interacts with an environment, takes actions, receives feedback in the form of rewards or punishments, and learns to maximize its cumulative reward over time. By employing techniques such as value iteration, policy gradients, or deep Q-networks, reinforcement learning algorithms iteratively learn optimal strategies by exploring and exploiting the environment. Through this continuous cycle of action and learning, reinforcement learning empowers machines to adapt and improve their decision-making abilities, leading to remarkable achievements in areas like game playing, robotics, and autonomous systems.

Semi-supervised learning differs from unsupervised and supervised learning in several ways:

- Labeled Data: In supervised learning, the model is trained on labeled data. In unsupervised learning, the model is trained on unlabeled data. In semi-supervised learning, the model is trained on both labeled and unlabeled data.

- Amount of Labeled Data: In supervised learning, the model requires a large amount of labeled data to achieve high accuracy. In unsupervised learning, the model does not require any labeled data. In semi-supervised learning, the model requires a small amount of labeled data to achieve high accuracy.

- Precision: Supervised learning is the most precise of the three techniques, as it is trained on labeled data. Unsupervised learning is less precise, as it is not trained on labeled data. Semi-supervised learning falls somewhere in between, as it is trained on both labeled and unlabeled data.

- Scalability: Unsupervised learning is highly scalable, as it can be used on large amounts of unlabeled data. Supervised learning requires a large amount of labeled data, which may be difficult to obtain. Semi-supervised learning is scalable to some extent, but it requires at least a small amount of labeled data to start.

Which models are part of Semi-Supervised Learning?

Semi-supervised learning is a type of Machine Learning that uses both labeled and unlabeled data to train a model. In contrast to supervised learning, where only labeled data is used, and unsupervised learning, where only unlabeled data is used, semi-supervised learning combines the strengths of both approaches.

Several models can be used in semi-supervised learning, including self-training, co-training, multi-view learning, generative models, and graph-based methods. Self-training involves training a model on a small amount of labeled data, using it to predict the labels of the unlabeled data, and adding the most confident predictions to the labeled dataset. The model is then retrained on the expanded labeled dataset, and the process is repeated iteratively until the desired level of accuracy is achieved.

Co-training involves training multiple models on different subsets of the features, using each model to label the data for the other model, and combining the labeled data to improve accuracy. Multi-view learning uses multiple views of the data to train the model, with each view providing different information that can be combined to improve accuracy. Generative models learn the underlying distribution of the data by training on both labeled and unlabeled data, allowing new labeled data to be generated and added to the training set. Graph-based methods create a graph where the data points are nodes and the edges represent the relationships between the data points, using the labeled data to propagate labels to the unlabeled data through the graph.

What are the applications of Semi-Supervised Learning?

Semi-supervised learning has many potential applications in various fields. Here are some specific examples:

- Image and speech recognition: Semi-supervised learning can be used to improve image and speech recognition. By using a small amount of labeled data, the model can be trained to recognize specific objects or words, while leveraging the vast amount of unlabeled data to improve its accuracy.

- Natural language processing: Semi-supervised learning can be used in natural language processing to learn new concepts or entities with just a few labeled examples. This can be particularly useful in scenarios where new words or concepts are constantly being introduced, such as in social media or news articles.

- Fraud detection: Semi-supervised learning can be used to detect fraud in financial transactions. By using a small amount of labeled data to identify fraudulent transactions, the model can leverage the vast amount of unlabeled data to identify new fraudulent patterns.

What are the advantages and disadvantages of Semi-Supervised Learning?

Semi-supervised learning has several advantages over supervised and unsupervised learning methods. One of the main advantages is that it can leverage the best of both worlds – the labeled and the unlabeled data. With labeled data, it can learn from clear examples and make accurate predictions. With unlabeled data, it can learn from more data and generalize better. Additionally, semi-supervised learning can be more cost-effective than fully supervised learning as labeling large amounts of data can be expensive.

However, semi-supervised learning also has some disadvantages. One of the main challenges is that it requires a large amount of unlabeled data to be effective. If the amount of unlabeled data is limited, then the performance gains may not be significant. Additionally, semi-supervised learning models can be complex, and require more computational resources to train than traditional supervised learning models. Lastly, semi-supervised learning models can be prone to overfitting if the amount of labeled data is too small or the model is too complex.

Despite these challenges, semi-supervised learning has shown great promise in a variety of applications, including natural language processing, computer vision, and speech recognition. With advancements in deep learning and other related fields, it is likely that semi-supervised learning will continue to be an important research area and a useful tool in solving real-world problems.

What are the challenges of Semi-Supervised Learning?

Semi-supervised learning presents several challenges, including:

- Unlabeled data quality: The quality of the unlabeled data can significantly affect the performance of semi-supervised learning. Low-quality unlabeled data may introduce noise and make the model less accurate.

- Limited availability of labeled data: Semi-supervised learning assumes that there is a limited amount of labeled data available. However, in some cases, obtaining labeled data can be time-consuming and expensive.

- Domain adaptation: In some cases, the distribution of labeled and unlabeled data may differ significantly. In such cases, the model may not generalize well to new, unseen data.

- Class imbalance: In some cases, the distribution of classes in the labeled and unlabeled data may be imbalanced, which can make the model biased towards the majority class.

- Model complexity: These learning models can be more complex than supervised learning models. Training such models requires more computational resources, which can be a challenge for some applications.

What are practical considerations and tips for getting started with Semi-Supervised Learning?

When diving into the realm of semi-supervised learning, there are several practical considerations and tips to keep in mind. These guidelines will help you navigate the process and make the most of your labeled and unlabeled data.

- Data Preparation – Balancing Labeled and Unlabeled Data: To begin, assess the availability and quality of your labeled data. Take note of the ratio between labeled and unlabeled data, as this will impact the learning process. Techniques like active learning or bootstrapping can help iteratively label more data from the unlabeled set. Additionally, ensure that both labeled and unlabeled data come from the same distribution to avoid introducing bias.

- Selecting Appropriate Algorithms: Next, explore different semi-supervised learning algorithms that are suitable for your specific problem domain. Familiarize yourself with techniques like self-training, co-training, or generative models. It’s crucial to understand the assumptions and limitations of each algorithm to make an informed choice that aligns with your dataset and problem requirements. Consider the scalability of the algorithm as well, particularly if you’re dealing with larger datasets.

- Addressing Bias and Labeling Errors: Be mindful of potential biases present in your labeled data and how they might affect the learning process. Mitigate biases by employing techniques such as debiasing algorithms or carefully selecting representative samples. Additionally, account for potential labeling errors by assessing the quality of labeled data, taking into consideration the expertise of annotators, and potentially applying error-correcting output codes.

- Regularization and Model Complexity: Overfitting is a concern, especially when working with limited labeled data. Employ regularization techniques to combat this issue. Experiment with different methods, such as L1 or L2 regularization, to strike the right balance between the influence of labeled and unlabeled data and prevent the model from becoming overly complex.

- Evaluation and Performance Metrics: When evaluating your semi-supervised learning model, choose appropriate performance metrics that align with your specific task and problem domain. Classification accuracy, precision, recall, or F1-score are commonly used metrics. Employ cross-validation techniques to ensure consistent evaluation. Additionally, compare the performance of your semi-supervised learning approach with traditional supervised or unsupervised methods to gain insights into its effectiveness.

- Incorporating Domain Knowledge: Leverage domain knowledge to guide the semi-supervised learning process. Incorporating expert rules or constraints into the learning algorithms can enhance performance and interpretability. Explore possibilities for combining semi-supervised learning with transfer learning or domain adaptation techniques to achieve better generalization.

Remember, these practical considerations and tips are meant to serve as a starting point. Every dataset, problem domain, and available resources are unique, so experimentation and iteration are key. By adapting and refining your approach based on your specific circumstances, you can harness the power of semi-supervised learning to unlock valuable insights from unlabeled data.

This is what you should take with you

- Semi-supervised learning is a powerful approach to Machine Learning that combines labeled and unlabeled data to improve accuracy.

- Multiple models can be used in semi-supervised learning, including self-training, co-training, multi-view learning, generative models, and graph-based methods.

- The specific model used will depend on the problem at hand and the available data.

- Semi-supervised learning is particularly useful when labeled data is limited or expensive to obtain.

- Semi-supervised learning has many applications, including in natural language processing, computer vision, and speech recognition.

- Research in semi-supervised learning is ongoing, and new models and techniques are being developed to improve accuracy and efficiency.

What is a Boltzmann Machine?

Unlocking the Power of Boltzmann Machines: From Theory to Applications in Deep Learning. Explore their role in AI.

What is the Gini Impurity?

Explore Gini impurity: A crucial metric shaping decision trees in machine learning.

What is the Hessian Matrix?

Explore the Hessian matrix: its math, applications in optimization & machine learning, and real-world significance.

What is Early Stopping?

Master the art of Early Stopping: Prevent overfitting, save resources, and optimize your machine learning models.

What is RMSprop?

Master RMSprop optimization for neural networks. Explore RMSprop, math, applications, and hyperparameters in deep learning.

What is the Conjugate Gradient?

Explore Conjugate Gradient: Algorithm Description, Variants, Applications and Limitations.

Other Articles on the Topic of Semi-Supervised learning

Scikit-Learn has an interesting article about Semi-Supervised Learning.

Niklas Lang

I have been working as a machine learning engineer and software developer since 2020 and am passionate about the world of data, algorithms and software development. In addition to my work in the field, I teach at several German universities, including the IU International University of Applied Sciences and the Baden-Württemberg Cooperative State University, in the fields of data science, mathematics and business analytics.

My goal is to present complex topics such as statistics and machine learning in a way that makes them not only understandable, but also exciting and tangible. I combine practical experience from industry with sound theoretical foundations to prepare my students in the best possible way for the challenges of the data world.